1. What Is BlackBox Exporter

BlackBox Exporter is Prometheus's official black-box monitoring solution. It probes endpoints over HTTP, HTTPS, DNS, TCP, and ICMP, and is commonly used to check whether a service is up and running normally.

1.1 Use Cases

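- HTTP checks

Define request headers; check the HTTP status code, HTTP response headers, and HTTP body content.

- TCP checks

Check that the ports of business components are listening; define and check application-layer protocols.

- ICMP checks

Host liveness detection.

- POST checks

API connectivity.

- SSL certificate expiration time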

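1.2 Deployment Options

1. For small Kubernetes clusters with only a few monitored targets, use static_configs to define the targets statically.

2. For large Kubernetes clusters with many monitored targets, use file-based discovery (file_sd_configs), or use kubernetes_sd_configs to discover and collect from Services automatically.

3. file_sd_configs can also monitor services that run outside a Kubernetes cluster.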
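As a minimal sketch of options 2 and 3, the Python snippet below produces a target file in the JSON shape that file_sd_configs reads. That shape is a list of groups, each with a `targets` list and optional `labels`. The target URLs and the `group` label are hypothetical. A job would list the saved file under `file_sd_configs: - files: [...]` and rewrite the targets with the same relabel_configs used for static targets.

```python
import json


def build_file_sd(targets, labels=None):
    """Return a file_sd document: a list holding one target group."""
    group = {"targets": list(targets)}
    if labels:
        group["labels"] = dict(labels)
    # A file_sd file contains a JSON list of such groups.
    return [group]


if __name__ == "__main__":
    # Hypothetical targets and label, for illustration only.
    doc = build_file_sd(
        ["https://www.example.com", "https://api.example.com/healthz"],
        {"group": "external-website"},
    )
    # Save this output as a .json file and list it under file_sd_configs.files.
    print(json.dumps(doc, indent=2))
```

Prometheus watches the listed files and picks up changes without a reload. This makes the approach convenient for long target lists or targets outside the cluster.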

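2. Black-Box and White-Box Monitoring

2.1 What Are White-Box and Black-Box Monitoring

Black-box monitoring

Black-box monitoring tests a service's running state from the user's point of view. Common black-box techniques include HTTP probes, TCP probes, DNS probes, and ICMP. It is typically used to check the availability, connectivity, and response time of sites and services.

White-box monitoring

White-box monitoring usually refers to routine monitoring of server state: resource usage, container status, middleware health, and similar direct metrics. These describe the infrastructure that keeps business applications running.

White-box monitoring shows how a system is actually running internally. By observing and analyzing its metrics, we can anticipate problems a server may run into, fix them in time, and avoid unpredictable losses.

2.2 Differences Between White-Box and Black-Box Monitoring

Black-box and white-box monitoring differ considerably. Black-box monitoring is failure-driven: when a monitored service fails, it raises an alert quickly. White-box monitoring is proactive: it tries to predict failures before they happen.

A complete monitoring system needs both working together. White-box monitoring anticipates latent problems, while black-box monitoring quickly detects problems that have already occurred.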

3. Deploying BlackBox Exporter on Kubernetes

Official repository: https://github.com/prometheus/blackbox_exporter

ConfigMap definition references:

https://github.com/prometheus/blackbox_exporter/blob/master/CONFIGURATION.md

https://github.com/prometheus/blackbox_exporter/blob/master/example.yml

The ConfigMap below is based on the official BlackBox Exporter example.

3.0 Prometheus Scrape Settings for BlackBox

https://github.com/prometheus/prometheus/blob/main/documentation/examples/prometheus-kubernetes.yml

3.1 Create the ConfigMap

vim 1.blackbox-configmap.yaml

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: blackbox-exporter
  namespace: monitor
  labels:
    app: blackbox-exporter
data:
  blackbox.yml: |-
    modules:
      ## ----------- DNS probe -----------
      dns_tcp:
        prober: dns
        timeout: 5s
        dns:
          transport_protocol: "tcp"  # defaults to udp
          preferred_ip_protocol: "ip4"  # defaults to ip6
          query_name: "kubernetes.default.svc.cluster.local"  # name resolved to check the DNS server
          query_type: "A"  # must be set when probing kube-dns
      ## ----------- TCP probe -----------
      tcp_connect:
        prober: tcp
        timeout: 5s
      ## ----------- ICMP probe -----------
      icmp:
        prober: icmp
        timeout: 5s
        icmp:
          preferred_ip_protocol: "ip4"  # defaults to ip6
      ## ----------- HTTP GET 2xx probe -----------
      http_get_2xx:
        prober: http
        timeout: 10s
        http:
          method: GET
          no_follow_redirects: false  # whether NOT to follow redirects; false = follow them
          valid_http_versions: ["HTTP/1.1", "HTTP/2.0"]
          valid_status_codes: [200]  # accepted HTTP status codes; defaults to 2xx
          fail_if_ssl: false
          tls_config:
            insecure_skip_verify: true
          preferred_ip_protocol: "ip4"  # defaults to ip6
      ## ----------- HTTP GET 3xx probe -----------
      http_get_3xx:
        prober: http
        timeout: 10s
        http:
          method: GET
          no_follow_redirects: true  # do not follow redirects, otherwise the final (usually 200) status is checked
          valid_http_versions: ["HTTP/1.1", "HTTP/2.0"]
          valid_status_codes: [300, 301, 302, 303, 307, 308]  # accepted HTTP status codes; defaults to 2xx
          fail_if_ssl: false
          tls_config:
            insecure_skip_verify: true
          preferred_ip_protocol: "ip4"  # defaults to ip6
      ## ----------- HTTP POST probe -----------
      http_post_2xx:
        prober: http
        timeout: 10s
        http:
          method: POST
          no_follow_redirects: false  # whether NOT to follow redirects; false = follow them
          valid_http_versions: ["HTTP/1.1", "HTTP/2.0"]
          valid_status_codes: [200]  # accepted HTTP status codes; defaults to 2xx
          preferred_ip_protocol: "ip4"  # defaults to ip6
          #headers:
          #  Content-Type: "application/json"
          #body: '{}'
```

- Apply

```shell
[root@kube-master blackbox]# kubectl apply -f 1.blackbox-configmap.yaml
```

- Check

```shell
[root@kube-master blackbox]# kubectl get configmaps blackbox-exporter -nmonitor
NAME                DATA   AGE
blackbox-exporter   1      2m2s
```

3.2 Create the Deployment

vim 2.blackbox-exporter.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: blackbox-exporter
  namespace: monitor
  labels:
    k8s-app: blackbox-exporter
spec:
  type: ClusterIP
  ports:
    - name: http
      port: 9115
      targetPort: 9115
  selector:
    k8s-app: blackbox-exporter
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: blackbox-exporter
  namespace: monitor
  labels:
    k8s-app: blackbox-exporter
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: blackbox-exporter
  template:
    metadata:
      labels:
        k8s-app: blackbox-exporter
    spec:
      containers:
        - name: blackbox-exporter
          image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/blackbox-exporter:v0.21.0
          imagePullPolicy: IfNotPresent
          args:
            - --config.file=/etc/blackbox_exporter/blackbox.yml  # config file from the ConfigMap
            - --web.listen-address=:9115
            - --log.level=info  # log level; set to error to reduce output
          ports:
            - name: http
              containerPort: 9115
          resources:
            requests:
              cpu: 100m
              memory: 50Mi
            limits:
              cpu: 200m
              memory: 256Mi
          securityContext:
            runAsUser: 65534
          readinessProbe:
            tcpSocket:
              port: 9115
            initialDelaySeconds: 5
            timeoutSeconds: 5
            periodSeconds: 10
            successThreshold: 1
            failureThreshold: 3
          volumeMounts:
            - name: config-volume
              mountPath: /etc/blackbox_exporter
      volumes:
        - name: config-volume
          configMap:
            name: blackbox-exporter
            defaultMode: 420
```

- Apply

```shell
kubectl apply -f 2.blackbox-exporter.yaml
```

- Check

```shell
[root@kube-master blackbox]# kubectl get svc -n monitor
NAME                TYPE        CLUSTER-IP        EXTERNAL-IP   PORT(S)    AGE
blackbox-exporter   ClusterIP   192.168.200.165   <none>        9115/TCP   80s
```

4. Monitoring

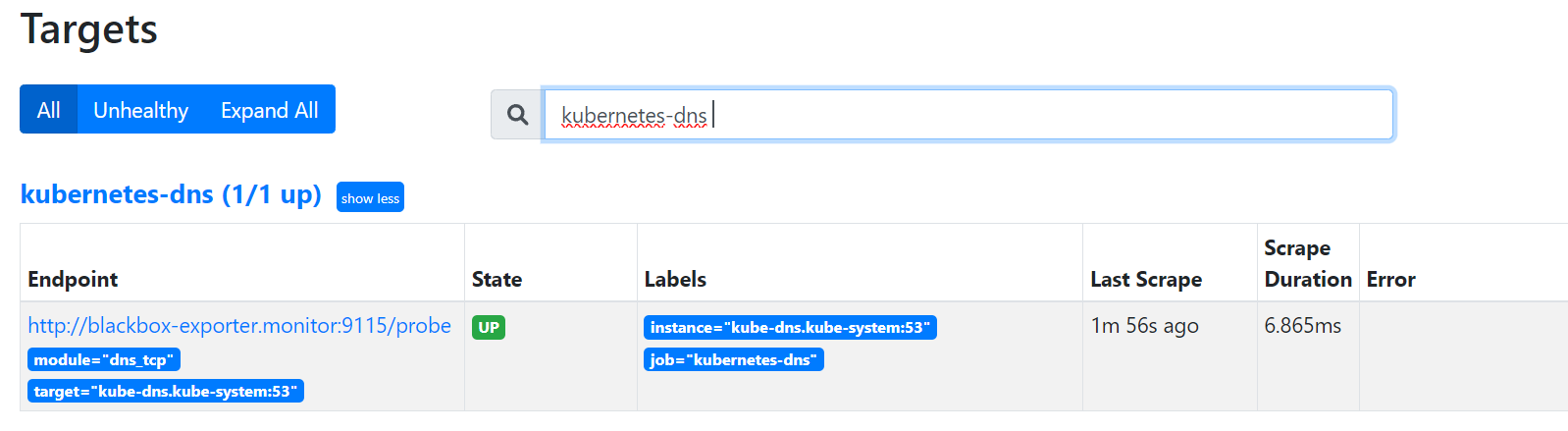

4.1 DNS Monitoring

```yaml
- job_name: "kubernetes-dns"
  metrics_path: /probe  # /probe, not /metrics
  params:
    module: [dns_tcp]  # use the DNS-over-TCP module
  static_configs:
    - targets:
        - kube-dns.kube-system:53  # do not omit the port
  relabel_configs:
    ## Copy the static DNS server address above into the temporary label "__param_target"
    - source_labels: [__address__]
      target_label: __param_target
    ## Use "__param_target" as the instance name
    - source_labels: [__param_target]
      target_label: instance
    ## BlackBox Exporter address; must match the Service defined above
    - target_label: __address__
      replacement: blackbox-exporter.monitor:9115
```

Add the job to the scrape_configs section of the Prometheus configuration.

Hot-reload Prometheus (the /-/reload endpoint only works when Prometheus runs with --web.enable-lifecycle):

```shell
curl -XPOST http://prometheus.ikubernetes.net/-/reload
```

- Result

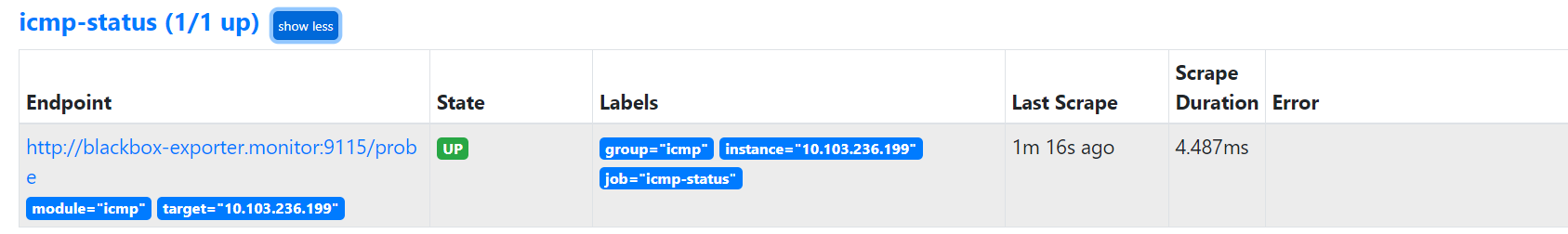
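The three relabel rules above turn a plain target into a blackbox probe. The Python sketch below shows the labels that result and the URL Prometheus ends up requesting from the exporter. It only mimics the effect of these specific rules and is not Prometheus's relabeling engine. The exporter address is taken from the Service in section 3.2.

```python
from urllib.parse import urlencode

# Address of the exporter, matching the Service created in section 3.2.
BLACKBOX_ADDR = "blackbox-exporter.monitor:9115"


def relabel_static_target(address):
    """Apply the effect of the three relabel rules to one static target."""
    labels = {"__address__": address}
    # Rule 1: __address__ -> __param_target (sent to the exporter as ?target=...)
    labels["__param_target"] = labels["__address__"]
    # Rule 2: __param_target -> instance, so series are labelled with the probed host
    labels["instance"] = labels["__param_target"]
    # Rule 3: scrape the exporter instead of the target itself
    labels["__address__"] = BLACKBOX_ADDR
    return labels


def probe_url(labels, module, metrics_path="/probe"):
    """URL Prometheus requests: `params` plus __param_* labels form the query string."""
    query = urlencode({"module": module, "target": labels["__param_target"]})
    return f"http://{labels['__address__']}{metrics_path}?{query}"


if __name__ == "__main__":
    labels = relabel_static_target("kube-dns.kube-system:53")
    print(labels["instance"])  # kube-dns.kube-system:53
    print(probe_url(labels, "dns_tcp"))
```

Opening the printed URL with curl from inside the cluster is a quick way to see the raw probe_* metrics for a single target.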

4.2 ICMP

```yaml
- job_name: icmp-status
  metrics_path: /probe
  params:
    module: [icmp]
  static_configs:
    - targets:
        - 10.103.236.199
      labels:
        group: icmp
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - source_labels: [__param_target]
      target_label: instance
    - target_label: __address__
      replacement: blackbox-exporter.monitor:9115
```

- Hot reload

```shell
curl -XPOST http://prometheus.ikubernetes.net/-/reload
```

- Result

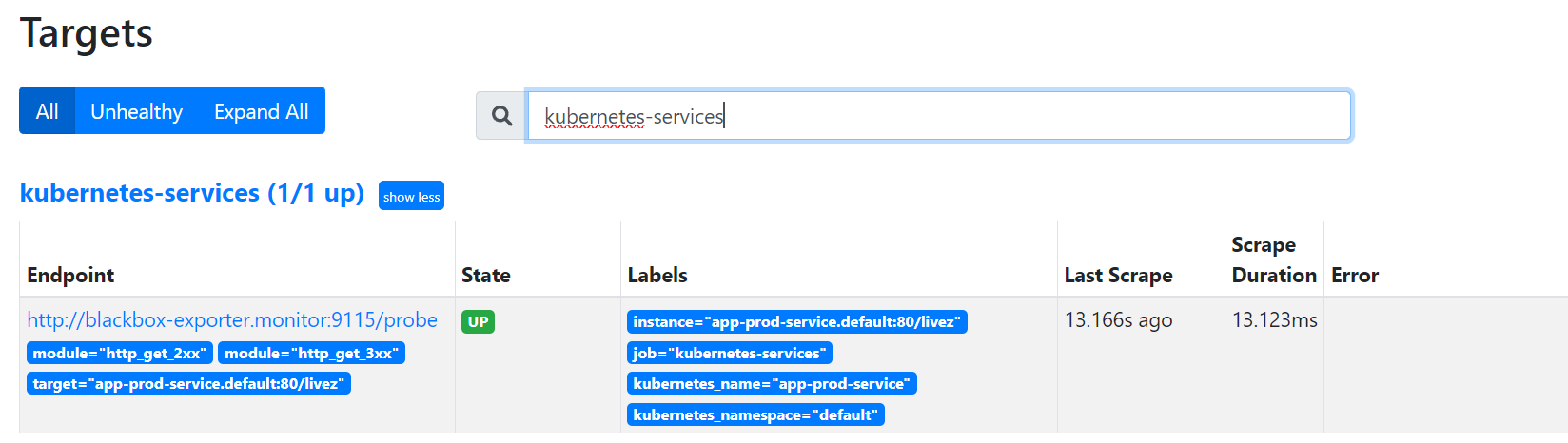
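The ICMP job has one caveat. The blackbox_exporter README states that on Linux the ICMP prober needs root, the CAP_NET_RAW capability, or a group inside the net.ipv4.ping_group_range sysctl. The Deployment above runs as user 65534, so this range is the first thing to check if ICMP probes fail. Below is a small Python sketch of that group-range check. It assumes the container's group ID is 65534, which depends on the image.

```python
def parse_ping_group_range(text):
    """Parse the "low high" pair stored in net.ipv4.ping_group_range."""
    low, high = (int(v) for v in text.split())
    return low, high


def gid_may_ping(gid, range_text):
    """True if `gid` may open unprivileged ICMP sockets under this range."""
    low, high = parse_ping_group_range(range_text)
    # The kernel default "1 0" is an empty range, so no group matches.
    return low <= gid <= high


def read_ping_group_range(path="/proc/sys/net/ipv4/ping_group_range"):
    """Read the range as seen by the current network namespace (Linux only)."""
    try:
        with open(path) as f:
            return f.read()
    except OSError:
        return None


if __name__ == "__main__":
    current = read_ping_group_range()
    if current is None:
        print("ping_group_range not available on this host")
    else:
        # 65534 is assumed to be the group the exporter container runs with.
        print("gid 65534 may ping:", gid_may_ping(65534, current))
```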

4.3 Service

The following job probes the health of Kubernetes Services that carry the annotation prometheus.io/http-probe: "true".
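The job matches the annotation through a meta label. Prometheus replaces every character that is not valid in a label name with an underscore. That is why both prometheus.io/http-probe and prometheus.io/http_probe (used on the Ingress in 4.4) end up as the same `..._prometheus_io_http_probe` label. A minimal Python sketch of that naming rule:

```python
import re


def annotation_meta_label(annotation, role="service"):
    """Name of the meta label Prometheus derives from a Kubernetes annotation."""
    # Characters outside [a-zA-Z0-9_] are replaced with "_".
    name = re.sub(r"[^a-zA-Z0-9_]", "_", annotation)
    return f"__meta_kubernetes_{role}_annotation_{name}"


if __name__ == "__main__":
    for key in ("prometheus.io/http-probe", "prometheus.io/http_probe"):
        print(key, "->", annotation_meta_label(key))
```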

HTTP

```yaml
- job_name: 'kubernetes-services'
  metrics_path: /probe
  params:
    module: [http_get_2xx]  ## blackbox_exporter reads only one module parameter per probe; use a separate job for http_get_3xx
  kubernetes_sd_configs:  ## Kubernetes service discovery with the Service role
    - role: service
  relabel_configs:
    ## Keep only Services whose annotations include prometheus.io/http-probe: "true"
    - action: keep
      source_labels: [__meta_kubernetes_service_annotation_prometheus_io_http_probe]
      regex: "true"
    ## Build the target <service>.<namespace>:<port><path>; all four values must be present
    - action: replace
      source_labels:
        - "__meta_kubernetes_service_name"
        - "__meta_kubernetes_namespace"
        - "__meta_kubernetes_service_annotation_prometheus_io_http_probe_port"
        - "__meta_kubernetes_service_annotation_prometheus_io_http_probe_path"
      target_label: __param_target
      regex: (.+);(.+);(.+);(.+)
      replacement: $1.$2:$3$4
    - target_label: __address__
      replacement: blackbox-exporter.monitor:9115  ## Service address of BlackBox Exporter
    - source_labels: [__param_target]
      target_label: instance
    - action: labelmap
      regex: __meta_kubernetes_service_label_(.+)
    - source_labels: [__meta_kubernetes_namespace]
      target_label: kubernetes_namespace
    - source_labels: [__meta_kubernetes_service_name]
      target_label: kubernetes_name
```

- Apply

```shell
[root@kube-master test]# kubectl apply -f 2.prometheus-config.yaml
configmap/prometheus-config configured
```

- Hot reload

```shell
curl -XPOST http://prometheus.ikubernetes.net/-/reload
```

Example:

Add the following annotations to the Service so that it is probed:

```yaml
prometheus.io/scrape: "true"
prometheus.io/http-probe: "true"
prometheus.io/http-probe-port: "80"  # port of the service to probe; set it for your service
prometheus.io/http-probe-path: "/index.html"  # path to probe, e.g. /index.html; include the context path if the app is not served at /
```

The kubernetes-services job keeps a Service only when prometheus.io/http-probe is "true". It builds the target only when both the port and the path annotations are present. prometheus.io/scrape is checked by the TCP job below, not by this one.

- Create the resources

[root@kube-master example_service]# cat 1.app-deploy-service.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app-prod
spec:
  replicas: 1
  selector:
    matchLabels:
      app: app-prod
      role: app-prod-role
  template:
    metadata:
      labels:
        app: app-prod
        role: app-prod-role
    spec:
      containers:
        - name: app
          image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1
          ports:
            - containerPort: 80
---
# Service
apiVersion: v1
kind: Service
metadata:
  name: app-prod-service
  annotations:
    prometheus.io/http-probe: "true"
    prometheus.io/http-probe-port: "80"
    prometheus.io/http-probe-path: "/livez"
spec:
  type: ClusterIP
  selector:
    app: app-prod
    role: app-prod-role
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 80
```

- Apply

```shell
kubectl apply -f 1.app-deploy-service.yaml
```

- Hot reload (not required here: kubernetes_sd_configs discovers the new Service automatically)

- Result

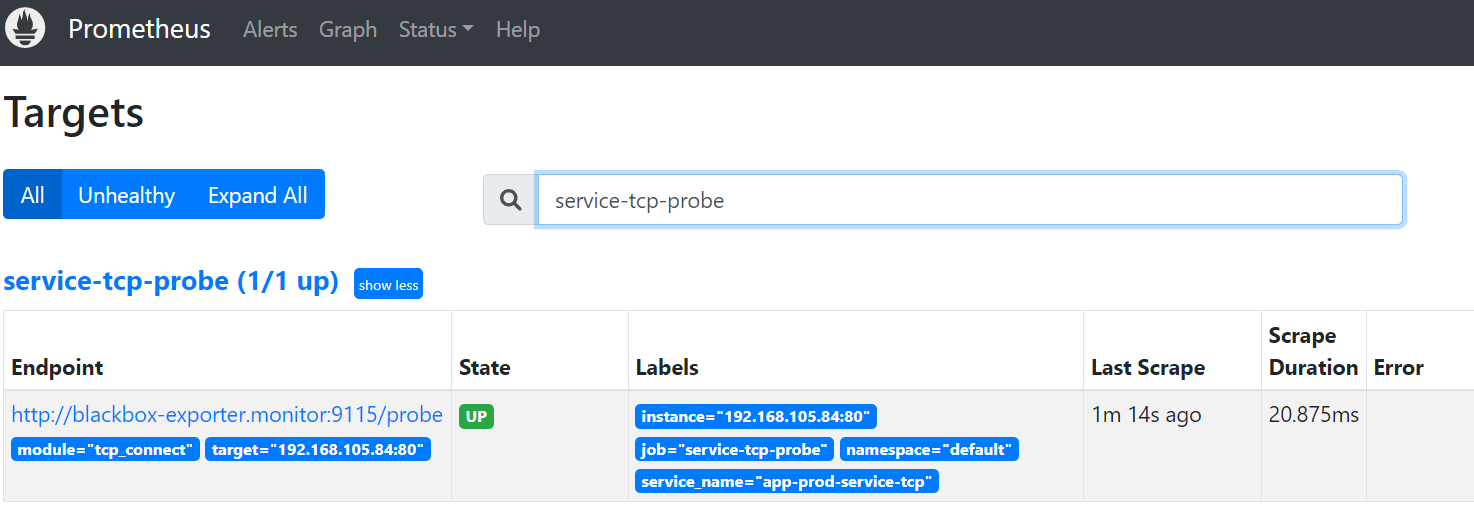
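The Python sketch below shows what the keep and replace rules of the kubernetes-services job produce for the example Service. It mimics only those two rules: source values are joined with `;`, the regex is anchored at both ends, and `$n` references are expanded. It assumes the Service lives in the `default` namespace, and the helper names are hypothetical. It also shows that a Service missing the port or path annotation gets no `__param_target`, so its scrape has no target parameter and fails.

```python
import re

PREFIX = "__meta_kubernetes_service_annotation_prometheus_io_"


def relabel_replace(labels, source_labels, regex, replacement, separator=";"):
    """Mimic a Prometheus `replace` rule; None means the target label stays unset."""
    value = separator.join(labels.get(name, "") for name in source_labels)
    match = re.fullmatch(regex, value)  # Prometheus anchors the regex at both ends
    if match is None:
        return None
    # Translate "$1" / "${1}" into Python's "\g<1>" before expanding.
    return match.expand(re.sub(r"\$\{?(\d+)\}?", r"\\g<\1>", replacement))


def service_probe_target(meta):
    """Keep + replace steps of the kubernetes-services job for one Service."""
    # action: keep -> drop Services whose http-probe annotation is not "true"
    if re.fullmatch("true", meta.get(PREFIX + "http_probe", "")) is None:
        return None
    return relabel_replace(
        meta,
        [
            "__meta_kubernetes_service_name",
            "__meta_kubernetes_namespace",
            PREFIX + "http_probe_port",
            PREFIX + "http_probe_path",
        ],
        r"(.+);(.+);(.+);(.+)",
        "$1.$2:$3$4",
    )


if __name__ == "__main__":
    # Meta labels for app-prod-service, assuming it is in namespace "default".
    example = {
        "__meta_kubernetes_service_name": "app-prod-service",
        "__meta_kubernetes_namespace": "default",
        PREFIX + "http_probe": "true",
        PREFIX + "http_probe_port": "80",
        PREFIX + "http_probe_path": "/livez",
    }
    print(service_probe_target(example))  # app-prod-service.default:80/livez
```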

TCP

- Add the job

```yaml
- job_name: "service-tcp-probe"
  scrape_interval: 1m
  metrics_path: /probe
  # Use the tcp_connect module from the blackbox-exporter configuration
  params:
    module: [tcp_connect]
  kubernetes_sd_configs:
    - role: service
  relabel_configs:
    # Keep Services annotated with prometheus.io/scrape: "true" and prometheus.io/tcp-probe: "true"
    - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape, __meta_kubernetes_service_annotation_prometheus_io_tcp_probe]
      action: keep
      regex: true;true
    # Copy __meta_kubernetes_service_name into service_name
    - source_labels: [__meta_kubernetes_service_name]
      action: replace
      regex: (.*)
      target_label: service_name
    # Copy __meta_kubernetes_namespace into namespace
    - source_labels: [__meta_kubernetes_namespace]
      action: replace
      regex: (.*)
      target_label: namespace
    # Set the probe target to `clusterIP:port` (the port comes from the http-probe-port annotation)
    - source_labels: [__meta_kubernetes_service_cluster_ip, __meta_kubernetes_service_annotation_prometheus_io_http_probe_port]
      action: replace
      regex: (.*);(.*)
      target_label: __param_target
      replacement: $1:$2
    - source_labels: [__param_target]
      target_label: instance
    # Point __address__ at `blackbox-exporter.monitor:9115`
    - target_label: __address__
      replacement: blackbox-exporter.monitor:9115
```

- Hot reload

```shell
curl -XPOST http://prometheus.ikubernetes.net/-/reload
```

- Create the resources

3.app-deploy-service-tcp.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app-prod-tcp
spec:
  replicas: 1
  selector:
    matchLabels:
      app: app-prod-tcp
  template:
    metadata:
      labels:
        app: app-prod-tcp
    spec:
      containers:
        - name: app
          image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1
          ports:
            - containerPort: 80
---
# Service
apiVersion: v1
kind: Service
metadata:
  name: app-prod-service-tcp
  annotations:
    prometheus.io/scrape: "true"  # Prometheus may collect from this Service
    prometheus.io/tcp-probe: "true"  # enable the TCP probe
    prometheus.io/http-probe-port: "80"  ## port the TCP probe connects to (the TCP job reads this annotation)
spec:
  type: ClusterIP
  selector:
    app: app-prod-tcp
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 80
```

- Run

```shell
kubectl apply -f 3.app-deploy-service-tcp.yaml
```

- Result

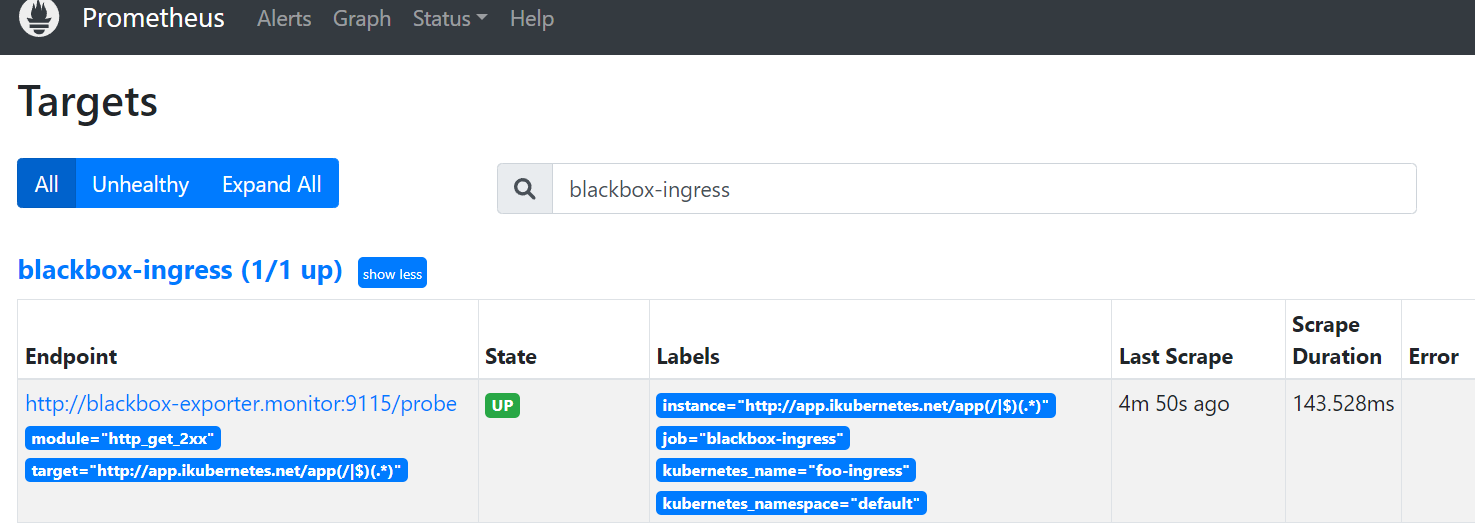
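The tcp_connect module essentially checks that a TCP connection can be opened within the module timeout. The Python sketch below is a rough equivalent for testing a ClusterIP:port by hand from a pod inside the cluster. It is not how blackbox_exporter is implemented, and the function name is hypothetical.

```python
import socket


def tcp_connect_probe(host, port, timeout=5.0):
    """True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, DNS failure, or timeout
        return False


if __name__ == "__main__":
    # Self-check against a throwaway local listener.
    server = socket.socket()
    server.bind(("127.0.0.1", 0))
    server.listen()
    port = server.getsockname()[1]
    print(tcp_connect_probe("127.0.0.1", port, timeout=2))  # True
    server.close()
```

From inside the cluster, `tcp_connect_probe("<ClusterIP>", 80)` should agree with the probe_success value the service-tcp-probe job reports for app-prod-service-tcp.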

4.4 Ingress

Add the following annotations to the Ingress:

```yaml
annotations:
  prometheus.io/http_probe: "true"
  prometheus.io/http-probe-port: '80'  # adjust as needed
  prometheus.io/http-probe-path: '/livez'  # adjust as needed
```

Only prometheus.io/http_probe is checked by the job below. The probe URL is built from the Ingress's own scheme, host, and path, so the job does not use the port and path annotations.

- Create the scrape job

```yaml
- job_name: 'blackbox-ingress'
  scrape_interval: 30s
  scrape_timeout: 10s
  metrics_path: /probe
  params:
    module: [http_get_2xx]  # use the http module defined above
  kubernetes_sd_configs:
    - role: ingress  # Ingress service discovery
  relabel_configs:
    # Discover only Ingresses annotated with prometheus.io/http_probe=true
    - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_http_probe]
      action: keep
      regex: true
    - source_labels: [__meta_kubernetes_ingress_scheme, __address__, __meta_kubernetes_ingress_path]
      regex: (.+);(.+);(.+)
      replacement: ${1}://${2}${3}
      target_label: __param_target
    - target_label: __address__
      replacement: blackbox-exporter.monitor:9115
    - source_labels: [__param_target]
      target_label: instance
    - action: labelmap
      regex: __meta_kubernetes_ingress_label_(.+)
    - source_labels: [__meta_kubernetes_namespace]
      target_label: kubernetes_namespace
    - source_labels: [__meta_kubernetes_ingress_name]
      target_label: kubernetes_name
```

- Hot reload

```shell
curl -XPOST http://prometheus.ikubernetes.net/-/reload
```

- Result

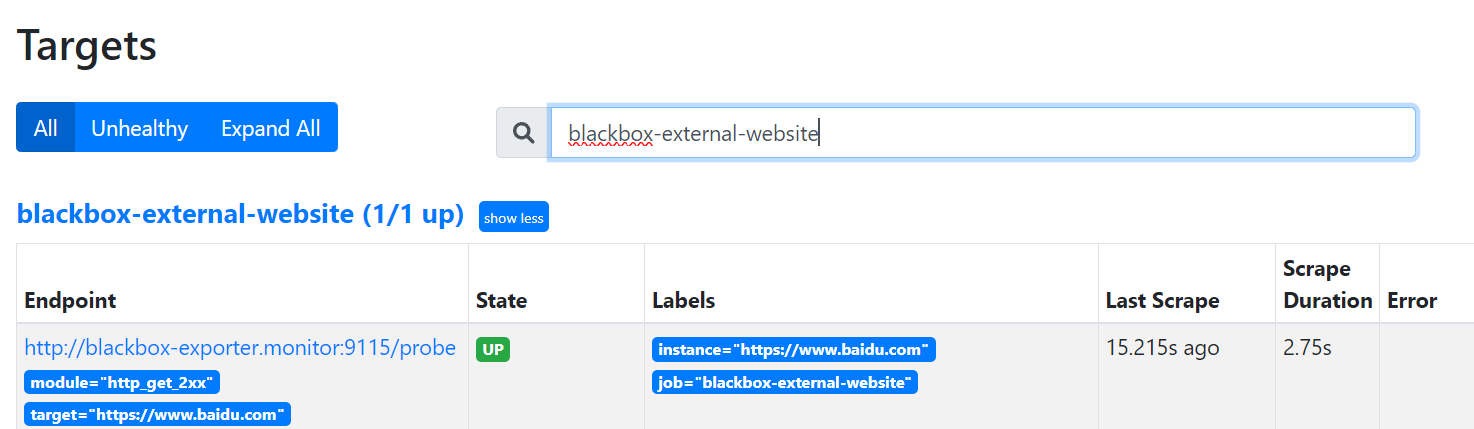
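For each Ingress host and path, the replace rule above joins scheme, host, and path with `;` and rewrites them as `${1}://${2}${3}`. The Python sketch below reproduces just that rule. The host and path in the example are hypothetical.

```python
import re


def expand(template, match):
    """Expand Prometheus-style $1 / ${1} references with a Python match object."""
    return re.sub(r"\$\{?(\d+)\}?", lambda m: match.group(int(m.group(1))), template)


def ingress_probe_target(scheme, host, path):
    """Rebuild __param_target the way the blackbox-ingress replace rule does."""
    value = ";".join([scheme, host, path])  # source_labels joined with ";"
    match = re.fullmatch(r"(.+);(.+);(.+)", value)
    if match is None:
        return None  # an empty part means the rule does not match; no target is set
    return expand("${1}://${2}${3}", match)


if __name__ == "__main__":
    # Hypothetical Ingress rule: host app.example.com, path /livez, served over https.
    print(ingress_probe_target("https", "app.example.com", "/livez"))
```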

4.5 Monitoring Domain Names

GET mode
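Conceptually, the http_get_2xx module sends a request and follows redirects (no_follow_redirects: false). The probe succeeds only if the final status code is in valid_status_codes. The Python sketch below approximates that check with the standard library. It ignores TLS settings, HTTP-version checks, and the timing metrics blackbox_exporter also reports, and the function name is hypothetical.

```python
import urllib.error
import urllib.request

# Ignore *_proxy environment variables so requests go straight to the target.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def http_probe(url, valid_status_codes=(200,), method="GET", data=None,
               headers=None, timeout=10.0):
    """Send one request (redirects are followed) and check the final status code."""
    request = urllib.request.Request(url, data=data, headers=headers or {}, method=method)
    try:
        with _OPENER.open(request, timeout=timeout) as response:
            status = response.status
    except urllib.error.HTTPError as err:  # 4xx/5xx are raised as exceptions
        status = err.code
    except OSError:  # URLError, refused connection, DNS failure, timeout
        return False
    return status in valid_status_codes
```

The same function also covers the POST mode below, for example `http_probe("https://www.example.com/api", method="POST", data=b"{}", headers={"Content-Type": "application/json"})`. This mirrors the commented-out headers and body in the http_post_2xx module.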

- Create the scrape job

```yaml
- job_name: "blackbox-external-website"
  scrape_interval: 30s
  scrape_timeout: 15s
  metrics_path: /probe
  params:
    module: [http_get_2xx]
  static_configs:
    - targets:
        - https://www.baidu.com  # replace with your company's public domains
        - https://www.jd.com
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - source_labels: [__param_target]
      target_label: instance
    - target_label: __address__
      replacement: blackbox-exporter.monitor:9115
```

- Hot reload

```shell
curl -XPOST http://prometheus.ikubernetes.net/-/reload
```

- Result

POST mode

- job_name: 'blackbox-http-post'

metrics_path: /probe

params:

module: [http_post_2xx]

static_configs:

- targets:

- https://www.example.com/api # 要检查的网址

relabel_configs:

- source_labels: [__address__]

target_label: __param_target

- source_labels: [__param_target]

target_label: instance

- target_label: __address__

replacement: blackbox-exporter.monitor:9115- job_name: 'blackbox-http-post'

metrics_path: /probe

params:

module: [http_post_2xx]

static_configs:

- targets:

- https://www.example.com/api # 要检查的网址

relabel_configs:

- source_labels: [__address__]

target_label: __param_target

- source_labels: [__param_target]

target_label: instance

- target_label: __address__

replacement: blackbox-exporter.monitor:9115- 热加载

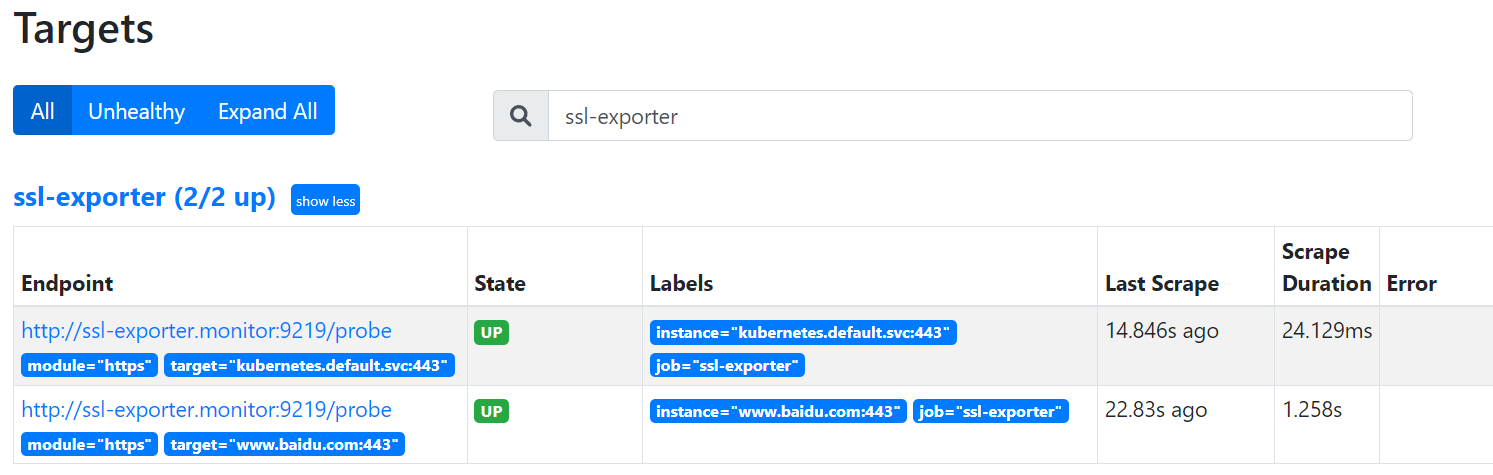

curl -XPOST http://prometheus.ikubernetes.net/-/reloadcurl -XPOST http://prometheus.ikubernetes.net/-/reload4.6 监控ssl证书

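The certificate expiry time reported by blackbox_exporter is sometimes inaccurate, so this section uses the separate ssl-exporter instead.

- Create the scrape job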

```yaml
- job_name: ssl-exporter
  scrape_interval: 2m
  metrics_path: /probe
  params:
    module: ["https"]  # an ssl_exporter module, not a blackbox_exporter module
  static_configs:
    - targets:
        - 'kubernetes.default.svc:443'
        - 'www.baidu.com:443'
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - source_labels: [__param_target]
      target_label: instance
    - target_label: __address__
      replacement: ssl-exporter.monitor:9219
```

- Deploy ssl-exporter

1.ssl-exporter-dp.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    name: ssl-exporter
  name: ssl-exporter
  namespace: monitor
spec:
  ports:
    - name: ssl-exporter
      protocol: TCP
      port: 9219
      targetPort: 9219
  selector:
    app: ssl-exporter
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ssl-exporter
  namespace: monitor
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ssl-exporter
  template:
    metadata:
      name: ssl-exporter
      labels:
        app: ssl-exporter
    spec:
      initContainers:
        # Install kube ca cert as a root CA
        - name: ca
          image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/alpine:v3.7
          command:
            - sh
            - -c
            - |
              set -e
              apk add --update ca-certificates
              cp /var/run/secrets/kubernetes.io/serviceaccount/ca.crt /usr/local/share/ca-certificates/kube-ca.crt
              update-ca-certificates
              cp /etc/ssl/certs/* /ssl-certs
          volumeMounts:
            - name: ssl-certs
              mountPath: /ssl-certs
      containers:
        - name: ssl-exporter
          image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/ssl-exporter:v2.4.3
          ports:
            - name: tcp
              containerPort: 9219
              protocol: TCP
          resources:
            requests:
              cpu: 100m
              memory: 50Mi
            limits:
              cpu: 200m
              memory: 256Mi
          securityContext:
            runAsUser: 1000
            readOnlyRootFilesystem: true
            runAsNonRoot: true
          readinessProbe:
            tcpSocket:
              port: 9219
            initialDelaySeconds: 5
            timeoutSeconds: 5
            periodSeconds: 10
            successThreshold: 1
            failureThreshold: 3
          volumeMounts:
            - name: ssl-certs
              mountPath: /etc/ssl/certs
      volumes:
        - name: ssl-certs
          emptyDir: {}
```

- Apply

```shell
kubectl apply -f 1.ssl-exporter-dp.yaml
```

- Hot reload

```shell
curl -XPOST http://prometheus.ikubernetes.net/-/reload
```

- Result

Alert expression for certificates that expire in less than 30 days: `(ssl_cert_not_after - time()) / 3600 / 24 < 30`
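ssl_cert_not_after is a Unix timestamp in seconds, so the expression divides the remaining seconds by 3600 and then 24 to get days. The Python sketch below does the same arithmetic. It uses `ssl.cert_time_to_seconds` from the standard library to turn a certificate notAfter string into that kind of timestamp. The function names and the sample date are for illustration only.

```python
import ssl
import time


def days_until_expiry(not_after, now=None):
    """Same arithmetic as (ssl_cert_not_after - time()) / 3600 / 24."""
    if now is None:
        now = time.time()
    return (not_after - now) / 3600 / 24


def expires_within(not_after, days=30, now=None):
    """True when the alert expression `... < days` would match."""
    return days_until_expiry(not_after, now) < days


if __name__ == "__main__":
    # notAfter string in the format used by X.509 certificates.
    not_after = ssl.cert_time_to_seconds("Jan  5 09:34:43 2018 GMT")
    print(not_after)  # 1515144883
    print(expires_within(not_after))  # True: that date is already in the past
```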

For dashboards, you can import Grafana dashboard 9965.