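PV stands for PersistentVolume. It is an abstraction over the underlying shared storage. PVs are created and configured by the administrator, and each PV is tied to a specific shared-storage implementation such as Ceph, GlusterFS or NFS. Kubernetes connects to each storage backend through a plugin mechanism.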
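As an illustration, here is a minimal sketch of a PV that an administrator might create by hand for an NFS export. The name `nfs-pv-demo`, the 1Gi size and the export path are hypothetical. The server address is the NFS server used later in this article.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv-demo # hypothetical name
spec:
  capacity:
    storage: 1Gi # hypothetical size
  accessModes:
    - ReadWriteMany # NFS can be mounted read-write by many nodes at once
  persistentVolumeReclaimPolicy: Retain # keep the data after the claim is released
  nfs: # in-tree NFS volume plugin
    server: 10.103.236.199 # NFS server used later in this article
    path: /data/nfs/pv-demo # hypothetical exported directory
```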

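PVC stands for PersistentVolumeClaim. A PVC is a user's request for storage. PVCs are similar to Pods: a Pod consumes node resources, while a PVC consumes PV resources. A Pod can request CPU and memory, and a PVC can request a specific amount of storage and specific access modes. Users who consume storage do not need to know how the underlying storage is implemented. They only need to use the PVC.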
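A claim that consumes a pre-created PV could look like the sketch below. The names `nfs-pvc-demo` and `nfs-pv-demo` are hypothetical. Setting `storageClassName: ""` stops the claim from being dynamically provisioned, and `volumeName` requests one specific PV.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc-demo # hypothetical name
spec:
  storageClassName: "" # empty string: bind only to an existing PV, no dynamic provisioning
  volumeName: nfs-pv-demo # hypothetical pre-created PV to bind to
  accessModes:
    - ReadWriteMany # the PV must offer this access mode
  resources:
    requests:
      storage: 1Gi # must not exceed the PV's capacity
```

The claim binds only if the PV offers the requested access mode and at least the requested capacity.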

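Requesting a given amount of space through a PVC often does not cover every storage requirement, though. Different applications can also need different storage performance, such as read/write speed or concurrency. To address this, Kubernetes introduces another resource object: StorageClass. With StorageClasses, administrators can group storage into classes such as fast storage and slow storage. A StorageClass's description shows users the characteristics of each kind of storage at a glance, so they can request the storage that fits their application.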

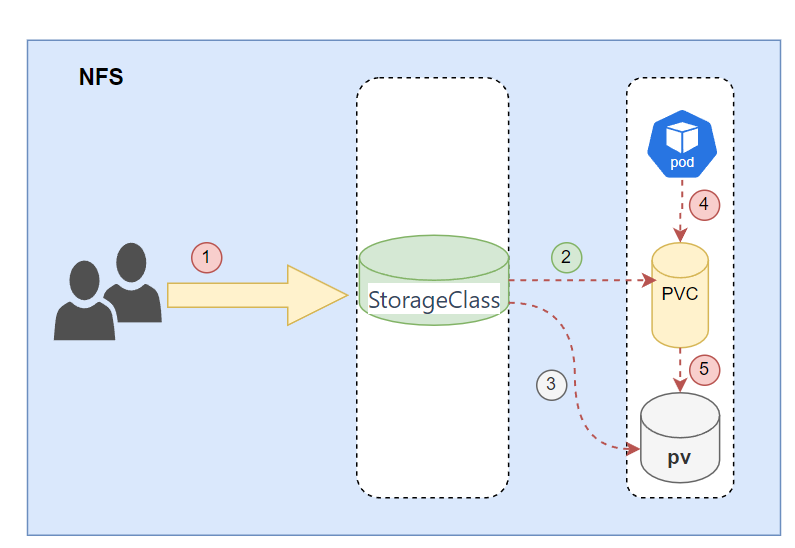

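StorageClass provisioning workflow

(1) The cluster administrator creates a StorageClass in advance.

(2) The user creates a PersistentVolumeClaim (PVC) that uses the StorageClass.

(3) The PVC tells the system that it needs a PersistentVolume (PV).

(4) The system reads the StorageClass information.

(5) Using that information, the system creates the PV the PVC needs automatically in the background.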
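The five steps map onto two objects. The sketch below illustrates that mapping and is not one of this article's files. The class name `demo-sc` and the claim name `demo-claim` are hypothetical. The provisioner name is the one used by nfs-subdir-external-provisioner in section 3.

```yaml
# Step (1): the administrator creates the StorageClass in advance
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: demo-sc # hypothetical name
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # component that will create the PVs
---
# Step (2): the user creates a PVC that names the StorageClass
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-claim # hypothetical name
spec:
  storageClassName: demo-sc # steps (3) to (5): the provisioner reads demo-sc and creates a matching PV
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
```

The PVC stays `Pending` until a running provisioner with that name picks it up.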

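1. StorageClass policies

Documentation: https://kubernetes.io/docs/concepts/storage/storage-classes/

- provisioner: specifies the volume plugin that provisions the PVs. Set it to the value that matches your storage provider.

- reclaimPolicy: either Delete or Retain. The default is Delete.

- When a user deletes a PVC and releases its PV, the system uses the PV's reclaim policy to decide what happens to the PV. There are currently three policies: Retain, Recycle and Delete.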
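As a sketch, a StorageClass whose dynamically provisioned PVs should keep their data can set `reclaimPolicy: Retain`. The class name `nfs-retain-sc` is hypothetical, and the provisioner name is the one used in section 3. For a PV that already exists, the same policy is set in its `spec.persistentVolumeReclaimPolicy` field.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-retain-sc # hypothetical name
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # must match the provisioner's PROVISIONER_NAME
# PVs created through this class inherit Retain; a StorageClass accepts only Delete (default) or Retain
reclaimPolicy: Retain
```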

Retain

Keeps the PV released by the PVC and the data on it, and sets the PV status to Released. No other PVC can bind the PV. The cluster administrator reclaims the storage manually as follows:

- Delete the PV manually. The associated backend storage asset (such as an AWS EBS, GCE PD, Azure Disk or Cinder volume) still exists after the PV is deleted.

- Manually clean up the data on the backend storage volume.

- Manually delete the backend storage volume, or reuse it by creating a new PV for it.

Delete

Deletes the PV released by the PVC together with its backend storage volume. A dynamically provisioned PV inherits its reclaim policy from its StorageClass, which defaults to Delete. The cluster administrator is responsible for setting the StorageClass's reclaim policy to what users expect. Otherwise, users have to edit the reclaim policy of each dynamically created PV by hand.

Recycle (deprecated)

If the underlying volume plugin supports it, performs a basic scrub of the volume (`rm -rf /thevolume/*`) and makes it available again for a new claim. Only NFS and HostPath volumes support it. This policy is deprecated, and dynamic provisioning is the recommended replacement.

2. Create the YAML files

This plugin was only maintained until 2020.

`cat 7.storageclass.yaml`

```yaml
# ServiceAccount
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default # set the namespace for your environment; the same applies below
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
---
# StorageClass
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: alma-nfs-sc
provisioner: alma-nfs # must match the PROVISIONER_NAME env var of the provisioner Deployment
parameters:
  archiveOnDelete: "false"
---
# Provisioner
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default # keep consistent with the namespace used in the RBAC objects
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nfs-client-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/nfs-client-provisioner:3.1.0
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: alma-nfs # provisioner name; must match the provisioner field of the StorageClass
            - name: NFS_SERVER
              value: 10.103.236.199 # NFS server IP address
            - name: NFS_PATH
              value: /data/nfs/pv01 # exported NFS directory
      volumes:
        - name: nfs-client-root
          nfs:
            server: 10.103.236.199 # NFS server IP address
            path: /data/nfs/pv01 # exported NFS directory
```


2.1 Apply

```shell
[root@kube-master nfs]# kubectl apply -f 7.storageclass.yaml
serviceaccount/nfs-client-provisioner created
clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
storageclass.storage.k8s.io/alma-nfs-sc created
deployment.apps/nfs-client-provisioner created
```


- Check

```shell
[root@kube-master nfs]# kubectl get sc
NAME          PROVISIONER   RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
alma-nfs-sc   alma-nfs      Delete          Immediate           false                  4m4s
```


2.2 Test the StorageClass

- Create

`[root@kube-master nfs]# cat 8.test-storageclass-pvc.yaml`

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim
  annotations:
    # must match metadata.name of the StorageClass; this beta annotation is deprecated in favor of spec.storageClassName
    volume.beta.kubernetes.io/storage-class: "alma-nfs-sc"
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
```
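The `volume.beta.kubernetes.io/storage-class` annotation is deprecated, so the same claim can use the `spec.storageClassName` field instead. This is a sketch of an equivalent manifest, not the file the author applied:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim
spec:
  storageClassName: alma-nfs-sc # replaces the deprecated beta annotation
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
```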


- Apply

```shell
kubectl apply -f 8.test-storageclass-pvc.yaml
```

- Check

```shell
kubectl get pvc
NAME         STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
test-claim   Pending                                      alma-nfs-sc    16m

# View the provisioner Pod logs
[root@kube-master nfs]# kubetail nfs-client-provisioner-68759f5ccd-kbhjj
Will tail 1 logs...
nfs-client-provisioner-68759f5ccd-kbhjj
[nfs-client-provisioner-68759f5ccd-kbhjj] I0604 07:56:10.862168 1 controller.go:987] provision "default/test-claim" class "alma-nfs-sc": started
[nfs-client-provisioner-68759f5ccd-kbhjj] E0604 07:56:10.874303 1 controller.go:1004] provision "default/test-claim" class "alma-nfs-sc": unexpected error getting claim reference: selfLink was empty, can't make reference
```


💡 Note

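Solution:

Starting with Kubernetes 1.20, selfLink is disabled (the `RemoveSelfLink` feature gate is enabled by default). This old provisioner depends on selfLink to build the claim reference. To re-enable it, edit the kube-apiserver manifest:

```shell
vim /etc/kubernetes/manifests/kube-apiserver.yaml
```

```yaml
# Under here:
spec:
  containers:
  - command:
    - kube-apiserver
    # Add this line:
    - --feature-gates=RemoveSelfLink=false
```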


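Save the file and wait a few seconds. kube-apiserver runs as a static Pod, so the kubelet restarts it automatically. This workaround only applies to Kubernetes 1.20 through 1.23. From 1.24 on, `RemoveSelfLink` is locked to `true` and cannot be turned off, so newer clusters should use the nfs-subdir-external-provisioner described in section 3.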

```shell
# Make alma-nfs-sc the default StorageClass
kubectl patch storageclass alma-nfs-sc -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

# Check the PVC again
[root@kube-master nfs]# kubectl get pvc
NAME         STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
test-claim   Bound    pvc-a3e2e1de-9b3f-4b80-9d25-f44612aa292b   1Mi        RWX            alma-nfs-sc    3s
```


2.3 Create a Pod

`[root@kube-master nfs]# cat 9.nginx-storageclass-pod.yaml`

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-deploy-sc
  name: nginx-deploy-sc
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-deploy-sc
  template:
    metadata:
      labels:
        app: nginx-deploy-sc
    spec:
      containers:
        - image: nginx:latest
          name: nginx
          volumeMounts:
            - name: html
              mountPath: /usr/share/nginx/html
      volumes:
        - name: html
          persistentVolumeClaim:
            claimName: nginx-sc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-sc
  labels:
    app: nginx-sc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: alma-nfs-sc # the StorageClass created in this environment
  resources:
    requests:
      storage: 1Gi
```


- Check the Pods

```shell
[root@kube-master nfs]# kubectl get pod -owide
NAME                               READY   STATUS    RESTARTS   AGE   IP              NODE          NOMINATED NODE   READINESS GATES
nginx-deploy-sc-865d997b5b-7cj2f   1/1     Running   0          43s   172.17.74.108   kube-node03   <none>           <none>
nginx-deploy-sc-865d997b5b-nshpl   1/1     Running   0          43s   172.30.0.143    kube-node01   <none>           <none>
```


- Verify the result

```shell
[root@kube-master nfs]# curl 172.17.74.108
<html>
<head><title>403 Forbidden</title></head>
<body>
<center><h1>403 Forbidden</h1></center>
<hr><center>nginx/1.27.0</center>
</body>
</html>

# The 403 is returned because the NFS-backed directory is still empty: there is no index.html yet
# On the NFS server, the provisioner has created a directory for this PVC under pv01; put the content there
[root@Rocky pv01]# pwd
/data/nfs/pv01
[root@Rocky pv01]# ls
default-nginx-sc-pvc-08863344-1951-4235-86c9-241ebf0bb634
[root@Rocky pv01]# echo "hello nginx svc" > default-nginx-sc-pvc-08863344-1951-4235-86c9-241ebf0bb634/index.html

# Access nginx again
[root@kube-master nfs]# curl 172.17.74.108
hello nginx svc
```


3. Using nfs-subdir-external-provisioner (recommended)

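Kubernetes does not ship a built-in NFS provisioner, so an external one is required. nfs-subdir-external-provisioner is an automatic provisioner that uses an existing NFS server to support dynamic provisioning.

An nfs-subdir-external-provisioner instance watches for PersistentVolumeClaims that request its StorageClass and automatically creates NFS-backed PersistentVolumes for them.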
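The sketch below shows the kind of PV the provisioner creates for a claim. It is reconstructed from the `kubectl describe pv` output in section 3.4 rather than exported from the cluster, so fields filled in by the server, such as `claimRef`, are omitted.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pvc-8ed67f7d-d829-4d87-8c66-d8a85f50772f # generated from the claim's UID
  annotations:
    pv.kubernetes.io/provisioned-by: k8s-sigs.io/nfs-subdir-external-provisioner
spec:
  storageClassName: nfs-provisioner-storage
  capacity:
    storage: 512Mi # taken from the PVC's request
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Delete # inherited from the StorageClass
  nfs:
    server: 10.103.236.199
    path: /data/nfs/pv01/default/nfs-pvc-test # NFS_PATH plus the directory built from pathPattern
```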

GitHub: https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner

The project is still being updated.

3.1 Install the NFS provisioner

Create the RBAC permissions

`[root@kube-master nfs]# cat 1.nfs-rbac.yaml`

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
```


- Apply

```shell
[root@kube-master nfs]# kubectl apply -f 1.nfs-rbac.yaml
serviceaccount/nfs-client-provisioner created
clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
```


3.2 Deploy the NFS provisioner

`[root@kube-master nfs]# cat 2.nfs-provisioner-deploy.yaml`

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          # upstream image (k8s.gcr.io has been replaced by registry.k8s.io):
          #image: registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
          image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner # provisioner name; the StorageClass's provisioner field must match it
            - name: NFS_SERVER # NFS server address
              value: 10.103.236.199
            - name: NFS_PATH # exported directory; the describe output below was produced with /data/nfs/pv01
              value: /data/nfs/pv01
      volumes:
        - name: nfs-client-root
          nfs:
            server: 10.103.236.199
            path: /data/nfs/pv01 # must be the same directory as NFS_PATH
```


- Apply

```shell
[root@kube-master nfs]# kubectl apply -f 2.nfs-provisioner-deploy.yaml
deployment.apps/nfs-client-provisioner created

# The Pod is running normally
[root@kube-master nfs]# kubectl get pods
NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-7f5bc48c68-8z5z6   1/1     Running   0          3m37s

# Use describe to view the Pod details
[root@kube-master nfs-subdir-external-provisioner]# kubectl describe pod nfs-client-provisioner-7f5bc48c68-8z5z6
Name:             nfs-client-provisioner-7f5bc48c68-8z5z6
Namespace:        default
Priority:         0
Node:             kube-node01/10.103.236.202
Start Time:       Tue, 04 Jun 2024 21:04:55 +0800
Labels:           app=nfs-client-provisioner
                  pod-template-hash=7f5bc48c68
Annotations:      cni.projectcalico.org/podIP: 172.30.0.158/32
                  cni.projectcalico.org/podIPs: 172.30.0.158/32
Status:           Running
IP:               172.30.0.158
IPs:
  IP:           172.30.0.158
Controlled By:  ReplicaSet/nfs-client-provisioner-7f5bc48c68
Containers:
  nfs-client-provisioner:
    Container ID:   docker://aca9232b37f76ba5589002669046d3339d2cbb9d0220ec17eb3ac678f70ed0e0
    Image:          registry.cn-zhangjiakou.aliyuncs.com/hsuing/nfs-subdir-external-provisioner:v4.0.2
    Image ID:       docker-pullable://lihuahaitang/nfs-subdir-external-provisioner@sha256:f741e403b3ca161e784163de3ebde9190905fdbf7dfaa463620ab8f16c0f6423
    Port:           <none>
    Host Port:      <none>
    State:          Running
      Started:      Tue, 04 Jun 2024 21:04:58 +0800
    Ready:          True
    Restart Count:  0
    Environment:
      PROVISIONER_NAME:  k8s-sigs.io/nfs-subdir-external-provisioner
      NFS_SERVER:        10.103.236.199
      NFS_PATH:          /data/nfs/pv01
    Mounts:
      /persistentvolumes from nfs-client-root (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-p97qn (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  nfs-client-root:
    Type:      NFS (an NFS mount that lasts the lifetime of a pod)
    Server:    10.103.236.199
    Path:      /data/nfs/pv01
    ReadOnly:  false
  kube-api-access-p97qn:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   BestEffort
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type    Reason     Age   From               Message
  ----    ------     ----  ----               -------
  Normal  Scheduled  2m3s  default-scheduler  Successfully assigned default/nfs-client-provisioner-7f5bc48c68-8z5z6 to kube-node01
  Normal  Pulling    2m1s  kubelet            Pulling image "registry.cn-zhangjiakou.aliyuncs.com/hsuing/nfs-subdir-external-provisioner:v4.0.2"
  Normal  Pulled     2m    kubelet            Successfully pulled image "registry.cn-zhangjiakou.aliyuncs.com/hsuing/nfs-subdir-external-provisioner:v4.0.2" in 1.229420395s
  Normal  Created    2m    kubelet            Created container nfs-client-provisioner
  Normal  Started    2m    kubelet            Started container nfs-client-provisioner
```


3.3 Create the StorageClass

`[root@kube-master nfs]# cat 3.storageclass.yaml`

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass # resource type: StorageClass
metadata:
  name: nfs-provisioner-storage # the StorageClass name that PVCs reference in storageClassName
  annotations:
    storageclass.kubernetes.io/is-default-class: "true" # make this the default StorageClass
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # must match PROVISIONER_NAME in the provisioner Deployment
parameters:
  archiveOnDelete: "false" # "false": delete the directory when the PVC is deleted; "true": keep the data in an archived- directory
  pathPattern: "${.PVC.namespace}/${.PVC.name}" # directory path template; if unset, the directory is named ${namespace}-${pvcName}-${pvName}
```
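If the data should be kept when a PVC is deleted, a second class can set `archiveOnDelete: "true"`. The sketch below uses the hypothetical name `nfs-archive-storage` and leaves out the default-class annotation, so only one class is the default.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-archive-storage # hypothetical name; intentionally not marked as default
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # same provisioner as above
parameters:
  archiveOnDelete: "true" # on PVC deletion, rename the directory with an archived- prefix instead of deleting it
  pathPattern: "${.PVC.namespace}/${.PVC.name}"
```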


- Apply

```shell
[root@kube-master nfs]# kubectl apply -f 3.storageclass.yaml
storageclass.storage.k8s.io/nfs-provisioner-storage created
```

- Check

`storageclass` can be abbreviated as `sc`.

```shell
[root@kube-master nfs]# kubectl get sc
NAME                      PROVISIONER                                   RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
nfs-provisioner-storage   k8s-sigs.io/nfs-subdir-external-provisioner   Delete          Immediate           false                  3s
```

Use `describe` to view the details:

```shell
[root@kube-master nfs]# kubectl describe sc
Name:            nfs-provisioner-storage
IsDefaultClass:  Yes
Annotations:     kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"storage.k8s.io/v1","kind":"StorageClass","metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"true"},"name":"nfs-provisioner-storage"},"parameters":{"archiveOnDelete":"false","pathPattern":"${.PVC.namespace}/${.PVC.name}"},"provisioner":"k8s-sigs.io/nfs-subdir-external-provisioner"}
,storageclass.kubernetes.io/is-default-class=true
Provisioner:           k8s-sigs.io/nfs-subdir-external-provisioner
Parameters:            archiveOnDelete=false,pathPattern=${.PVC.namespace}/${.PVC.name}
AllowVolumeExpansion:  <unset>
MountOptions:          <none>
ReclaimPolicy:         Delete
VolumeBindingMode:     Immediate
Events:                <none>
```


3.4 Create a PVC (a PV is created and bound automatically)

`[root@kube-master nfs]# cat 4.nfs-pvc-test.yaml`

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc-test
spec:
  storageClassName: "nfs-provisioner-storage"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 0.5Gi
```

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: nfs-pvc-test

spec:

storageClassName: "nfs-provisioner-storage"

accessModes:

- ReadWriteMany

resources:

requests:

storage: 0.5Gi- 查看

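The PV name is generated automatically. The provisioner names it `pvc-` followed by the PVC's UID, which is why it looks random. The directory on the NFS server follows `pathPattern`, so `${.PVC.namespace}/${.PVC.name}` becomes `default/nfs-pvc-test`.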

[root@Rocky nfs-pvc-test]# pwd

/data/nfs/pv01/default/nfs-pvc-test
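Because the directory comes from `pathPattern`, changing the template changes the layout on the NFS server. The provisioner's README also describes templates that read PVC metadata. The following sketch assumes that the deployed provisioner version supports the `${.PVC.annotations.<key>}` form. The class name, claim name, and annotation value are hypothetical.

```yaml
# Hypothetical StorageClass whose directory layout is driven by a PVC annotation
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-annotated-path          # hypothetical name
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
parameters:
  archiveOnDelete: "false"
  pathPattern: "${.PVC.namespace}/${.PVC.annotations.nfs.io/storage-path}"
---
# A PVC using that class; its directory would be <namespace>/test-path
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc-annotated           # hypothetical name
  annotations:
    nfs.io/storage-path: "test-path"   # value substituted into pathPattern
spec:
  storageClassName: nfs-annotated-path
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 512Mi
```

The resulting directory would sit under the provisioner's base export directory. Here that base directory appears to be `/data/nfs/pv01`.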

[root@kube-master nfs]# kubectl get pv

pvc-8ed67f7d-d829-4d87-8c66-d8a85f50772f 512Mi RWX Delete Bound default/nfs-pvc-test nfs-provisioner-storage 5m19s

[root@kube-master nfs]# kubectl describe pv pvc-8ed67f7d-d829-4d87-8c66-d8a85f50772f

Name: pvc-8ed67f7d-d829-4d87-8c66-d8a85f50772f

Labels: <none>

Annotations: pv.kubernetes.io/provisioned-by: k8s-sigs.io/nfs-subdir-external-provisioner

Finalizers: [kubernetes.io/pv-protection]

StorageClass: nfs-provisioner-storage

Status: Bound

Claim: default/nfs-pvc-test

Reclaim Policy: Delete

Access Modes: RWX

VolumeMode: Filesystem

Capacity: 512Mi

Node Affinity: <none>

Message:

Source:

Type: NFS (an NFS mount that lasts the lifetime of a pod)

Server: 10.103.236.199

Path: /data/nfs/pv01/default/nfs-pvc-test

ReadOnly: false

Events: <none>
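Behind the scenes, the provisioner created an ordinary NFS PV. For comparison, the following sketch shows how an administrator might hand-write a PV with the same server, size, and access mode for static provisioning. The name `nfs-pv-manual` and the directory `/data/nfs/pv01/manual-pv` are hypothetical. `Retain` is used because the in-tree NFS volume type cannot delete its own backing storage.

```yaml
# Hypothetical statically provisioned PV mirroring the describe output above
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv-manual                     # hypothetical name
spec:
  capacity:
    storage: 512Mi
  accessModes:
  - ReadWriteMany
  volumeMode: Filesystem
  persistentVolumeReclaimPolicy: Retain   # in-tree NFS has no deleter; clean up by hand
  storageClassName: nfs-provisioner-storage   # a PVC of this class may bind here instead of triggering provisioning
  nfs:
    server: 10.103.236.199
    path: /data/nfs/pv01/manual-pv        # hypothetical directory; must already exist on the server
    readOnly: false
```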

`kubectl describe` shows more detailed information. Here it is for the PVC:

[root@kube-master nfs]# kubectl describe pvc

Name: nfs-pvc-test

Namespace: default

StorageClass: nfs-provisioner-storage

Status: Bound

Volume: pvc-8ed67f7d-d829-4d87-8c66-d8a85f50772f

Labels: <none>

Annotations: pv.kubernetes.io/bind-completed: yes

pv.kubernetes.io/bound-by-controller: yes

volume.beta.kubernetes.io/storage-provisioner: k8s-sigs.io/nfs-subdir-external-provisioner

Finalizers: [kubernetes.io/pvc-protection]

Capacity: 512Mi   (the requested storage size: 0.5Gi = 512Mi)

Access Modes: RWX   (the volume's access mode, RWX = ReadWriteMany)

VolumeMode: Filesystem

Used By: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ExternalProvisioning 13m persistentvolume-controller waiting for a volume to be created, either by external provisioner "k8s-sigs.io/nfs-subdir-external-provisioner" or manually created by system administrator

Normal Provisioning 13m k8s-sigs.io/nfs-subdir-external-provisioner_nfs-client-provisioner-57d6d9d5f6-dcxgq_259532a3-4dba-4183-be6d-8e8b320fc778 External provisioner is provisioning volume for claim "default/nfs-pvc-test"

Normal ProvisioningSucceeded 13m k8s-sigs.io/nfs-subdir-external-provisioner_nfs-client-provisioner-57d6d9d5f6-dcxgq_259532a3-4dba-4183-be6d-8e8b320fc778 Successfully provisioned volume pvc-8ed67f7d-d829-4d87-8c66-d8a85f50772f
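`nfs-provisioner-storage` is marked `IsDefaultClass: Yes`, so the `storageClassName` line in the PVC is optional. When a PVC omits the field, the DefaultStorageClass admission controller fills in the default class. That controller is enabled by default in most clusters. The following is a sketch with a hypothetical claim name.

```yaml
# Hypothetical PVC without storageClassName; the default class is assigned on admission
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc-default   # hypothetical name
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 512Mi      # same size as the 0.5Gi request above
```

Setting `storageClassName: ""` explicitly has the opposite effect. It opts the claim out of dynamic provisioning, so the claim only binds to PVs that have no class.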


3.5 Create a Pod

[root@kube-master nfs]# cat 5.nginx-pvc-test.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx-sc
spec:
  containers:
  - name: nginx
    image: nginx
    volumeMounts:
    - name: nginx-page
      mountPath: /usr/share/nginx/html
  volumes:
  - name: nginx-page
    persistentVolumeClaim:
      claimName: nfs-pvc-test

- View

[root@kube-master nfs]# kubectl apply -f 5.nginx-pvc-test.yaml

pod/nginx-sc created

[root@kube-master nfs]# kubectl describe pvc

Name: nfs-pvc-test

Namespace: default

StorageClass: nfs-provisioner-storage

Status: Bound

Volume: pvc-8ed67f7d-d829-4d87-8c66-d8a85f50772f

Labels: <none>

Annotations: pv.kubernetes.io/bind-completed: yes

pv.kubernetes.io/bound-by-controller: yes

volume.beta.kubernetes.io/storage-provisioner: k8s-sigs.io/nfs-subdir-external-provisioner

Finalizers: [kubernetes.io/pvc-protection]

Capacity: 512Mi

Access Modes: RWX

VolumeMode: Filesystem

Used By: nginx-sc

The `Used By` field shows that the nginx-sc Pod is now using this PVC. The name matches the Pod defined above.

[root@kube-master nfs]# kubectl get pods nginx-sc

NAME READY STATUS RESTARTS AGE

nginx-sc 1/1 Running 0 2m43s

Write some test data on the NFS server into the PVC's directory:

[root@Rocky ~]# echo "test nfs" > /data/nfs/pv01/default/nfs-pvc-test/index.html

Access test:

[root@kube-master nfs-subdir-external-provisioner]# curl 172.30.0.155

test nfs
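The claim is `ReadWriteMany`, so more than one Pod can mount it at the same time. The following sketch shows a Deployment whose replicas all mount `nfs-pvc-test`. The name `nginx-sc-rwx` and its label are hypothetical. Each replica should then serve the same `index.html` that was written on the NFS server.

```yaml
# Hypothetical Deployment: two nginx replicas sharing the same RWX claim
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-sc-rwx          # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-sc-rwx
  template:
    metadata:
      labels:
        app: nginx-sc-rwx     # must match the selector above
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: nginx-page
          mountPath: /usr/share/nginx/html
      volumes:
      - name: nginx-page
        persistentVolumeClaim:
          claimName: nfs-pvc-test   # the PVC created in 3.4
```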


Differences

nfs-subdir-external-provisioner:

- No longer requires configuring `selfLink` on kube-apiserver

- Does not require changing the default StorageClass
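For context, the `selfLink` point most likely compares this provisioner with the older nfs-client-provisioner. That provisioner relied on the `selfLink` metadata field, which Kubernetes 1.20 stopped populating by default. The usual workaround was a feature gate on kube-apiserver. The following excerpt from a kubeadm static Pod manifest is shown only to illustrate what is no longer needed. From Kubernetes 1.24 on, the gate can no longer be turned off.

```yaml
# Excerpt of /etc/kubernetes/manifests/kube-apiserver.yaml (kubeadm layout)
# Old workaround for nfs-client-provisioner; not needed with nfs-subdir-external-provisioner
spec:
  containers:
  - command:
    - kube-apiserver
    - --feature-gates=RemoveSelfLink=false   # only accepted on Kubernetes 1.20-1.23
    # ...remaining kube-apiserver flags unchanged
```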