1. Version Compatibility

| Velero version | Expected Kubernetes version compatibility | Tested on Kubernetes version |
|---|---|---|
| 1.14 | 1.18-latest | 1.27.9, 1.28.9, and 1.29.4 |
| 1.13 | 1.18-latest | 1.26.5, 1.27.3, 1.27.8, and 1.28.3 |
| 1.12 | 1.18-latest | 1.25.7, 1.26.5, 1.26.7, and 1.27.3 |
| 1.11 | 1.18-latest | 1.23.10, 1.24.9, 1.25.5, and 1.26.1 |
| 1.10 | 1.18-latest | 1.22.5, 1.23.8, 1.24.6, and 1.25.1 |

2. Velero Deployment

2.0 Storage Providers

Providers supported by Velero

| Provider | Object Store | Volume Snapshotter | Plugin Provider Repo | Setup Instructions |
|---|---|---|---|---|
| Amazon Web Services (AWS) | AWS S3 | AWS EBS | Velero plugin for AWS | AWS Plugin Setup |
| Google Cloud Platform (GCP) | Google Cloud Storage | Google Compute Engine Disks | Velero plugin for GCP | GCP Plugin Setup |
| Microsoft Azure | Azure Blob Storage | Azure Managed Disks | Velero plugin for Microsoft Azure | Azure Plugin Setup |
| VMware vSphere | 🚫 | vSphere Volumes | VMware vSphere | vSphere Plugin Setup |
| Container Storage Interface (CSI) | 🚫 | CSI Volumes | Velero plugin for CSI | CSI Plugin Setup |

Community-supported providers

| Provider | Object Store | Volume Snapshotter | Plugin Documentation | Contact |
|---|---|---|---|---|
| AlibabaCloud | Alibaba Cloud OSS | Alibaba Cloud | AlibabaCloud | GitHub Issue |
| DigitalOcean | DigitalOcean Object Storage | DigitalOcean Volumes Block Storage | StackPointCloud | |
| Hewlett Packard | 🚫 | HPE Storage | Hewlett Packard | Slack, GitHub Issue |
| OpenEBS | 🚫 | OpenEBS CStor Volume | OpenEBS | Slack, GitHub Issue |
| Portworx | 🚫 | Portworx Volume | Portworx | Slack, GitHub Issue |
| Storj | Storj Object Storage | 🚫 | Storj | GitHub Issue |

2.1 Storage Backends

2.1.1 Object Storage

MinIO

MinIO as a Docker container

```shell
docker run -dit --name minio -u 0 \
  -h minio --net host --restart always \
  -e MINIO_ROOT_USER="minio" \
  -e MINIO_ROOT_PASSWORD="miniow2p0w2r4" \
  -v /data/minio:/data -w /data \
  registry.cn-zhangjiakou.aliyuncs.com/hsuing/minio:RELEASE.2022-11-29T23-40-49Z \
  minio server /data --console-address '0.0.0.0:9001' --address="0.0.0.0:9000"

# Ports
# 9001 ---> web console (UI)
# 9000 ---> S3 API endpoint

# Create the bucket "velero"
docker exec -it minio bash -c 'mc mb velero; mc ls'

# MinIO storage endpoint: http://minio-ip:9000
```
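Before you point Velero at MinIO, it helps to wait until the S3 API on port 9000 answers. The sketch below polls MinIO's liveness endpoint `/minio/health/live`. The function name `wait_for_minio`, the retry defaults and the overridable `CURL` variable are illustrative assumptions and are not part of the setup above.

```shell
# Hypothetical helper: poll MinIO's liveness endpoint until it responds.
# Assumes the S3 API listens on port 9000 as in the docker run above.
# CURL can be overridden (e.g. CURL=true for a dry run); it defaults to curl.
wait_for_minio() {
  endpoint="${1:-http://127.0.0.1:9000}"  # MinIO S3 API base URL
  attempts="${2:-30}"                     # maximum number of probes
  delay="${3:-2}"                         # seconds to sleep between probes
  n=0
  while [ "$n" -lt "$attempts" ]; do
    # -f: fail on HTTP errors, -sS: quiet but still report errors
    if ${CURL:-curl} -fsS -o /dev/null "${endpoint}/minio/health/live"; then
      echo "MinIO is up at ${endpoint}"
      return 0
    fi
    n=$((n + 1))
    sleep "$delay"
  done
  echo "MinIO did not answer at ${endpoint}" >&2
  return 1
}
```

Usage, with the placeholder host from above: `wait_for_minio http://minio-ip:9000`.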

MinIO on Kubernetes

- Create minio-dp.yaml:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: velero
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: velero
  name: minio
  labels:
    component: minio
spec:
  strategy:
    type: Recreate
  selector:
    matchLabels:
      component: minio
  template:
    metadata:
      labels:
        component: minio
    spec:
      nodeSelector:
        kubernetes.io/hostname: k8smaster1   # change to a node in your cluster (data lives in its hostPath)
      volumes:
      - hostPath:
          path: /data/minio
          type: DirectoryOrCreate
        name: storage
      - name: config
        emptyDir: {}
      containers:
      - name: minio
        image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/minio:RELEASE.2022-11-29T23-40-49Z
        imagePullPolicy: IfNotPresent
        args:
        - server
        - /data
        - --config-dir=/config
        - --console-address=:9001
        env:
        - name: MINIO_ROOT_USER
          value: "admin"
        - name: MINIO_ROOT_PASSWORD
          value: "minio123"
        ports:
        - containerPort: 9000
        - containerPort: 9001
        volumeMounts:
        - name: storage
          mountPath: /data
        - name: config
          mountPath: "/config"
        resources:
          limits:
            cpu: "1"
            memory: 2Gi
          requests:
            cpu: "1"
            memory: 2Gi
---
apiVersion: v1
kind: Service
metadata:
  namespace: velero
  name: minio
  labels:
    component: minio
spec:
  sessionAffinity: None
  type: NodePort
  ports:
  - name: api
    port: 9000
    protocol: TCP
    targetPort: 9000
    nodePort: 30080
  - name: console
    port: 9001
    protocol: TCP
    targetPort: 9001
    nodePort: 30081
  selector:
    component: minio
---
apiVersion: batch/v1
kind: Job
metadata:
  namespace: velero
  name: minio-setup
  labels:
    component: minio
spec:
  template:
    metadata:
      name: minio-setup
    spec:
      nodeSelector:
        kubernetes.io/hostname: k8s-master01 # adjust to your environment
      restartPolicy: OnFailure
      volumes:
      - name: config
        emptyDir: {}
      containers:
      - name: mc
        image: airbyte/mc:latest
        imagePullPolicy: IfNotPresent
        command:
        - /bin/sh
        - -c
        - "mc --config-dir=/config config host add velero http://minio.velero.svc.cluster.local:9000 admin minio123 && mc --config-dir=/config mb -p velero/velero"
        volumeMounts:
        - name: config
          mountPath: "/config"
```
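The `minio-setup` Job in this manifest creates the `velero` bucket asynchronously, so the bucket may not exist yet right after `kubectl apply`. A minimal sketch that blocks until the Job has finished uses `kubectl wait`. The helper name, the 120s default timeout and the `KUBECTL` override are assumptions made for illustration.

```shell
# Hypothetical helper: wait until the minio-setup Job from minio-dp.yaml has completed,
# i.e. until mc has created the velero/velero bucket.
# KUBECTL can be overridden for dry runs; it defaults to kubectl.
wait_for_minio_setup() {
  ns="${1:-velero}"        # namespace used in minio-dp.yaml
  timeout="${2:-120s}"     # give up after this long
  ${KUBECTL:-kubectl} -n "$ns" wait --for=condition=complete job/minio-setup --timeout="$timeout"
}
```

After applying the manifest, `wait_for_minio_setup && kubectl -n velero logs job/minio-setup` shows the output of the `mc` commands.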

- Apply the manifest:

```shell
kubectl apply -f minio-dp.yaml
```

- Check:

```shell
kubectl get all -n velero
```

Alibaba Cloud OSS

Create a bucket in Alibaba Cloud OSS. Here the bucket is named k8s-hsuing and its region is oss-cn-shanghai.

OSS regions and endpoints: https://help.aliyun.com/zh/oss/user-guide/regions-and-endpoints

```shell
# Create the credentials file
mkdir -p /root/velero
cat >/root/velero/auth-oss.txt <<EOF
[default]
aws_access_key_id = <your-AccessKey-ID>
aws_secret_access_key = <your-AccessKey-Secret>
EOF

# Install
velero install \
  --image registry.cn-zhangjiakou.aliyuncs.com/hsuing/velero:v1.13.2 \
  --plugins registry.cn-zhangjiakou.aliyuncs.com/hsuing/velero-plugin-for-aws:v1.9.2 \
  --provider aws \
  --use-volume-snapshots=false \
  --bucket k8s-hsuing \
  --secret-file /root/velero/auth-oss.txt \
  --backup-location-config region=oss-cn-shanghai,s3ForcePathStyle="false",s3Url=http://oss-cn-shanghai.aliyuncs.com
# OSS uses virtual-hosted-style access, so set s3ForcePathStyle="false"

velero version
```
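Every `velero install` variant in this document reads an AWS-style credentials file like the one above. Because the file holds a secret key, it is worth creating it readable by its owner only. The sketch below does that. The function name `write_velero_credentials` is hypothetical.

```shell
# Hypothetical helper: write an AWS-style credentials file for velero install --secret-file.
# Usage: write_velero_credentials <file> <access-key-id> <secret-access-key>
write_velero_credentials() {
  file="$1"; key_id="$2"; secret="$3"
  mkdir -p "$(dirname "$file")"           # make sure the parent directory exists
  umask_old="$(umask)"; umask 077          # new file: readable by the owner only
  printf '[default]\naws_access_key_id = %s\naws_secret_access_key = %s\n' \
    "$key_id" "$secret" > "$file"
  umask "$umask_old"                       # restore the caller's umask
  chmod 600 "$file"                        # also tighten a file that already existed
}
```

For OSS, for example: `write_velero_credentials /root/velero/auth-oss.txt "<your-AccessKey-ID>" "<your-AccessKey-Secret>"`.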

Qiniu Cloud

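Create a storage bucket in Qiniu Cloud: https://portal.qiniu.com/kodo/bucket

Get the bucket's S3 domain: open the bucket you created, go to the bucket overview, find "S3 domain" and click Query.

Qiniu regions and S3 endpoints: https://developer.qiniu.com/kodo/4088/s3-access-domainname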

```shell
# Create the credentials file
mkdir -p /root/velero
cat >/root/velero/auth-qiniu.txt <<EOF
[default]
aws_access_key_id = <your-AccessKey>
aws_secret_access_key = <your-SecretKey>
EOF

# Install
velero install \
  --image registry.cn-zhangjiakou.aliyuncs.com/hsuing/velero:v1.13.2 \
  --plugins registry.cn-zhangjiakou.aliyuncs.com/hsuing/velero-plugin-for-aws:v1.9.2 \
  --provider aws \
  --use-volume-snapshots=false \
  --bucket 817nb3 \
  --secret-file /root/velero/auth-qiniu.txt \
  --backup-location-config region=cn-east-1,s3ForcePathStyle="false",s3Url=http://s3.cn-east-1.qiniucs.com
# For Qiniu, --bucket is the first part of the bucket's S3 domain

velero version
```
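As noted above, the Qiniu bucket name is the first part of the bucket's S3 domain. This sketch assumes the domain has the form `<bucket>.s3.<region>.qiniucs.com`; the values used above would correspond to `817nb3.s3.cn-east-1.qiniucs.com`, but check the console for your own domain. Under that assumption, the helper splits the domain into the `--bucket`, `region` and `s3Url` values. The helper name is hypothetical.

```shell
# Hypothetical helper: derive velero install values from a Qiniu S3 bucket domain.
# Assumes the domain looks like <bucket>.s3.<region>.qiniucs.com.
# Prints: <bucket> <region> <s3Url>
qiniu_from_domain() {
  domain="$1"
  bucket="${domain%%.*}"       # everything before the first dot
  endpoint="${domain#*.}"      # s3.<region>.qiniucs.com
  region="${endpoint#s3.}"     # <region>.qiniucs.com
  region="${region%%.*}"       # <region>
  printf '%s %s http://%s\n' "$bucket" "$region" "$endpoint"
}
```

The three fields are space-separated, so `set -- $(qiniu_from_domain <your-S3-domain>)` makes them available as `$1`, `$2` and `$3`.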

2.1.2 Restic

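Restic is a free, open-source, fast, efficient and secure cross-platform backup tool written in Go. Velero uses it to back up persistent volume data at the file-system level and send that data to Velero's object storage. Its speed depends on local I/O, network bandwidth and object-storage performance, so it is slower than snapshot-based backups. On the other hand, all resources and data end up in remote object storage. As a result, applications backed up with Restic can easily be restored even if the current cluster or its storage fails.

Tips: backing up PVs with Restic has some limitations:

- hostPath volumes are not supported. EFS, AzureFile, NFS, emptyDir, local and other volume types without a native snapshot concept are supported.
- Volumes to back up can only be identified through the Pods that mount them.
- Single-threaded operation is slow for large numbers of files.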

```shell
velero install \
  --provider aws \
  --bucket velero \
  --image registry.cn-zhangjiakou.aliyuncs.com/hsuing/velero:v1.13.2 \
  --plugins registry.cn-zhangjiakou.aliyuncs.com/hsuing/velero-plugin-for-aws:v1.9.2 \
  --namespace velero \
  --secret-file /home/qm/velero/velero-v1.8.1-linux-amd64/credentials-velero \
  --use-volume-snapshots=false \
  --use-node-agent \
  --uploader-type=restic \
  --default-volumes-to-fs-backup \
  --kubeconfig=/root/.kube/config \
  --backup-location-config region=minio,s3ForcePathStyle="true",s3Url=http://10.103.236.199:9000
# Velero 1.10+ (including the v1.13.2 image used here) replaced the restic flags:
#   --use-restic                 -> --use-node-agent
#   --default-volumes-to-restic  -> --default-volumes-to-fs-backup
# --uploader-type=restic keeps restic as the data mover
```
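With `--default-volumes-to-fs-backup` (called `--default-volumes-to-restic` before Velero 1.10), every Pod volume is backed up by default. Velero's opt-out annotation `backup.velero.io/backup-volumes-excludes` skips selected volumes. The wrapper below is a hypothetical convenience around `kubectl annotate`, and the namespace, Pod and volume names in the tests are placeholders.

```shell
# Hypothetical wrapper: exclude some of a Pod's volumes from file system backup.
# Usage: exclude_pod_volumes <namespace> <pod> <vol1[,vol2,...]>
# KUBECTL can be overridden for dry runs; it defaults to kubectl.
exclude_pod_volumes() {
  ns="$1"; pod="$2"; volumes="$3"
  ${KUBECTL:-kubectl} -n "$ns" annotate "pod/$pod" \
    "backup.velero.io/backup-volumes-excludes=$volumes" --overwrite
}
```

An annotation set directly on a Pod is lost when the Pod is recreated. For long-lived workloads, putting it in the Deployment's Pod template is the more durable choice.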

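Explanation:

- `--use-node-agent` (`--use-restic` in Velero 1.9 and earlier) uses the free, open-source backup tool restic to back up and restore persistent volume data. Enabling it deploys a DaemonSet named `node-agent` (named `restic` in Velero 1.9 and earlier).

❌ Note

After installation, the DaemonSet spec must be changed so that its hostPath points at the directory where Kubernetes stores Pod data in your environment (kubelet's default is /var/lib/kubelet/pods).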

```shell
# Change the hostPath to match your Kubernetes deployment
# (the DaemonSet is named node-agent in Velero 1.10+, restic in 1.9 and earlier)
kubectl -n velero patch daemonset node-agent -p '{"spec":{"template":{"spec":{"volumes":[{"name":"host-pods","hostPath":{"path":"/apps/data/kubelet/pods"}}]}}}}'

# Check the services
kubectl get all -n velero
```
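The patch above hard-codes `/apps/data/kubelet/pods`. If kubelet runs with a different root directory (its default is `/var/lib/kubelet`), a small generator saves you from hand-editing the nested JSON. `host_pods_patch` is a hypothetical name, and the volume name `host-pods` is taken from the command above.

```shell
# Hypothetical helper: print a strategic-merge patch that repoints the DaemonSet's
# host-pods volume at <kubelet-root-dir>/pods. Defaults to kubelet's standard root.
host_pods_patch() {
  kubelet_root="${1:-/var/lib/kubelet}"
  kubelet_root="${kubelet_root%/}"   # drop a trailing slash, if any
  printf '{"spec":{"template":{"spec":{"volumes":[{"name":"host-pods","hostPath":{"path":"%s/pods"}}]}}}}\n' "$kubelet_root"
}
```

Usage: `kubectl -n velero patch daemonset node-agent -p "$(host_pods_patch /apps/data/kubelet)"`.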

2.2 Server Installation

Official docs: https://velero.io/docs/v1.8/customize-installation/

2.2.1 MinIO in Kubernetes

```shell
# Create the credentials file
mkdir -p ~/k8s/velero
cat >~/k8s/velero/auth-minio.txt <<EOF
[default]
aws_access_key_id = admin
aws_secret_access_key = minio123
EOF
# The keys must match MINIO_ROOT_USER / MINIO_ROOT_PASSWORD in minio-dp.yaml

# Install Velero
velero install \
  --image registry.cn-zhangjiakou.aliyuncs.com/hsuing/velero:v1.13.2 \
  --plugins registry.cn-zhangjiakou.aliyuncs.com/hsuing/velero-plugin-for-aws:v1.9.2 \
  --provider aws \
  --bucket velero \
  --use-volume-snapshots=false \
  --secret-file ~/k8s/velero/auth-minio.txt \
  --backup-location-config region=minio,s3ForcePathStyle="true",s3Url=http://minio.velero.svc:9000
# --image / --plugins: custom image registry addresses
# The MinIO Service from minio-dp.yaml lives in the velero namespace, hence minio.velero.svc

# Check the versions
[root@kube-master velero]# velero version
Client:
  Version: v1.13.2
  Git commit: 4d961fb6fec384ed7f3c1b7c65c818106107f5a6
Server:
  Version: v1.13.2

# Check the Velero API groups
[root@kube-master velero]# kubectl api-versions |grep velero
velero.io/v1
velero.io/v2alpha1

# Check the pods
[root@kube-master velero]# kubectl get pod -n velero
NAME                      READY   STATUS    RESTARTS   AGE
velero-5cdfb5ccc4-xphcj   1/1     Running   0          3h25m

# List the CRDs (cluster-scoped, so no namespace is needed)
kubectl get crds -l component=velero
```
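Besides `velero version`, it is worth confirming that Velero can actually reach the bucket. The BackupStorageLocation reports this in `status.phase` (`Available` or `Unavailable`), and `velero backup-location get` shows the same information. The check below assumes the default location name `default` in namespace `velero`; the function name is hypothetical.

```shell
# Hypothetical check: succeed only if the BackupStorageLocation reports phase "Available".
# Usage: bsl_available [location-name] [namespace]
# KUBECTL can be overridden for dry runs; it defaults to kubectl.
bsl_available() {
  name="${1:-default}"; ns="${2:-velero}"
  phase="$(${KUBECTL:-kubectl} -n "$ns" get backupstoragelocation "$name" -o jsonpath='{.status.phase}')"
  echo "BackupStorageLocation $ns/$name: ${phase:-<no phase yet>}"
  [ "$phase" = "Available" ]
}
```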

2.2.2 MinIO as a Docker Container

```shell
velero install \
  --image registry.aliyuncs.com/elvin/velero:v1.13.2 \
  --plugins registry.aliyuncs.com/elvin/velero-plugin-for-aws:v1.9.2 \
  --provider aws \
  --bucket velero \
  --use-volume-snapshots=false \
  --secret-file /root/velero/auth-minio.txt \
  --backup-location-config region=minio,s3ForcePathStyle="true",s3Url=http://10.103.236.199:9000
# s3Url must point at the MinIO S3 API port (9000), not the web console port (9001)
# auth-minio.txt must contain the Docker MinIO credentials (minio / miniow2p0w2r4)
```
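Across the targets in this document, the `--backup-location-config` value differs mainly in `s3ForcePathStyle`. MinIO uses path-style access (`true`), while OSS and Qiniu, as configured above, use virtual-hosted style (`false`). The sketch below assembles the string and rejects anything other than `true`/`false`; `bsl_config` is a hypothetical helper.

```shell
# Hypothetical helper: build the --backup-location-config value for velero-plugin-for-aws.
# Usage: bsl_config <region> <true|false> <s3Url>
bsl_config() {
  region="$1"; path_style="$2"; s3url="$3"
  case "$path_style" in
    true|false) ;;
    *) echo "s3ForcePathStyle must be true or false" >&2; return 1 ;;
  esac
  printf 'region=%s,s3ForcePathStyle=%s,s3Url=%s\n' "$region" "$path_style" "$s3url"
}
```

The helper deliberately prints `s3ForcePathStyle=true` without double quotes. On a plain command line the shell strips the quotes in `s3ForcePathStyle="true"` anyway, but quotes printed inside a quoted `"$(...)"` substitution would reach Velero literally. Usage: `velero install ... --backup-location-config "$(bsl_config minio true http://10.103.236.199:9000)"`.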

- Check

```shell
## Check that the service has started
[root@kube-master velero]# kubectl get all -n velero
NAME                          READY   STATUS    RESTARTS   AGE
pod/velero-5cdfb5ccc4-kmwbs   1/1     Running   0          3m8s

NAME                     READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/velero   1/1     1            1           3m8s

NAME                                DESIRED   CURRENT   READY   AGE
replicaset.apps/velero-5cdfb5ccc4   1         1         1       3m8s
```
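Instead of re-running `kubectl get all` until the pod is `Running`, you can use `kubectl rollout status` to block until the `velero` Deployment is ready. A minimal sketch follows. The timeout default and the `KUBECTL` override are assumptions.

```shell
# Hypothetical helper: wait for the velero Deployment to finish rolling out.
# Usage: wait_for_velero [namespace] [timeout]
# KUBECTL can be overridden for dry runs; it defaults to kubectl.
wait_for_velero() {
  ns="${1:-velero}"; timeout="${2:-180s}"
  ${KUBECTL:-kubectl} -n "$ns" rollout status deployment/velero --timeout="$timeout"
}
```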

2.3 Client Installation

```shell
# Download
wget https://github.com/vmware-tanzu/velero/releases/download/v1.13.2/velero-v1.13.2-linux-amd64.tar.gz

# Extract; the archive contains a single binary that can be used directly
tar zxvf velero-v1.13.2-linux-amd64.tar.gz && mv velero-v1.13.2-linux-amd64/velero /usr/local/bin/

# Verify
[root@kube-master velero-v1.13.2-linux-amd64]# velero version
Client:
  Version: v1.13.2
  Git commit: 4d961fb6fec384ed7f3c1b7c65c818106107f5a6
<error getting server version: no matches for kind "ServerStatusRequest" in version "velero.io/v1">
# The error above appears because the server side has not been installed yet
```
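The download above is for linux/amd64. This sketch assumes arm64 builds follow the same `velero-<version>-linux-<arch>.tar.gz` naming on the releases page, and picks the tarball from `uname -m`. The helper name is hypothetical.

```shell
# Hypothetical helper: print the Velero release tarball URL for a Linux architecture.
# Usage: velero_tarball_url <version> [uname-m output]   (defaults to this machine)
velero_tarball_url() {
  version="$1"
  machine="${2:-$(uname -m)}"
  case "$machine" in
    x86_64|amd64)  arch=amd64 ;;
    aarch64|arm64) arch=arm64 ;;
    *) echo "unsupported architecture: $machine" >&2; return 1 ;;
  esac
  printf 'https://github.com/vmware-tanzu/velero/releases/download/%s/velero-%s-linux-%s.tar.gz\n' \
    "$version" "$version" "$arch"
}
```

Usage: `wget "$(velero_tarball_url v1.13.2)"`.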

- Enable shell completion

```shell
# Enable bash completion
velero completion bash > /etc/bash_completion.d/velero
. /etc/bash_completion.d/velero

# Show help
velero -h
```

3. Common Velero Operations

3.0 Basic Commands

Help: `velero --help`

```shell
# Basic velero commands
velero get backup      # list backups
velero get schedule    # list schedules
velero get restore     # list restores
velero get plugins     # list plugins

# Back up everything, keep it for 72 hours
velero backup create k8s-bakcup-all --ttl 72h

# Restore everything from the backup; resources that already exist are not overwritten
velero restore create --from-backup k8s-bakcup-all

# Restore only the default namespace, including cluster-scoped resources
velero restore create --from-backup k8s-bakcup-all --include-namespaces default --include-cluster-resources=true

# Restore storage: PVs and PVCs
velero restore create pvc --from-backup k8s-bakcup-all --include-resources persistentvolumeclaims,persistentvolumes

# Restore specific resource types: deployments, configmaps
velero restore create deploy-test --from-backup k8s-bakcup-all --include-resources deployments,configmaps

# Back up only resources labeled name=nginx-demo (-l, --selector matches resources by label)
velero backup create nginx-demo --selector name=nginx-demo

# Filters for what to back up / restore:
#   --include-namespaces               all resources in the given namespaces (cluster-scoped resources excluded)
#   --include-resources                resource types to include
#   --exclude-resources                resource types to exclude
#   --include-cluster-resources=true   include cluster-scoped resources

# Restore the resources of namespace test1 into namespace test2
velero restore create test1-test2 --from-backup k8s-bakcup-all --namespace-mappings test1:test2

# Backup hooks
# Velero can run predefined commands inside containers before and after a backup runs
```
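Backup hooks are configured through Pod annotations. The sketch below sets Velero's `pre.hook.backup.velero.io/*` and `post.hook.backup.velero.io/*` annotations with `kubectl annotate`; the command values are JSON arrays. The wrapper name and the example Pod, container and commands are hypothetical.

```shell
# Hypothetical wrapper: register pre/post backup hooks on a Pod via Velero's annotations.
# Usage: add_backup_hooks <namespace> <pod> <container> <pre-command-json> <post-command-json>
# KUBECTL can be overridden for dry runs; it defaults to kubectl.
add_backup_hooks() {
  ns="$1"; pod="$2"; container="$3"; pre_cmd="$4"; post_cmd="$5"
  ${KUBECTL:-kubectl} -n "$ns" annotate "pod/$pod" --overwrite \
    "pre.hook.backup.velero.io/container=$container" \
    "pre.hook.backup.velero.io/command=$pre_cmd" \
    "post.hook.backup.velero.io/container=$container" \
    "post.hook.backup.velero.io/command=$post_cmd"
}
```

For example, with placeholder commands: `add_backup_hooks default web-0 app '["/bin/sh","-c","sync"]' '["/bin/sh","-c","echo backup done"]'`.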

3.1 Backup

- Create the bucket

```shell
# Create the bucket "velero"
docker exec -it minio bash -c 'mc mb velero; mc ls'
```

- Create a backup

Syntax: `velero backup create <backup-name> --include-namespaces <namespace>`

```shell
[root@kube-master velero]# velero backup create k8s-backup-test --include-namespaces default
Backup request "k8s-backup-test" submitted successfully.
Run `velero backup describe k8s-backup-test` or `velero backup logs k8s-backup-test` for more details.
```
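`velero backup create` returns as soon as the request is submitted. `velero backup create ... --wait` blocks until the backup finishes. Alternatively, you can poll the Backup object's `status.phase`, as in this sketch. The helper name, the retry defaults and the `KUBECTL` override are assumptions.

```shell
# Hypothetical helper: poll a Backup's status.phase until it reaches a terminal phase.
# Usage: wait_for_backup <backup-name> [namespace] [tries] [delay-seconds]
# KUBECTL can be overridden for dry runs; it defaults to kubectl.
wait_for_backup() {
  name="$1"; ns="${2:-velero}"; tries="${3:-60}"; delay="${4:-5}"
  phase=""; n=0
  while [ "$n" -lt "$tries" ]; do
    phase="$(${KUBECTL:-kubectl} -n "$ns" get backups.velero.io "$name" -o jsonpath='{.status.phase}')"
    case "$phase" in
      Completed) echo "$name: Completed"; return 0 ;;
      PartiallyFailed|Failed|FailedValidation) echo "$name: $phase" >&2; return 1 ;;
    esac
    n=$((n + 1)); sleep "$delay"
  done
  echo "$name: still ${phase:-unknown} after $tries checks" >&2
  return 1
}
```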

- Check

```shell
# List the resources in the default namespace
[root@kube-master velero]# kubectl get pod
NAME                                      READY   STATUS    RESTARTS       AGE
app-prod-97dfb4bf-h59vq                   1/1     Running   25 (35m ago)   23d
app-prod-tcp-7bdfd5577c-tb8ls             1/1     Running   25 (35m ago)   23d
custom-nginx-errors-6df8ddcc64-ztqnz      1/1     Running   24 (35m ago)   23d
demo-6d4fc77748-dmg94                     1/1     Running   6 (35m ago)    8d
demo-6d4fc77748-fbvg6                     1/1     Running   6 (35m ago)    8d
nfs-client-provisioner-7f5bc48c68-8wld4   1/1     Running   32 (35m ago)   23d
old-demo-687c6ccd99-2rzwr                 1/1     Running   17 (35m ago)   18d
old-demo-687c6ccd99-rv9fz                 1/1     Running   53 (35m ago)   59d

[root@kube-master velero]# velero backup get
NAME              STATUS      ERRORS   WARNINGS   CREATED                         EXPIRES   STORAGE LOCATION   SELECTOR
k8s-backup-test   Completed   0        0          2024-07-25 10:12:23 +0800 CST   29d       default            <none>
```

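| Column | Description |
|---|---|
| NAME | Name of the Velero backup |
| STATUS | Backup status |
| ERRORS | Number of errors encountered during the backup |
| WARNINGS | Number of warnings encountered during the backup |
| CREATED | Creation time |
| EXPIRES | Time remaining until the backup expires |
| STORAGE LOCATION | Name of the backup storage location |
| SELECTOR | Label selector used to filter resources (`<none>` if not set) |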

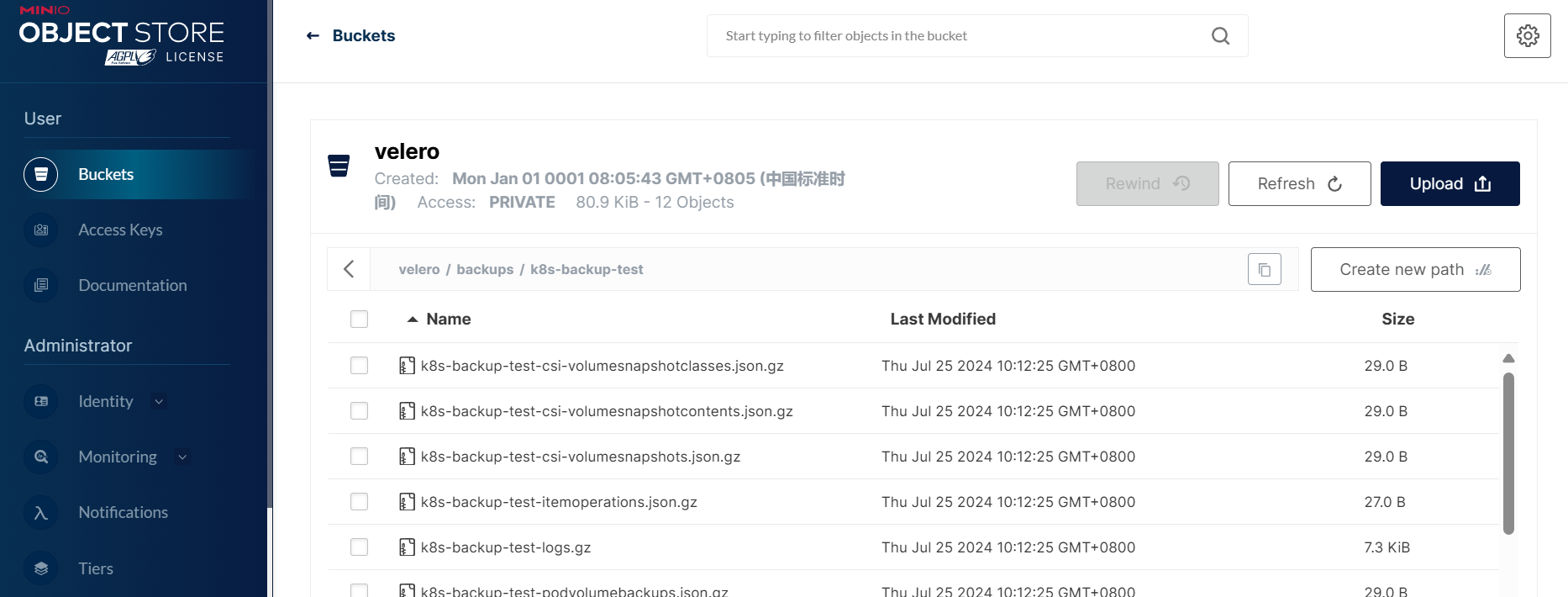

- Result

3.2 Restore

- Delete some of the pods (here by uninstalling the `demo` Helm release)

```shell
[root@kube-master velero]# helm list
NAME   NAMESPACE   REVISION   UPDATED                                   STATUS     CHART        APP VERSION
demo   default     3          2024-07-16 18:06:26.685169771 +0800 CST   deployed   demo-1.0.0   v1
[root@kube-master velero]# helm uninstall demo
release "demo" uninstalled
[root@kube-master velero]# kubectl get pod
NAME                                      READY   STATUS    RESTARTS       AGE
app-prod-97dfb4bf-h59vq                   1/1     Running   25 (36m ago)   23d
app-prod-tcp-7bdfd5577c-tb8ls             1/1     Running   25 (36m ago)   23d
custom-nginx-errors-6df8ddcc64-ztqnz      1/1     Running   24 (36m ago)   23d
nfs-client-provisioner-7f5bc48c68-8wld4   1/1     Running   32 (36m ago)   23d
old-demo-687c6ccd99-2rzwr                 1/1     Running   17 (36m ago)   18d
old-demo-687c6ccd99-rv9fz                 1/1     Running   53 (36m ago)   59d
```

- Restore

Syntax:

```shell
velero restore create <restore-name> --from-backup k8s-backup-test --include-namespaces default
```

```shell
[root@kube-master velero]# velero restore create demo --from-backup k8s-backup-test --include-namespaces default

# Explanation
# demo             ---> name of this restore (not a Pod name)
# k8s-backup-test  ---> name of the Velero backup
# default          ---> namespace to restore
```

- Check

```shell
[root@kube-master velero]# kubectl get pod
NAME                    READY   STATUS    RESTARTS   AGE
demo-6d4fc77748-dmg94   1/1     Running   0          5s
demo-6d4fc77748-fbvg6   1/1     Running   0          5s
```

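❌ Note

- Objects created while a backup is running may not be included in that backup.
- `velero restore` does not overwrite existing resources; it only restores resources that do not exist in the current cluster. Existing resources are not rolled back to their backed-up version. To roll them back, delete them before running the restore.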

```shell
[root@kube-master velero]# velero restore get
NAME   BACKUP            STATUS      STARTED                         COMPLETED                       ERRORS   WARNINGS   CREATED                         SELECTOR
demo   k8s-backup-test   Completed   2024-07-25 10:21:21 +0800 CST   2024-07-25 10:21:25 +0800 CST   0        12         2024-07-25 10:21:21 +0800 CST   <none>
```
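As noted above, a default restore skips resources that already exist. Velero 1.9 and later also accept `--existing-resource-policy=update` on `velero restore create`. With it, Velero tries to update existing resources from the backup instead of skipping them, and records a warning when an update fails. The wrapper below is hypothetical, with `VELERO` overridable for dry runs.

```shell
# Hypothetical wrapper: restore from a backup, updating resources that already exist
# instead of skipping them (--existing-resource-policy=update, Velero >= 1.9).
# Usage: restore_updating_existing <restore-name> <backup-name> [extra velero flags...]
restore_updating_existing() {
  restore="$1"; backup="$2"; shift 2
  ${VELERO:-velero} restore create "$restore" --from-backup "$backup" \
    --existing-resource-policy=update "$@"
}
```

Usage: `restore_updating_existing demo-update k8s-backup-test --include-namespaces default`.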

3.3 View Backups

```shell
[root@kube-master velero]# velero backup get
NAME                        STATUS      ERRORS   WARNINGS   CREATED                         EXPIRES   STORAGE LOCATION   SELECTOR
k8s-backup-test             Completed   0        0          2024-07-25 10:12:23 +0800 CST   29d       default            <none>
k8s-bakcup-all-2024-07-25   Completed   0        0          2024-07-25 10:43:26 +0800 CST   2d        default            <none>

# Inspect the backup result
velero backup describe k8s-backup-test
velero backup logs k8s-backup-test
```

3.4 Delete Backups

```shell
[root@kube-master velero]# velero delete backup k8s-bakcup-all-2024-07-25
Are you sure you want to continue (Y/N)? Y
Request to delete backup "k8s-bakcup-all-2024-07-25" submitted successfully.
The backup will be fully deleted after all associated data (disk snapshots, backup files, restores) are removed.

# Skip the confirmation prompt
velero delete backup k8s-bakcup-all-2024-07-25 --confirm
```

3.5 Uninstall Velero

```shell
velero uninstall

# Depending on the Velero version, uninstall may leave the namespace behind;
# in that case remove the remaining resources manually:
kubectl delete namespace/velero clusterrolebinding/velero
kubectl delete crds -l component=velero
```

3.6 Backup Jobs

One-time backups

```shell
# Back up all resources and keep them for 72 hours
velero backup create k8s-bakcup-all-$(date +%F) --ttl 72h

# List backups
velero backup get
```
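You can run the date-stamped backup above daily from the system crontab. In a crontab line `%` has a special meaning and must be escaped as `\%`, so wrapping the command in a script avoids that pitfall. The function name and the `VELERO` override below are hypothetical.

```shell
# Hypothetical wrapper: create a backup named <prefix>-YYYY-MM-DD with a TTL.
# Usage: create_dated_backup <prefix> [ttl]
# VELERO can be overridden for dry runs; it defaults to velero.
create_dated_backup() {
  prefix="$1"; ttl="${2:-72h}"
  ${VELERO:-velero} backup create "${prefix}-$(date +%F)" --ttl "$ttl"
}
```

Backup names must be unique, so a second run on the same day fails. The in-cluster schedules described next avoid both that and the crontab escaping.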

Periodic backups (cron-style schedules)

```shell
# Periodic backups
velero schedule create -h

# Daily backup at 16:00 (UTC), kept for 7 days (168h)
velero create schedule k8s-bakcup-all --schedule="0 16 * * *" --ttl 168h

# Create a backup every 6 hours
velero create schedule NAME --schedule="0 */6 * * *"

# Create a backup every 6 hours with the @every notation
velero create schedule NAME --schedule="@every 6h"

# Create a daily backup of the web namespace
velero create schedule NAME --schedule="@every 24h" --include-namespaces web

# Create a weekly backup, each living for 90 days (2160 hours)
velero create schedule NAME --schedule="@every 168h" --ttl 2160h0m0s

# Daily backups of the anchnet-devops-dev / anchnet-devops-test / anchnet-devops-prod / anchnet-devops-common-test namespaces
velero create schedule anchnet-devops-dev --schedule="@every 24h" --include-namespaces anchnet-devops-dev
velero create schedule anchnet-devops-test --schedule="@every 24h" --include-namespaces anchnet-devops-test
velero create schedule anchnet-devops-prod --schedule="@every 24h" --include-namespaces anchnet-devops-prod
velero create schedule anchnet-devops-common-test --schedule="@every 24h" --include-namespaces anchnet-devops-common-test

# List schedules
velero get schedule
```
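Two conversions recur in the schedules above. First, cron hours are evaluated in UTC, so 16:00 UTC is 00:00 China Standard Time (UTC+8). Second, `--ttl` is given in hours: 7 days is 168h and 90 days is 2160h. The sketch below covers both. The helper names are hypothetical, and the UTC offset is assumed to be fixed (no daylight saving time).

```shell
# Hypothetical helpers for building schedule arguments.
# utc_hour <local-hour> [utc-offset]: local hour -> UTC hour (fixed offset, default +8)
utc_hour() {
  hour="$1"; offset="${2:-8}"
  echo $(( (($hour - $offset) % 24 + 24) % 24 ))
}

# ttl_hours <days>: retention in days -> value for --ttl
ttl_hours() {
  echo "$(( $1 * 24 ))h"
}
```

Usage: `velero create schedule k8s-daily --schedule="0 $(utc_hour 0) * * *" --ttl "$(ttl_hours 7)"` runs at local midnight (UTC+8) and keeps each backup for 168h.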

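3.7 Migration

- Create the storage that Velero will use
- Install Velero in cluster k8s-A and create a backup
- Install Velero in cluster k8s-B against the same storage and restore the backup; this completes the Kubernetes migration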
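For the restore side of a migration, Velero's documentation recommends switching the target cluster's BackupStorageLocation to read-only. This prevents cluster B from creating or deleting backups in the bucket it shares with cluster A. The sketch below wraps that `kubectl patch`. The location name `default`, the namespace and the helper name are assumptions.

```shell
# Hypothetical helper: put a BackupStorageLocation into ReadOnly or ReadWrite mode.
# Usage: set_bsl_access_mode <ReadOnly|ReadWrite> [location-name] [namespace]
# KUBECTL can be overridden for dry runs; it defaults to kubectl.
set_bsl_access_mode() {
  mode="$1"; name="${2:-default}"; ns="${3:-velero}"
  case "$mode" in
    ReadOnly|ReadWrite) ;;
    *) echo "access mode must be ReadOnly or ReadWrite" >&2; return 1 ;;
  esac
  ${KUBECTL:-kubectl} -n "$ns" patch backupstoragelocation "$name" \
    --type merge -p "{\"spec\":{\"accessMode\":\"$mode\"}}"
}
```

On cluster k8s-B, one possible order is: `set_bsl_access_mode ReadOnly`, wait until `velero backup get` lists the backups synced from the bucket, restore, and then `set_bsl_access_mode ReadWrite` if k8s-B should take its own backups afterwards.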

4. Other Velero Use Cases

4.1 Within the Same Cluster

Within one cluster, manually restore a single namespace from a full backup into a different namespace:

```shell
# Restore namespace test into namespace test10000
velero restore create k8s-jf-test-all-restore --from-backup k8s-jf-test-all --include-namespaces test --namespace-mappings test:test10000

# Check the restore status
velero restore describe k8s-jf-test-all-restore
```

4.2 Across Clusters

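For cross-cluster use with Velero, both clusters must use the same cloud provider's persistent volume solution.

Beyond that, the two environments only need to be kept consistent.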