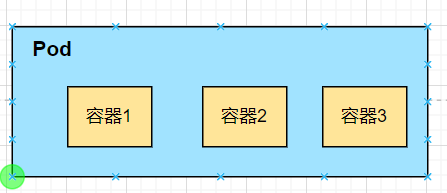

1.什么是Pod

Pod是Kubernetes中的抽象概念,也是Kubernetes中的最小部署单元。在每个Pod中至少包含一个容器或多个容器,这些容器共享Network(网络)、PID(进程)、IPC(进程间通信)HostName(主机名称)、VoLume(卷)。当我们需要部署应用时,其实就是在部署Pod,Kubernetes会将Pod中所有的容器作为一个整体,由Master调度到一个Node上运行。

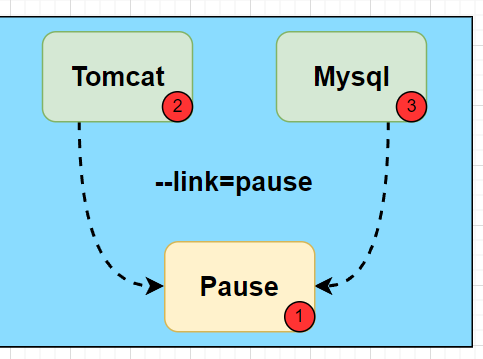

每个Pod中有个根容器(Pause容器),Pause容器的状态代表整个容器组的状态,其他业务容器共享Pause的IP,即Pod IP,共享Pause挂载的Volume,这样简化了同个Pod中不同容器之间的网络问题和文件共享问题

2.Pod共享网络

在Docker中,如果tomcat容器想与mysql容器进行共享,需要先启动mysql容器,然后tomcat容器通过--net=db:mysql选项即可和mysql共享网络,这也就意味着我们必须先运行mysql,后运行tomcat,才可以实现共享网络。

在Kubernetes中,Pod的网络共享和Docker的网络共享实现方式一致,只不过在启动Pod时,会先启动一个pause的容器,然后将后续的所有容器都--Link到这个paUse的容器,以实现网络共享。

从上图得出结论,

1、Pod中的Tomcat容器可以直接使⽤ localhost 与MySQL容器进⾏通信

2、Pod中的多个容器不允许绑定相同的端⼝,因为所有容器共享⽹络协议栈,看到的⽹络信息⼀致;

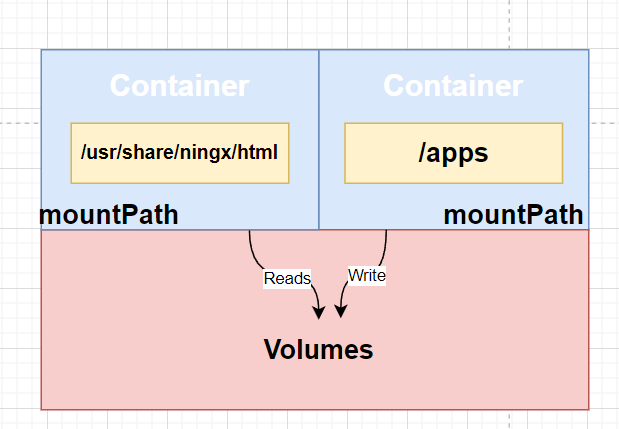

3.Pod共享存储

默认情况下所有容器的文件系统是互相隔离的,要实现文件共享则需要在Pod层面声明一个Volume卷,然后在需要共享的容器中声明VoLumeMounts来挂载文件系统,从而达到多个容器共享一个存储卷。

- 案例

apiVersion: v1

kind: Pod

metadata:

name: pod-mutil

spec:

volumes: # 申明Volumes共享卷

- name: webpage # 卷名称

emptyDir: {} # 卷类型

containers:

- name: random-app # 产⽣内容写⼊/apps/index.html ⽂件中

image: busybox

command: ['/bin/sh','-c','echo "web-$(date +%F)" >> /apps/index.html !$ sleep 30']

volumeMounts:

- name: webpage

mountPath: /apps

- name: nginx-app # 第⼆个容器读取第⼀个容器产⽣的内容,对外提供访问

image: nginx

volumeMounts:

- name: webpage

mountPath: /usr/share/nginx/htmlapiVersion: v1

kind: Pod

metadata:

name: pod-mutil

spec:

volumes: # 申明Volumes共享卷

- name: webpage # 卷名称

emptyDir: {} # 卷类型

containers:

- name: random-app # 产⽣内容写⼊/apps/index.html ⽂件中

image: busybox

command: ['/bin/sh','-c','echo "web-$(date +%F)" >> /apps/index.html !$ sleep 30']

volumeMounts:

- name: webpage

mountPath: /apps

- name: nginx-app # 第⼆个容器读取第⼀个容器产⽣的内容,对外提供访问

image: nginx

volumeMounts:

- name: webpage

mountPath: /usr/share/nginx/html4.Pod管理方式

4.1自主式管理Pod

在Kubernetes中,我们部署pod的时候,基本上都是使⽤控制器管理,那如果不使⽤控制器,也可以直接定义⼀个pod资源,那么就是pod⾃⼰去控制⾃⼰,这样的pod称为⾃主式pod

特点,

- 如果Pod被删除,那就是真的被删除,不会重新在运⾏⼀个新的Pod;

- 如果Pod所在的节点需要维护,那么节点会先执⾏驱逐,如果是⾃助式Pod,驱逐后不会被重建;

- 如果Pod期望部署多个副本,这个也能实现,但如果想持续维持副本数量,则需要⼈为参与,过于繁琐

1.创建⼀个⾃主式Pod

# cat nginx-pod.yaml

apiVersion: v1

kind: Pod

metadata:

name: nginx-pod

spec:

containers:

- name: nginx-container

image: nginx

ports:

- containerPort: 80# cat nginx-pod.yaml

apiVersion: v1

kind: Pod

metadata:

name: nginx-pod

spec:

containers:

- name: nginx-container

image: nginx

ports:

- containerPort: 802.测试删除Pod,验证是否能被彻底删除

[root@master ~]# kubectl delete pod nginx-pod[root@master ~]# kubectl delete pod nginx-pod3.测试节点故障,当Pod所运⾏的节点故障,那么该Pod会被删除,不会重新运⾏起来

# 驱逐

[root@master ~]# kubectl drain node01 --ignore-daemonsets --force

# 解除不可调度

[root@master ~]# kubectl uncordon node01 # 驱逐

[root@master ~]# kubectl drain node01 --ignore-daemonsets --force

# 解除不可调度

[root@master ~]# kubectl uncordon node014.2控制管理器

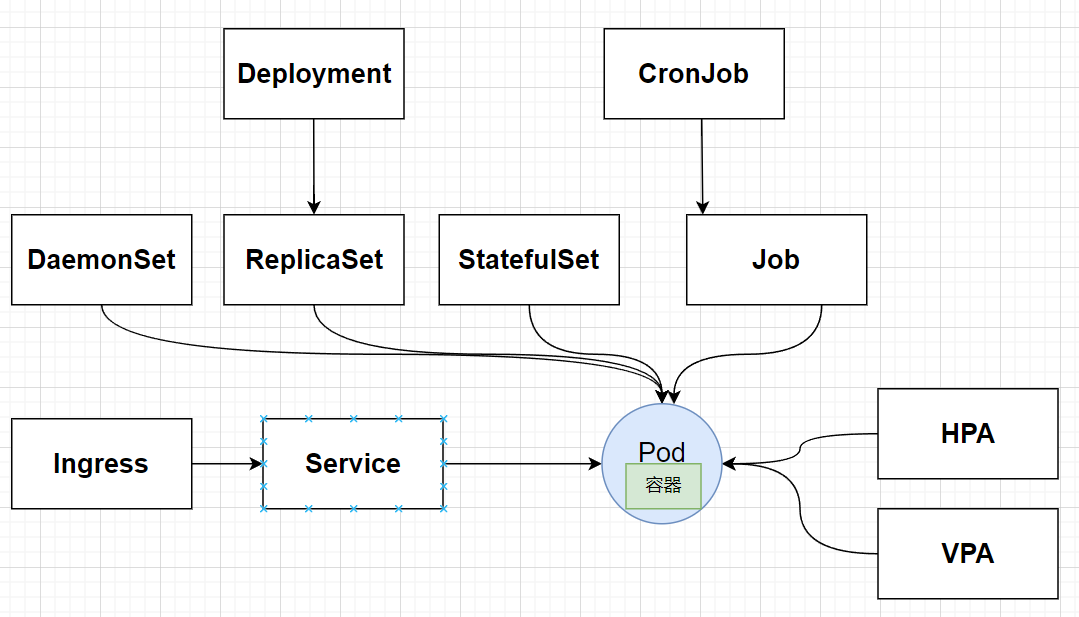

Kubernetes使用更高级的Controller的抽象层,来管理Pod实例。Controller可以创建和管理多个Pod,提供副本管理、滚动升级和集群级别的自愈能力。例如,如果一个Node故障,ControlLer就能自动将该节点上的Pod调度到其他健康的Node上。虽然可以直接使用Pod,但是在Kubernetes中通常是使用ControLLer来管理Pod的。在Kubernetes中也将这些Controller又称为工作负载

1、创建控制器管理的Pod

#cat nginx-dp.yaml

apiVersion: v1

kind: Deployment

metadata:

name: nginx-dp

spec:

replicas: 3

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: nginx:1.19.1#cat nginx-dp.yaml

apiVersion: v1

kind: Deployment

metadata:

name: nginx-dp

spec:

replicas: 3

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: nginx:1.19.12、测试删除Pod,会发现Pod被删除后,⽴即⼜启动了⼀个相同的Pod实例

#查看

kubectl get pod |grep nginx-dp

#删除

kubectl delete pod pod_name

#验证

kubectl get pod |grep nginx-dp#查看

kubectl get pod |grep nginx-dp

#删除

kubectl delete pod pod_name

#验证

kubectl get pod |grep nginx-dp3、测试节点故障,会发现控制器管理的Pod会在其他没有故障的节点上重新启动⼀份实例,以维持副本数量

#驱逐

kubectl drain node01 --ignore-daemonsets --force

#解除不可调度

kubectl uncordon node01#驱逐

kubectl drain node01 --ignore-daemonsets --force

#解除不可调度

kubectl uncordon node015.Pod运行应用

5.1 创建Pod应用

# cat nginx-pod.yaml

apiVersion: v1

kind: Pod

metadata:

name: nginx-pod

spec:

containers:

- name: nginx-container

image: nginx:latest# cat nginx-pod.yaml

apiVersion: v1

kind: Pod

metadata:

name: nginx-pod

spec:

containers:

- name: nginx-container

image: nginx:latest5.2 Pod运⾏阶段

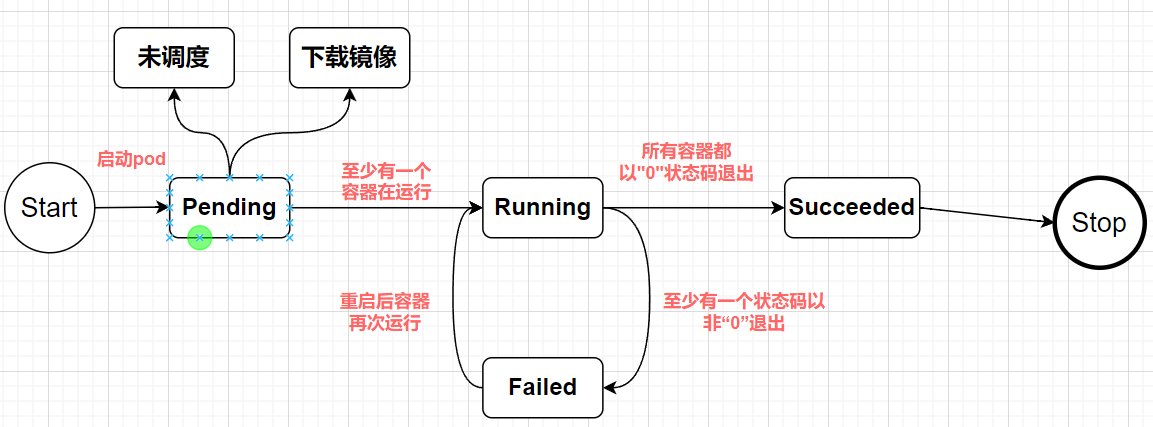

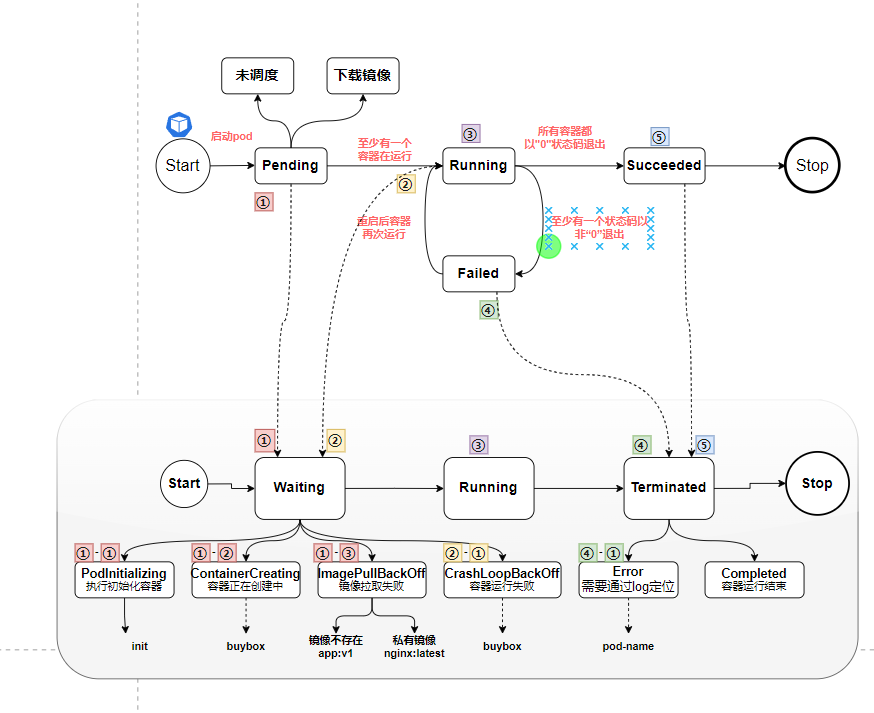

Pod创建后,起始为Pending阶段,如果其中至少有一个主要容器正常启动,则进入Running。之后的状态取决于 Pod 中是否有容器运行失败或被管理员停止运行,从而会进入Succeeded或者Failed阶段。

Pending:Pod已被Kubernetes系统接受,但有一个或者多个容器尚未创建亦未运行。此阶段包括等待Pod被调度的时间和通过网络下载镜像的时间Running:Pod已经绑定至某个节点,同时Pod中所有的容器都已创建。至少有一个容器在运行,或处于启动、重启状态Succeeded:Pod中的所有容器都已成功终止,并且不会再重启Failed:Pod中的所有容器都已终止,并且至少有一个容器是因为失败终止。也就是说,容器以非0状态退出或被系统终止Unknown:因为某些原因无法取得Pod的状态。这种情况通常是因为与Pod 所在主机通信失败

查看Pod所处阶段

[root@kube-master ~]# kubectl describe pod <pod_name>

Name: nginx-6799fc88d8-49dd6

Namespace: default

Priority: 0

Node: kube-node03/10.103.236.204

Start Time: Thu, 11 Apr 2024 15:20:45 +0800

Labels: app=nginx

pod-template-hash=6799fc88d8

Annotations: cni.projectcalico.org/podIP: 172.17.74.71/32

cni.projectcalico.org/podIPs: 172.17.74.71/32

Status: Running # 当前 Pod 为 Running 状态

。。。。

# 如果某节点死掉或者与集群中其他节点失联,Kubernetes 会实施⼀种策略,将失去的节点上运⾏的 Pod 的 phase 设置为 Failed

#查看方式

kubectl get pod jumpointpro-webapp-2 -n jumpoint-pro -o yaml | grep phase:

kubectl get pod jumpointpro-webapp-2 -n jumpoint-pro -o json | jq .status.phase

kubectl describe pod jumpointpro-webapp-2 -n jumpoint-pro | grep Status:[root@kube-master ~]# kubectl describe pod <pod_name>

Name: nginx-6799fc88d8-49dd6

Namespace: default

Priority: 0

Node: kube-node03/10.103.236.204

Start Time: Thu, 11 Apr 2024 15:20:45 +0800

Labels: app=nginx

pod-template-hash=6799fc88d8

Annotations: cni.projectcalico.org/podIP: 172.17.74.71/32

cni.projectcalico.org/podIPs: 172.17.74.71/32

Status: Running # 当前 Pod 为 Running 状态

。。。。

# 如果某节点死掉或者与集群中其他节点失联,Kubernetes 会实施⼀种策略,将失去的节点上运⾏的 Pod 的 phase 设置为 Failed

#查看方式

kubectl get pod jumpointpro-webapp-2 -n jumpoint-pro -o yaml | grep phase:

kubectl get pod jumpointpro-webapp-2 -n jumpoint-pro -o json | jq .status.phase

kubectl describe pod jumpointpro-webapp-2 -n jumpoint-pro | grep Status:查看Pod Status的具体原因

[root@kube-master ~]# kubectl describe pod <pod_name>

。。。

lastProbeTime:最后一次探测 Pod Condition 的时间戳。

lastTransitionTime:上次 Condition 从一种状态转换到另一种状态的时间。

message:上次 Condition 状态转换的详细描述。

reason:Condition 最后一次转换的原因。

status:Condition 状态类型,可以为 “True”, “False”, and “Unknown”.

Conditions:

Type Status

Initialized True #Pod中所有的 Init 容器都已经完成

Ready True #Pod可以对外提供服务,并可以加⼊对应的负载均衡中;

ContainersReady True #Pod中所有容器都已经处于就绪状态;

PodScheduled True #Pod已经成功被调度到了某节点上

。。。[root@kube-master ~]# kubectl describe pod <pod_name>

。。。

lastProbeTime:最后一次探测 Pod Condition 的时间戳。

lastTransitionTime:上次 Condition 从一种状态转换到另一种状态的时间。

message:上次 Condition 状态转换的详细描述。

reason:Condition 最后一次转换的原因。

status:Condition 状态类型,可以为 “True”, “False”, and “Unknown”.

Conditions:

Type Status

Initialized True #Pod中所有的 Init 容器都已经完成

Ready True #Pod可以对外提供服务,并可以加⼊对应的负载均衡中;

ContainersReady True #Pod中所有容器都已经处于就绪状态;

PodScheduled True #Pod已经成功被调度到了某节点上

。。。5.3容器运⾏阶段

文档,https://kubernetes.io/zh-cn/docs/concepts/workloads/pods/pod-lifecycle/#restart-policy

Kubernetes会跟踪Pod 中每个容器的状态,就像跟踪Pod 阶段-样。Pod中运行的容器状态与Pod阶段是存在关联关系的,所以当Pod出现故障时,将Pod的阶段状态和Pod中的容器状态结合起来查看,更容易定位具体的问题.

一旦调度器将Pod 分派给某个节点,kubelet就通过容器运行时开始为Pod创建容器。 名容器的状态有三种:Waiting(等待)、Running (运行中)和Terminated(已终止)。器状态官方站点

5.4 阶段状态实践

- 模拟Pod状态为Pending、⽽容器状态为waiting

kubectl run wordpress --image=wordpress

#查看

kubectl describe pod wordpresskubectl run wordpress --image=wordpress

#查看

kubectl describe pod wordpress- 模拟Pod状态为Running、⽽容器状态为waiting

kubectl run busbox --image=busboxkubectl run busbox --image=busbox- 模拟Pod状态为Failed,⽽容器状态为Terminated

cat pod_never.yml

apiVersion: v1

kind: Pod

metadata:

name: pod-never

spec:

restartPolicy: Never # Pod的重启策略

containers:

- name: pod-always

image: nginx

ports:

- containerPort: 80cat pod_never.yml

apiVersion: v1

kind: Pod

metadata:

name: pod-never

spec:

restartPolicy: Never # Pod的重启策略

containers:

- name: pod-always

image: nginx

ports:

- containerPort: 80#创建

kubectl apply pod_never.yml

#查看这台pod在哪台node上面运行,之后删除容器

docker rm -f container_name

#查看pod状态

kubectl describe pod pod-never#创建

kubectl apply pod_never.yml

#查看这台pod在哪台node上面运行,之后删除容器

docker rm -f container_name

#查看pod状态

kubectl describe pod pod-never- Failed && error

#通过kill 掉

docker kill container_name#通过kill 掉

docker kill container_name6.Pod运行应用对应字段

环境变量

自定义容器命令与参数

7.Pod重启策略

Pod 的 spec 中包含⼀个 restartPolicy 字段,⽤来设置 Pod 中所有容器的重启策略,取值有Always、OnFailure、Never。默认值是Always

- Always:当容器出现异常退出时,kubelet 会尝试重启该容器,已恢复正常状态;(默认策略)

- Never:当容器退出时,kubelet 永远不会尝试重启该容器(适合Job类⼀次性任务)

- OnFailure:当容器异常退出(且退出状态码⾮0时),kubelet会尝试重启容器(适合Job类⼀次性任务)

❌ 注意

通过 kubelet 重新启动的容器,后续如果还出现异常退出,则会以指数增加延迟(10s,20s,40s…)来进⾏容器的重新创建和启动,其最⻓延迟为 5 分钟。⼀旦容器执⾏了 10 分钟并且没有出现问题,kubelet 对该容器的重启计时器进⾏重置为初始状态。

7.1Always

1.编写yaml文件

apiVersion: v1

kind: Pod

metadata:

name: pod-always

spec:

restartPolicy: Always # Pod的重启策略

containers:

- name: pod-always

image: nginx

ports:

- containerPort: 80apiVersion: v1

kind: Pod

metadata:

name: pod-always

spec:

restartPolicy: Always # Pod的重启策略

containers:

- name: pod-always

image: nginx

ports:

- containerPort: 802.检查Pod的运⾏状态,可以看到 Pod 正常运⾏,RESTARTS(重启次数)字段为 0

[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-always 1/1 Running 0 80s[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-always 1/1 Running 0 80s3.正常停⽌容器应⽤,可以看到容器被重启了⼀次,然后Pod⼜恢复正常状态了;

[root@kube-master yaml]# kubectl exec pod-always -- /bin/bash -c "nginx -s quit"

2024/04/12 10:11:27 [notice] 37#37: signal process started

[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-always 1/1 Running 1 (26s ago) 3m58s[root@kube-master yaml]# kubectl exec pod-always -- /bin/bash -c "nginx -s quit"

2024/04/12 10:11:27 [notice] 37#37: signal process started

[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-always 1/1 Running 1 (26s ago) 3m58s4.⾮正常停⽌容器应⽤,可以看到容器被终⽌了,并且重启次数再次增加1次;

[root@kube-master yaml]# kubectl exec pod-always -- /bin/bash -c "kill 1"

[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-always 0/1 Completed 1 (2m22s ago) 5m54s

[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-always 1/1 Running 2 (27s ago) 6m19s[root@kube-master yaml]# kubectl exec pod-always -- /bin/bash -c "kill 1"

[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-always 0/1 Completed 1 (2m22s ago) 5m54s

[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-always 1/1 Running 2 (27s ago) 6m19s💡 说明

重启策略-Always,在创建单个 Pod 的情况下,不管 Pod 中的容器是否正常停⽌,最终都会恢复

7.2 Never

1.编写Pod的yaml⽂件

apiVersion: v1

kind: Pod

metadata:

name: pod-never

spec:

restartPolicy: Never # Pod的重启策略

containers:

- name: pod-always

image: nginx

ports:

- containerPort: 80apiVersion: v1

kind: Pod

metadata:

name: pod-never

spec:

restartPolicy: Never # Pod的重启策略

containers:

- name: pod-always

image: nginx

ports:

- containerPort: 80kubectl apply -f pod_never.yml

2.检查Pod的运⾏状态,可以看到 Pod 正常运⾏,RESTARTS(重启次数)字段为 0

[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-never 1/1 Running 0 10s[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-never 1/1 Running 0 10s3.⽆论正常或异常停⽌容器应⽤,容器不会重启应⽤;

[root@kube-master yaml]# kubectl exec pod-never -- /bin/bash -c "nginx -s quit"

2024/04/12 10:19:35 [notice] 31#31: signal process started

[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-never 0/1 Completed 0 2m26s[root@kube-master yaml]# kubectl exec pod-never -- /bin/bash -c "nginx -s quit"

2024/04/12 10:19:35 [notice] 31#31: signal process started

[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-never 0/1 Completed 0 2m26s7.3 OnFailure

1.编写Pod的yaml⽂件

apiVersion: v1

kind: Pod

metadata:

name: pod-OnFailure

spec:

restartPolicy: OnFailure # Pod的重启策略

containers:

- name: pod-always

image: nginx

ports:

- containerPort: 80apiVersion: v1

kind: Pod

metadata:

name: pod-OnFailure

spec:

restartPolicy: OnFailure # Pod的重启策略

containers:

- name: pod-always

image: nginx

ports:

- containerPort: 80- 创建

[root@kube-master yaml]# kubectl apply -f pod_onfailed.yml

pod/pod-onfailure created[root@kube-master yaml]# kubectl apply -f pod_onfailed.yml

pod/pod-onfailure created2.检查Pod的运⾏状态,可以看到 Pod 正常运⾏,RESTARTS(重启次数)字段为 0

[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-onfailure 1/1 Running 0 64s[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-onfailure 1/1 Running 0 64s3.正常停⽌容器应⽤,退出状态码为0;会发现容器不会重启;

[root@kube-master yaml]# kubectl exec pod-onfailure -- /bin/bash -c "nginx -s quit"

2024/04/12 10:24:51 [notice] 31#31: signal process started

[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-onfailure 0/1 Completed 0 2m39s[root@kube-master yaml]# kubectl exec pod-onfailure -- /bin/bash -c "nginx -s quit"

2024/04/12 10:24:51 [notice] 31#31: signal process started

[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-onfailure 0/1 Completed 0 2m39s4.⾮正常停⽌容器应⽤,由于⾮正常停⽌容器,且容器退出状态码不为0,所以会触发重启

如果 kill⽆法触发⾮正常停⽌,可以登录到对应节点,强制杀死

对应的容器(docker kill ContainerID)

8.Pod生命周期

文档,https://kubernetes.io/zh-cn/docs/concepts/workloads/pods/pod-lifecycle/

8.1什么是生命周期

Pod对象从创建开始至终止退出之间的时间称其为生命周期.

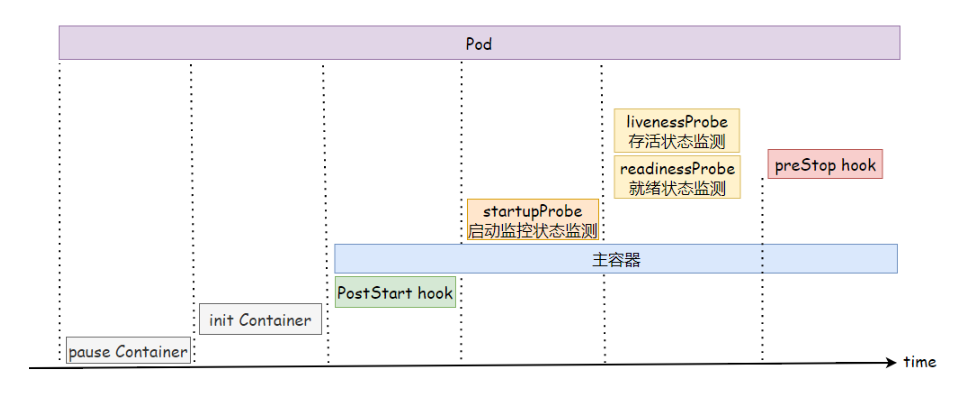

8.2生命周期流程

一个Pod的完整生命周期 过程,其中包含InitContainer、Pod Hook、健康检查三个主要部分

8.3生命周期总结

1、在启动任何容器之前,先创建pause基础容器,它初始化Pod的环境并为后续加⼊的容器提供共享的名称空间

2、按顺序以串⾏⽅式运⾏⽤户定义的各个初始化容器进⾏Pod环境初始化,任何⼀个初始化容器运⾏失败都会导致Pod创建失败,⽽后按照restartPolicy的策略进⾏处理,默认为重启

3、待所有初始化容器成功完成后,启动因此程序容器,如果有多个容器则会并⾏启动,⽽后各⾃维护各⾃的⽣命周期。当容器启动时会同时运⾏主容器上定义的PostStart钩⼦函数,该步骤失败将导致相关容器被重启

4、运⾏容器启动健康状态监测(startupProbe),判断容器是否启动成功,如果失败,则会根据restartPolicy中定义的策略进⾏处理,如果没有定义,则默认状态为Success

5、容器启动成功后定期进⾏存活状态监测(liveness)和就绪状态监测(readiness),存活状态监测失败将导致容器重启,⽽就绪状态监测失败会使得该容器从其所属的负载均衡中被移除

6、终⽌Pod时,会先运⾏preStop钩⼦函数,并在宽限期(terminationGrace-Period-Seconds)结束后终⽌容器,宽限期默认为30秒;

8.4 Pod创建过程

- 用户通过kubectl或其他api客户端提交需要创建的pod信息给apiServer

- apiServer开始生成pod对象的信息,并将信息存入etcd,然后返回确认信息至客户端

- apiServer开始反映etcd中的pod对象的变化,其它组件使用watch机制来跟踪检查apiServer上的变动

- scheduler发现有新的pod对象要创建,开始为Pod分配主机并将结果信息更新至apiServer

- node节点上的kubelet发现有pod调度过来,尝试调用docker启动容器,并将结果回送至apiServer

- apiServer将接收到的pod状态信息存入etcd中

8.5 Pod终止过程

- 用户向apiServer发送删除pod对象的命令

- apiServcer中的pod对象信息会随着时间的推移而更新,在宽限期内(默认30s),pod被视为dead

- 将pod标记为terminating状态

- kubelet在监控到pod对象转为terminating状态的同时启动pod关闭过程

- 端点控制器监控到pod对象的关闭行为时将其从所有匹配到此端点的service资源的端点列表中移除

- 如果当前pod对象定义了preStop钩子处理器,则在其标记为terminating后即会以同步的方式启动执行

- pod对象中的容器进程收到停止信号

- 宽限期结束后,若pod中还存在仍在运行的进程,那么pod对象会收到立即终止的信号

- kubelet请求apiServer将此pod资源的宽限期设置为0从而完成删除操作,此时pod对于用户已不可见

简单来说,当 Kubernetes 需要终止一个 Pod 时,它会按照以下步骤进行操作:

1.设置 Pod 状态为 Terminating ,并从所有服务的 Endpoints 列表中删除。

2.执行 PreStop Hook ,发送命令或 HTTP 请求到 Pod 中。

3.向 Pod 中的容器发送 SIGTERM 信号,通知容器即将关闭。

4.等待指定的优雅终止宽限期(terminationGracePeriod),通常是30秒,期间 PreStop Hook 和 SIGTERM 信号并行执行。

5.如果 Pod 在宽限期内终止完成,则 Pod 被删除。否则,Kubernetes 将发送 SIGKILL 信号,强制终止 Pod 中的容器。简单来说,当 Kubernetes 需要终止一个 Pod 时,它会按照以下步骤进行操作:

1.设置 Pod 状态为 Terminating ,并从所有服务的 Endpoints 列表中删除。

2.执行 PreStop Hook ,发送命令或 HTTP 请求到 Pod 中。

3.向 Pod 中的容器发送 SIGTERM 信号,通知容器即将关闭。

4.等待指定的优雅终止宽限期(terminationGracePeriod),通常是30秒,期间 PreStop Hook 和 SIGTERM 信号并行执行。

5.如果 Pod 在宽限期内终止完成,则 Pod 被删除。否则,Kubernetes 将发送 SIGKILL 信号,强制终止 Pod 中的容器。9.Init Container

9.1基本概念

Init Container是用来做初始化工作的容器。可以有一个或多个,如果多个按照定义的顺序依次执行,只有所有的执行完后,主容器才启动。由于一个Pod里的存储卷是共享的,所以Init Container里产生的数据可以被主容器使用到,但它仅仅是在Pod启动时,在主容器启动前执行,做初始化工作,如果 Pod 的 Init 容器失败,Kubernetes 会不断地重启该 Pod,直到Init 容器成功为止。如果 Pod 对应的restartPolicy 值为 Never,Kubernetes 不会重新启动 Pod。

9.2 原理

初始化容器的概念原理如下:

- 顺序执行

- Pod 中可以定义多个初始化容器,它们按照配置文件中定义的顺序依次执行。

- 当一个初始化容器成功完成其任务后,Kubernetes 才会启动下一个初始化容器。

- 只有当所有初始化容器都成功退出时,Kubernetes 才会启动 Pod 中的主要应用容器。

- 独立于应用容器

- 初始化容器与应用容器之间是相互隔离的,它们具有不同的镜像和环境,并且不共享存储卷。

- 初始化容器可以访问并修改将被应用容器使用的卷,从而实现为应用容器准备工作目录、下载配置文件、创建数据库或设置网络等任务。

- 用途

- 下载或者预热数据:比如从远程服务器拉取配置文件或数据资源到共享卷中。

- 依赖检查:确保某些外部服务已经可用,如数据库或消息队列。

- 应用初始化:执行复杂的预处理步骤,例如生成密钥对或加密凭据。

- 注册信息:向注册中心进行服务注册操作,等待注册成功后再启动应用。

- 生命周期管理

- 初始化容器不支持就绪探针(readiness probes),因为它们在Pod准备就绪之前必须完成。

- 如果某个初始化容器失败,Kubernetes 将不会启动后续的初始化容器或应用容器,而是等待该容器重新启动并完成其任务。

- 资源限制

- 就像普通的容器一样,init容器也可以设置资源限制,如CPU和内存请求/限制,以确保它们不会消耗过多的集群资源。

- 故障排查

- 初始化容器失败时,可以通过查看Pod的状态以及容器日志来了解初始化过程中遇到的问题。

9.3应⽤场景

1、app容器依赖MySQL的数据交互,所以可以启动一个初始化容器检查MySQL服务是否正常,如果正常则启动主容器

检查MySQL服务是否正常,如果正常则启动主容器

2、在启动主容器之前,使用初始化容器对系统内核参数进行调优,然后共享给主容器使用

3、获取集群成员节点地址,为主容器生成对应配置信息,这样主容器启动后,可以通过配置信息加入集群环境

场景1

1.编写yaml,使⽤初始化容器对MySQL端⼝进⾏检查,如果存活则运⾏Pod,否则就⼀直重启尝试

vim pod_init_check_mysql.yml

apiVersion: v1

kind: Pod

metadata:

name: pod-check-mysql

spec:

restartPolicy: Always

# 初始化容器

initContainers:

- name: check-mysql

image: oldxu3957/tools

command: ['sh', '-c', 'nc -z 10.103.236.204 3306']

securityContext:

privileged: true # 以特权模式运⾏容器,否则⽆法修改内核参数

# 主容器

containers:

- name: webapps

image: nginx

ports:

- containerPort: 80apiVersion: v1

kind: Pod

metadata:

name: pod-check-mysql

spec:

restartPolicy: Always

# 初始化容器

initContainers:

- name: check-mysql

image: oldxu3957/tools

command: ['sh', '-c', 'nc -z 10.103.236.204 3306']

securityContext:

privileged: true # 以特权模式运⾏容器,否则⽆法修改内核参数

# 主容器

containers:

- name: webapps

image: nginx

ports:

- containerPort: 80#创建

[root@kube-master yaml]# kubectl apply -f pod_init_check_mysql.yml

pod/pod-check-mysql created

#查看

[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-check-mysql 0/1 Init:0/1 0 49s

#当MySQL服务没有启动完毕,则该Pod会出现初始化失败,然后触发重启

[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-check-mysql 0/1 Init:CrashLoopBackOff 3 (16s ago) 3m37s

#安装MySQL服务,确保3306对外监听

yum install mariadb-server -y

systemctl start mariadb#创建

[root@kube-master yaml]# kubectl apply -f pod_init_check_mysql.yml

pod/pod-check-mysql created

#查看

[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-check-mysql 0/1 Init:0/1 0 49s

#当MySQL服务没有启动完毕,则该Pod会出现初始化失败,然后触发重启

[root@kube-master yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod-check-mysql 0/1 Init:CrashLoopBackOff 3 (16s ago) 3m37s

#安装MySQL服务,确保3306对外监听

yum install mariadb-server -y

systemctl start mariadb10.Pod Hook

容器⽣命周期钩⼦(Container Lifecycle Hooks)监听容器⽣命周期的特定事件,并在事件发⽣时执⾏已注册的回调函数。

Kubernetes支持postStart和preStop事件。当一个容器启动后,Kubernetes将立即发送postStart事件;在容器被终结之前,Kubernetes 将发送一个 preStop 事件。容器可以为每个事件指定一个处理程序。

10.1两种钩子

postStart

postStart:容器创建后立即执行,由于是异步执行,它无法保证一定在容器之前运行。如果失败,容器会被杀死,并根据RestartPolicy决定是否重启

#查看语法

kubectl explain pod.spec.containers.lifecycle#查看语法

kubectl explain pod.spec.containers.lifecycle#通过postStart设定端口重定向,将请求本机的80调度到本机的8080端口

lifecycle:

postStart:

exec:

command:

- "/bin/bash"

- "-c"

- "iptables -t nat -A PREROUTING -p tcp --dport 80 -j REDIRECT --to-port 8080"#通过postStart设定端口重定向,将请求本机的80调度到本机的8080端口

lifecycle:

postStart:

exec:

command:

- "/bin/bash"

- "-c"

- "iptables -t nat -A PREROUTING -p tcp --dport 80 -j REDIRECT --to-port 8080"preStop

preStop:在容器终止前执行。用于:释放占用的资源、清理注册过的信息、优雅的关闭进程。在其完成之前会阻塞删除容器的操作,默认等待时间为3θs,可以通过terminationGracePeriodSeconds宽限时间

#runner主要用来编译打包提高CI效率。启动后会注册到gitlab上,后续不需要可以删除Pod,然后清理注册信息。#通过preStop清理runner注册信息

lifecycle:

preStop:

exec:

command:

- /bin/bash

- -c

- "/usr/bin/gitlab-runner unregister --name $RUNNER_NAME"#runner主要用来编译打包提高CI效率。启动后会注册到gitlab上,后续不需要可以删除Pod,然后清理注册信息。#通过preStop清理runner注册信息

lifecycle:

preStop:

exec:

command:

- /bin/bash

- -c

- "/usr/bin/gitlab-runner unregister --name $RUNNER_NAME"10.2 案例

postStart 命令在容器的/usr/share/nginx/html/index.html自定义一段内容 preStop 负责优雅地终止 nginx 服务。terminationGracePeriodSeconds:宽限期,如果超过宽限期pod还没有终止,则会由SIGKILL强制关闭信号介入。

apiVersion: v1

kind: Pod

metadata:

name: pod-lifecycles

spec:

containers:

- name: nginx

image: nginx:latest

lifecycle:

postStart:

exec:

command:

- "/bin/sh"

- "-c"

- "echo Hello from the postStart handler > /usr/share/nginx/html/index.html"

preStop:

exec:

command:

- "/bin/sh"

- "-c"

- "nginx -s quit"apiVersion: v1

kind: Pod

metadata:

name: pod-lifecycles

spec:

containers:

- name: nginx

image: nginx:latest

lifecycle:

postStart:

exec:

command:

- "/bin/sh"

- "-c"

- "echo Hello from the postStart handler > /usr/share/nginx/html/index.html"

preStop:

exec:

command:

- "/bin/sh"

- "-c"

- "nginx -s quit"11. Pod检测探针

11.1 需要探针原因

当容器进程运行时如果出现了异常退出,Kubernetes则会认为容器发生故障,会尝试进行重启解决该问题。但有不少情况是发生了故障,但进程并没有退出。比如访问Web服务器时出现了500的内错误,可能是系统超载,也可能是资源死锁,但nginx进程并没有异常退出,在这种情况下重启容器可能是最佳的方法。那如何来实现这个检测呢

Kubernetes使用探针(probe)的方式来保障容器正常运行,实现零岩机;它通过kubeLet定期对容器进行健康检查(exec、tcp、http),当探针检测到容器状态异常时,会通过重启策略来进行重启或重建完成修复。修复后继续进行探针检测,已确保容器稳定运行

11.2 探针探测类型

startupProbe

用于检测容器中的应用是否已经正常启动。如果使用了启动探针,则所有其他探针都会被禁用,需要等待启动探针检测成功之后才可以执行。如果启动探针探测失败,则kubeLet会将容器杀死,而容器依其重启策略进行重启。如果容器没有提供启动探测,则默认状态为Success(1.16 版本增加的)

livenessProbe

用于检测容器是否存活,如果存活探测检测失败,kubelet会杀死容器,然后根据容器重启策略,决定是否重启该容器。如果容器不提供存活探针,则默认状态为Success

readinessProbe

指容器是否准备好接收网络请求,如果就绪探测失败,则将容器设定为未就绪状态,然后将其从负载均衡列表中移除,这样就不会有请求会调度到该Pod上;如果容器不提供就绪态探针,则默认状态为Success.

11.3探针检查机制

- exec:在容器内执⾏指定命令。如果命令退出时返回码为 0 则认为诊断成功。

- httpGet:对指定的IP、端⼝,执⾏HTTP请求。如果响应的状态码⼤于等于200且⼩于400,则诊断被认为是成功的。

- tcpSocket:对容器的 IP 地址上的指定端⼝执⾏ TCP 检查。如果端⼝打开,则诊断被认为是成功的

每次探测都将获得以下三种结果之⼀:

Success(成功):容器通过了诊断。

Failure(失败):容器未通过诊断,可能会触发重启操作

Unknown(未知):诊断失败,因此不会采取任何⾏动

11.4格式

apiVersion: v1

kind: Pod

metadata:

name: probe

spec:

containers:

- name: nginx

image: nginx:latest

livenessProbe:

exec:

httpGet:

tcpSocket:

initialDelaySeconds: #延迟多久探测

PeriodSeconds: #探测频率

timeoutSeconds: #探针超市

successThreshold: #成功N次,则认为是成功

failureThreshold: #失败N次,则认为是失败apiVersion: v1

kind: Pod

metadata:

name: probe

spec:

containers:

- name: nginx

image: nginx:latest

livenessProbe:

exec:

httpGet:

tcpSocket:

initialDelaySeconds: #延迟多久探测

PeriodSeconds: #探测频率

timeoutSeconds: #探针超市

successThreshold: #成功N次,则认为是成功

failureThreshold: #失败N次,则认为是失败11.5 区别

ReadinessProbe 与 LivenessProbe 的区别

ReadinessProbe 当检测失败后,将 Pod 的 IP:Port 从对应的 EndPoint 列表中删除。

livenessProbe 当检测失败后,将杀死容器并根据 Pod 的重启策略来决定作出对应的措施。ReadinessProbe 当检测失败后,将 Pod 的 IP:Port 从对应的 EndPoint 列表中删除。

livenessProbe 当检测失败后,将杀死容器并根据 Pod 的重启策略来决定作出对应的措施。StartupProbe 与 ReadinessProbe、LivenessProbe 的区别

如果三个探针同时存在,先执行 StartupProbe 探针,其他两个探针将会被暂时禁用,直到 pod 满足 StartupProbe 探针配置的条件,其他 2 个探针启动,如果不满足按照规则重启容器。另外两种探针在容器启动后,会按照配置,直到容器消亡才停止探测,而 StartupProbe 探针只是在容器启动后按照配置满足一次后,不再进行后续的探测。

11.6 什么时候使用探针?

何时使用存活探针(Liveness Probe)

容器可能卡死或无响应:如果你的应用程序在遇到问题时可能卡死或进入无响应状态,而不会自行崩溃,那么就应该使用存活探针。Liveness Probe 可以检测到这些状态,并触发 kubelet 终止并重启容器。

确保自动重启:如果你希望容器在探测失败时被杀死并重新启动,以确保应用的持续可用性,那么应配置存活探针。此时,可以将

restartPolicy设置为Always或OnFailure,以确保在探针检测到问题时容器能够自动重启。

何时使用就绪探针(Read iness Probe)

控制流量路由:如果你希望只有在探测成功时才开始向 Pod 发送请求流量,就需要指定就绪探针。就绪探针常与存活探针相同,但它确保 Pod 在启动阶段不会接收任何数据,只有探测成功后才开始接收流量。

维护状态:如果希望容器能自行进入维护状态,可以使用就绪探针,检查与存活探针不同的特定端点。对于依赖于后端服务的应用程序,可以同时使用存活探针和就绪探针。存活探针检测容器本身的健康状况,而就绪探针则确保所需的后端服务可用,避免将流量导向出错的 Pod。

Pod 删除:注意,如果只是想在 Pod 被删除时排空请求,通常不需要使用就绪探针。Pod 在删除时会自动进入未就绪状态,无论是否有就绪探针,直到容器停止为止。

何时使用启动探针(Startup Probe)

- 应用启动慢:如果容器在启动期间需要加载大型数据或配置文件,可以使用启动探针。它确保在启动完成前不会触发其他探针。

11.7案例

1.startupProbe

exec

执行一段命令,根据返回值判断执行结果。

返回值为0, 非0两种结果,可以理解为"echo $?" 执行一段命令,根据返回值判断执行结果。

返回值为0, 非0两种结果,可以理解为"echo $?"kubectl explain pod.spec.containers.startupProbe.execkubectl explain pod.spec.containers.startupProbe.execapiVersion: v1

kind: Pod

metadata:

name: pod-startup

spec:

containers:

- name: demoapp

image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1

ports:

- containerPort: 80

startupProbe:

exec:

command:

- "/bin/sh"

- "-c"

- "ps aux | grp demo.py"

initialDelaySeconds: 10 # 容器启动多久后开始探测,默认0

periodSeconds: 10 # 探测频率,10s探测⼀次

timeoutSeconds: 10 # 探测超时时长

successThreshold: 1 # 成功多少次则为成功,默认1次

failureThreshold: 3 # 失败多少次则为失败,默认3次apiVersion: v1

kind: Pod

metadata:

name: pod-startup

spec:

containers:

- name: demoapp

image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1

ports:

- containerPort: 80

startupProbe:

exec:

command:

- "/bin/sh"

- "-c"

- "ps aux | grp demo.py"

initialDelaySeconds: 10 # 容器启动多久后开始探测,默认0

periodSeconds: 10 # 探测频率,10s探测⼀次

timeoutSeconds: 10 # 探测超时时长

successThreshold: 1 # 成功多少次则为成功,默认1次

failureThreshold: 3 # 失败多少次则为失败,默认3次#第一次探测失败多久会重启

# initialDelaySeconds + (periodSeconds +timeoutSeconds) * failureThreshold

#程序启动完成后:此时不需要计入initiaLDelaySeconds

# (periodSeconds + timeoutSeconds)*failureThreshold#第一次探测失败多久会重启

# initialDelaySeconds + (periodSeconds +timeoutSeconds) * failureThreshold

#程序启动完成后:此时不需要计入initiaLDelaySeconds

# (periodSeconds + timeoutSeconds)*failureThresholdhttpGet

通过发起HTTTP协议的GET请求检测某个http请求的返回状态码,从而判断服务是否正常。

常见的状态码分为很多类,比如: "2xx,3xx"正常, "4xx,5xx"错误。

200: 返回状态码成功。

301: 永久跳转,会将跳转信息缓存到浏览器本地。

302: 临时跳转,并不会将本次跳转缓存到本地。

401: 验证失败。

403: 权限被拒绝。

404: 文件找不到。

413: 文件上传过大。

500: 服务器内部错误。

502: 无效的请求。

504: 后端应用网关相应超时。 通过发起HTTTP协议的GET请求检测某个http请求的返回状态码,从而判断服务是否正常。

常见的状态码分为很多类,比如: "2xx,3xx"正常, "4xx,5xx"错误。

200: 返回状态码成功。

301: 永久跳转,会将跳转信息缓存到浏览器本地。

302: 临时跳转,并不会将本次跳转缓存到本地。

401: 验证失败。

403: 权限被拒绝。

404: 文件找不到。

413: 文件上传过大。

500: 服务器内部错误。

502: 无效的请求。

504: 后端应用网关相应超时。kubectl explain pod.spec.containers.startupProbe.httpGetkubectl explain pod.spec.containers.startupProbe.httpGetapiVersion: v1

kind: Pod

metadata:

name: pod-startup

spec:

containers:

- name: demoapp

image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1

ports:

- containerPort: 80

startupProbe:

httpGet:

path: /

port: 80

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 10

successThreshold: 1

failureThreshold: 3apiVersion: v1

kind: Pod

metadata:

name: pod-startup

spec:

containers:

- name: demoapp

image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1

ports:

- containerPort: 80

startupProbe:

httpGet:

path: /

port: 80

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 10

successThreshold: 1

failureThreshold: 3tcpSocket

apiVersion: v1

kind: Pod

metadata:

name: pod-startup

spec:

containers:

- name: demoapp

image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1

ports:

- containerPort: 80

startupProbe:

tcpSocket:

port: 80

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 10

successThreshold: 1

failureThreshold: 3apiVersion: v1

kind: Pod

metadata:

name: pod-startup

spec:

containers:

- name: demoapp

image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1

ports:

- containerPort: 80

startupProbe:

tcpSocket:

port: 80

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 10

successThreshold: 1

failureThreshold: 32.livenessProbe

用于检测容器是否存活,如果存活探测检测失败,kubelet会杀死容器,然后根据容器重启策略,决定是否重启该容器。如果容器不提供存活探针,则默认状态为Success

exec

apiVersion: v1

kind: Pod

metadata:

name: pod-liveness

spec:

containers:

- name: demoapp

image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1

ports:

- containerPort: 80

livenessProbe:

exec:

command:

- "/bin/sh"

- "-c"

- '[ "$(curl -s 127.0.0.1/livez)" == "OK" ]'

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 10

successThreshold: 1

failureThreshold: 3apiVersion: v1

kind: Pod

metadata:

name: pod-liveness

spec:

containers:

- name: demoapp

image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1

ports:

- containerPort: 80

livenessProbe:

exec:

command:

- "/bin/sh"

- "-c"

- '[ "$(curl -s 127.0.0.1/livez)" == "OK" ]'

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 10

successThreshold: 1

failureThreshold: 3为了测试存活状态监测效果,可以⼿动将/livez接⼝的响应内容修改为任意值

kubectl exec -it pod-liveness-exec -- curl -S -X POST -d 'livez=error' http://127.0.0.1/livezkubectl exec -it pod-liveness-exec -- curl -S -X POST -d 'livez=error' http://127.0.0.1/livez会发现容器等待60s之后,会触发重启操作

kubectl describe pod pod-liveness

.....

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 20m default-scheduler Successfully assigned default/pod-liveness to kube-node01

Normal Pulled 13m (x4 over 20m) kubelet Container image "registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1" already present on machine

Normal Created 13m (x4 over 20m) kubelet Created container demoapp

Normal Started 13m (x4 over 20m) kubelet Started container demoapp

Warning Unhealthy 2m13s (x12 over 19m) kubelet Liveness probe failed:

Normal Killing 2m13s (x4 over 19m) kubelet Container demoapp failed liveness probe, will be restartedkubectl describe pod pod-liveness

.....

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 20m default-scheduler Successfully assigned default/pod-liveness to kube-node01

Normal Pulled 13m (x4 over 20m) kubelet Container image "registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1" already present on machine

Normal Created 13m (x4 over 20m) kubelet Created container demoapp

Normal Started 13m (x4 over 20m) kubelet Started container demoapp

Warning Unhealthy 2m13s (x12 over 19m) kubelet Liveness probe failed:

Normal Killing 2m13s (x4 over 19m) kubelet Container demoapp failed liveness probe, will be restartedhttpGet

1、编写yaml

[root@kube-master yaml]# cat pod-liveness-http.yaml

apiVersion: v1

kind: Pod

metadata:

name: pod-liveness-http

spec:

containers:

- name: pod-liveness-http

image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1

ports:

- containerPort: 80

livenessProbe:

httpGet:

path: 'livez'

port: 80

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 10

successThreshold: 1

failureThreshold: 3[root@kube-master yaml]# cat pod-liveness-http.yaml

apiVersion: v1

kind: Pod

metadata:

name: pod-liveness-http

spec:

containers:

- name: pod-liveness-http

image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1

ports:

- containerPort: 80

livenessProbe:

httpGet:

path: 'livez'

port: 80

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 10

successThreshold: 1

failureThreshold: 32、镜像中定义的默认响应是以200状态码响应,存活状态会成功完成,为了测试存活状态监测效果,可以⼿动将/livez接⼝的响应内容修改为任意值

kubectl exec -it pod-liveness-http -- curl -s -X POST -d 'livez=error' 127.0.0.1/livez

#现象

[root@kube-master ~]# kubectl describe pod pod-liveness-http

。。。

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 18m default-scheduler Successfully assigned default/pod-liveness-http to kube-node01

Warning Unhealthy 70s (x3 over 90s) kubelet Liveness probe failed: HTTP probe failed with statuscode: 506

Normal Killing 70s kubelet Container pod-liveness-http failed liveness probe, will be restarted

Normal Pulled 40s (x2 over 18m) kubelet Container image "registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1" already present on machine

Normal Created 40s (x2 over 18m) kubelet Created container pod-liveness-http

Normal Started 40s (x2 over 18m) kubelet Started container pod-liveness-httpkubectl exec -it pod-liveness-http -- curl -s -X POST -d 'livez=error' 127.0.0.1/livez

#现象

[root@kube-master ~]# kubectl describe pod pod-liveness-http

。。。

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 18m default-scheduler Successfully assigned default/pod-liveness-http to kube-node01

Warning Unhealthy 70s (x3 over 90s) kubelet Liveness probe failed: HTTP probe failed with statuscode: 506

Normal Killing 70s kubelet Container pod-liveness-http failed liveness probe, will be restarted

Normal Pulled 40s (x2 over 18m) kubelet Container image "registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1" already present on machine

Normal Created 40s (x2 over 18m) kubelet Created container pod-liveness-http

Normal Started 40s (x2 over 18m) kubelet Started container pod-liveness-httptcpSocket

1、编写yaml文件

[root@kube-master yaml]# cat pod-liveness-tcp.yaml

apiVersion: v1

kind: Pod

metadata:

name: pod-liveness-tcp

spec:

containers:

- name: pod-liveness-tcp

image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1

ports:

- containerPort: 80

livenessProbe:

tcpSocket:

port: 80

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 10

successThreshold: 1

failureThreshold: 3[root@kube-master yaml]# cat pod-liveness-tcp.yaml

apiVersion: v1

kind: Pod

metadata:

name: pod-liveness-tcp

spec:

containers:

- name: pod-liveness-tcp

image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1

ports:

- containerPort: 80

livenessProbe:

tcpSocket:

port: 80

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 10

successThreshold: 1

failureThreshold: 32、直接kill掉这个容器,查看现象

3.readinessProbe

指容器是否准备好接收网络请求,如果就绪探测失败,则将容器设定为未就绪状态,然后将其从负载均衡列表中移除,这样就不会有请求会调度到该Pod上;如果容器不提供就绪态探针,则默认状态为Success。

有些程序启动后需要加载配置或数据,甚至有些程序需要运行预热的过程,需要一定的时间。所以需要避免Pod启动成功后立即让其处理客户端请求,而应该让其初始化完成后转为就绪状态,在对外提供服务。此类应用就需要使用readinessProbe探针

exec

1、编写yaml文件

[root@kube-master yaml]# cat pod-readiness-exec.yaml

apiVersion: v1

kind: Pod

metadata:

name: pod-readiness-exec

labels:

app: readiness

spec:

containers:

- name: pod-readiness-exec

image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1

ports:

- containerPort: 80

readinessProbe:

exec:

command:

- "/bin/sh"

- "-c"

- '[ "$(curl -s 127.0.0.1/livez)" == "OK" ]'

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 10

successThreshold: 1

failureThreshold: 3[root@kube-master yaml]# cat pod-readiness-exec.yaml

apiVersion: v1

kind: Pod

metadata:

name: pod-readiness-exec

labels:

app: readiness

spec:

containers:

- name: pod-readiness-exec

image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1

ports:

- containerPort: 80

readinessProbe:

exec:

command:

- "/bin/sh"

- "-c"

- '[ "$(curl -s 127.0.0.1/livez)" == "OK" ]'

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 10

successThreshold: 1

failureThreshold: 3httpGet

1、编写yaml

2、为了测试就绪状态监测效果,将/readyz修改为⾮OK

kubectl exec -it pod-readiness-http -- curl -s -X POST -d 'readyz=error' http://127.0.0.1/readyzkubectl exec -it pod-readiness-http -- curl -s -X POST -d 'readyz=error' http://127.0.0.1/readyz3、由于pod未就绪,所以会将该节点从Service负载均衡中准为未就绪状态(需要事先创建好负载均衡,否则难以观察效果)

kubectl get pod pod-readiness-http

kubectl describe pod pod-readiness-httpkubectl get pod pod-readiness-http

kubectl describe pod pod-readiness-httptcpSocket

[root@kube-master yaml]# cat pod-readiness-tcp.yaml

apiVersion: v1

kind: Pod

metadata:

name: pod-readiness-tcp

labels:

app: readiness

spec:

containers:

- name: pod-readiness-tcp

image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1

ports:

- containerPort: 80

readinessProbe:

tcpSocket:

port: 80

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 10

successThreshold: 1

failureThreshold: 3[root@kube-master yaml]# cat pod-readiness-tcp.yaml

apiVersion: v1

kind: Pod

metadata:

name: pod-readiness-tcp

labels:

app: readiness

spec:

containers:

- name: pod-readiness-tcp

image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/demoapp:v1

ports:

- containerPort: 80

readinessProbe:

tcpSocket:

port: 80

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 10

successThreshold: 1

failureThreshold: 3