Official documentation: https://github.com/kubernetes/kube-state-metrics

1. kube-state-metrics

kube-state-metrics converts the state of the various objects in the Kubernetes API into metrics that Prometheus can consume.

1.1 Version compatibility

| kube-state-metrics | Kubernetes client-go Version |
|---|---|
| v2.4.2 | v1.22, v1.23, v1.24 |
| v2.5.0 | v1.22, v1.23, v1.24 |
| v2.8.2 | v1.26 |
| v2.9.2 | v1.26 |
| v2.10.1 | v1.27 |
| v2.11.0 | v1.28 |
| v2.12.0 | v1.29 |
| v2.13.0 | v1.30 |
| v2.14.0 | v1.31 |
| main | v1.31 |

1.2 Common metrics

The table below lists the kube-state-metrics metrics most often used in monitoring queries.

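| Category | Metric | Type | Description |
|---|---|---|---|
| Node | kube_node_info | Gauge | Information about every node in the cluster. Applying sum() gives the total number of nodes. |
| Node | kube_node_spec_unschedulable | Gauge | Whether a node can schedule new Pods (1 means unschedulable). Applying sum() gives the number of unschedulable nodes. |
| Node | kube_node_status_allocatable | Gauge | Resources on a node that are available for scheduling, including CPU, memory, and Pods. Filter by label to see a specific resource. |
| Node | kube_node_status_capacity | Gauge | Total resources of a node, including CPU, memory, and Pods. Filter by label to see a specific resource. |
| Node | kube_node_status_condition | Gauge | Node conditions. Conditions such as OutOfDisk, MemoryPressure, and DiskPressure help locate unhealthy nodes. |
| Pod | kube_pod_info | Gauge | Information about every Pod. Applying sum() gives the total number of Pods in the cluster. |
| Pod | kube_pod_status_phase | Gauge | Current phase of every Pod, exposed in the phase label: Pending, Running, Succeeded, Failed, or Unknown. |
| Pod | kube_pod_status_ready | Gauge | Readiness of every Pod. Applying sum() to the series with condition="true" gives the number of Ready Pods. |
| Pod | kube_pod_status_scheduled | Gauge | Scheduling status of every Pod. Applying sum() to the series with condition="true" gives the number of scheduled Pods. |
| Container | kube_pod_container_info | Gauge | Information about every container. Applying sum() gives the total number of containers in the cluster. |
| Container | kube_pod_container_status_ready | Gauge | Whether each container is Ready. Applying sum() gives the number of Ready containers. |
| Container | kube_pod_container_status_restarts_total | Counter | Cumulative number of restarts per container. Applying irate() gives the container restart rate. |
| Container | kube_pod_container_status_running | Gauge | Whether each container is Running. Applying sum() gives the number of running containers. |
| Container | kube_pod_container_status_terminated | Gauge | Whether each container is Terminated. Applying sum() gives the number of terminated containers. |
| Container | kube_pod_container_status_waiting | Gauge | Whether each container is Waiting. Applying sum() gives the number of waiting containers. |
| Container | kube_pod_container_resource_requests | Gauge | Resource requests of each container. Filter by label to see a specific resource. |
| Container | kube_pod_container_resource_limits | Gauge | Resource limits of each container. Filter by label to see a specific resource. |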
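
As a rough illustration of how the metrics in the table can be queried, the sketch below builds request URLs for the instant-query endpoint of the Prometheus HTTP API (`/api/v1/query`). The Prometheus address, the query names, and the 5-minute window are assumptions made for this example; they do not come from the original notes.

```python
from urllib.parse import urlencode

# Hypothetical Prometheus address (assumption); replace with your own.
PROMETHEUS_URL = "http://prometheus.example:9090"

# PromQL expressions built from metrics listed in the table.
# The dictionary keys are illustrative names, not part of any API.
QUERIES = {
    "node_count": "sum(kube_node_info)",
    "unschedulable_nodes": "sum(kube_node_spec_unschedulable)",
    "ready_pods": 'sum(kube_pod_status_ready{condition="true"})',
    # The 5m range is an arbitrary choice for this sketch.
    "restart_rate": "sum(irate(kube_pod_container_status_restarts_total[5m]))",
}


def instant_query_url(base_url: str, promql: str) -> str:
    """Return the URL of a Prometheus instant query for the given PromQL."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


if __name__ == "__main__":
    for name, promql in QUERIES.items():
        print(f"{name}: {instant_query_url(PROMETHEUS_URL, promql)}")
```

The printed URLs can be fetched with any HTTP client; the sketch stops at URL construction so that it runs without a live Prometheus server.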

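1.3 Monitoring dimensions and strategies for a Kubernetes cluster

| Target | Service discovery role | Monitoring method | Data source |
|---|---|---|---|
| Collect basic kubelet runtime metrics from the kubelet on each node | node | White-box | kubelet |
| Collect metrics for the containers running on each node from the cAdvisor built into the kubelet | node | White-box | kubelet |
| Collect host resource metrics from the Node Exporter deployed on each node | node | White-box | node exporter |
| For applications with built-in Prometheus support, collect their custom metrics from the Pod instances | pod | White-box | custom pod |
| Discover the API Server address and collect Kubernetes cluster runtime metrics from it | endpoints | White-box | api server |
| Discover the addresses of Services in the cluster and collect network probe metrics through Blackbox Exporter | service | Black-box | blackbox exporter |
| Discover the access information of Ingresses in the cluster and collect network probe metrics through Blackbox Exporter | ingress | Black-box | blackbox exporter |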

1.4 addon-resizer

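Official documentation: https://github.com/kubernetes/autoscaler/tree/master/addon-resizer

Addon resizer is an interesting little add-on. When kube-state-metrics is used for monitoring, its resource consumption keeps rising as the number of Pods in the cluster grows. The addon-resizer container runs as a sidecar, watches another container in the same Pod (kube-state-metrics in this case), and vertically scales that container up or down. Addon resizer scales kube-state-metrics linearly with the number of nodes in the cluster, so that it keeps enough capacity to serve the complete metrics API.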
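
The linear scaling described above can be pictured as a base amount plus a fixed increment per node. The sketch below only illustrates that arithmetic: the function name and all numbers are hypothetical, and it ignores every other detail of how the real addon-resizer decides when to apply a change.

```python
def linear_request(base: int, extra_per_node: int, nodes: int) -> int:
    """Resource request that grows linearly with the number of nodes."""
    if nodes < 0:
        raise ValueError("nodes must be non-negative")
    return base + extra_per_node * nodes


if __name__ == "__main__":
    # Illustrative values only (assumptions): 100m CPU base plus 1m per node,
    # 100 MiB memory base plus 2 MiB per node.
    for nodes in (10, 100, 1000):
        cpu_millicores = linear_request(100, 1, nodes)
        memory_mib = linear_request(100, 2, nodes)
        print(f"{nodes} nodes -> cpu={cpu_millicores}m memory={memory_mib}Mi")
```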

2. cAdvisor

cAdvisor is a tool for monitoring container resource usage and performance. It collects metrics for CPU, memory, disk, network, and file systems.

3. node-exporter

node-exporter is a collector for host metrics. It gathers CPU load, memory usage, disk space, network traffic, and other host-level metrics.

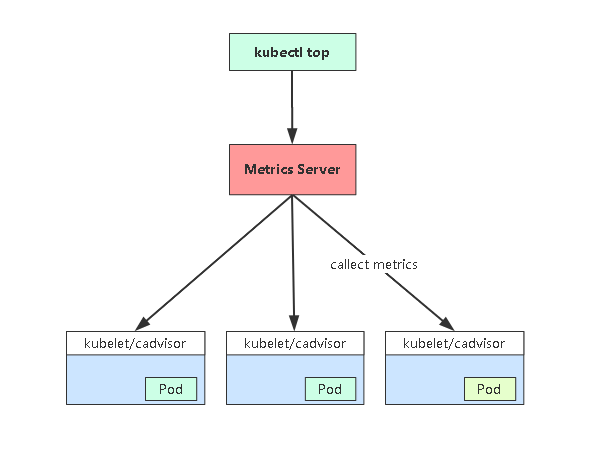

4. metrics-server

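4.1 What is Metrics Server

Metrics Server is a scalable, efficient source of container resource metrics for the autoscaling pipelines built into Kubernetes.

Metrics Server collects resource metrics from the Kubelets and exposes them in the Kubernetes apiserver through the Metrics API, for use by the Horizontal Pod Autoscaler and the Vertical Pod Autoscaler. The Metrics API can also be accessed with kubectl top, which makes it easier to debug autoscaling pipelines.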

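- Use cases

Autoscaling: combined with the Horizontal Pod Autoscaler (HPA), the number of replicas can be adjusted dynamically according to real-time load.

Health checks: periodically check whether the resource consumption of key services is normal, which helps identify potential problems.

Cost optimization: analyze long-running workloads to find places where resource requests can be reduced to save cost.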
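
To make the autoscaling use case more concrete, the sketch below applies the scaling rule given in the Kubernetes HPA documentation, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). It is a simplified illustration: the function name is made up, the 0.1 tolerance is assumed to match the documented default, and the real controller additionally handles min/max replica bounds, missing metrics, and stabilization, none of which is modeled here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    tolerance: float = 0.1,  # assumed to match the documented HPA default
) -> int:
    """Simplified HPA rule: ceil(currentReplicas * currentMetric / targetMetric)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Skip scaling when the ratio is close enough to 1.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


if __name__ == "__main__":
    # 4 replicas averaging 200m CPU against a 100m target.
    print(desired_replicas(4, 200.0, 100.0))
```

With 4 replicas at twice the target the sketch asks for 8 replicas, while a 5% deviation stays within the tolerance and leaves the replica count unchanged.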

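❌ Note

Metrics Server is meant only for autoscaling purposes. For example, do not use it to forward metrics to a monitoring solution, or as a source of metrics for one. In those cases, collect metrics directly from the Kubelet /metrics/resource endpoint.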

Checking cluster status

Check the status of the master (control-plane) components (kubectl get cs relies on the componentstatuses API, which is deprecated since Kubernetes v1.19):

    kubectl get cs

Check node status:

    kubectl get node

Show the URLs proxied by the API server:

    kubectl cluster-info

Dump detailed cluster information:

    kubectl cluster-info dump

Show the details of a resource:

    kubectl describe <resource> <name>

List resources:

    kubectl get <resource>

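Monitoring cluster resource utilization

Show node resource consumption:

    kubectl top node <node name>

Show Pod resource consumption:

    kubectl top pod <pod name>

Running these commands returns the error: error: Metrics API not available

This happens because the commands depend on data provided by the metrics-server service, which is not installed by default and has to be deployed manually.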

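Metrics Server workflow

- Metrics Server is a cluster-wide aggregator of resource usage data and is deployed in the cluster as an application. It collects metrics from the Kubelet API on every node and is registered with the master API server through the Kubernetes aggregation layer.

- It provides resource utilization metrics for Nodes and Pods in the cluster.

- Project repository: https://github.com/kubernetes-sigs/metrics-server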

4.2 Deploying Metrics Server

Deploying with YAML

Official download URL: https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.7/components.yaml

Example manifest

    [root@k8s-master01 ~]# vim metrics-server.yaml
    [root@k8s-master01 ~]# cat metrics-server.yaml
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: system:aggregated-metrics-reader
      labels:
        rbac.authorization.k8s.io/aggregate-to-view: "true"
        rbac.authorization.k8s.io/aggregate-to-edit: "true"
        rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rules:
    - apiGroups: ["metrics.k8s.io"]
      resources: ["pods", "nodes"]
      verbs: ["get", "list", "watch"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: metrics-server:system:auth-delegator
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:auth-delegator
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: metrics-server-auth-reader
      namespace: kube-system
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: extension-apiserver-authentication-reader
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: apiregistration.k8s.io/v1
    kind: APIService
    metadata:
      name: v1beta1.metrics.k8s.io
    spec:
      service:
        name: metrics-server
        namespace: kube-system
      group: metrics.k8s.io
      version: v1beta1
      insecureSkipTLSVerify: true
      groupPriorityMinimum: 100
      versionPriority: 100
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: metrics-server
      namespace: kube-system
      labels:
        k8s-app: metrics-server
    spec:
      selector:
        matchLabels:
          k8s-app: metrics-server
      template:
        metadata:
          name: metrics-server
          labels:
            k8s-app: metrics-server
        spec:
          serviceAccountName: metrics-server
          volumes:
          # mount in tmp so we can safely use from-scratch images and/or read-only containers
          - name: tmp-dir
            emptyDir: {}
          containers:
          - name: metrics-server
            image: lizhenliang/metrics-server:v0.3.7
            imagePullPolicy: IfNotPresent
            args:
              - --cert-dir=/tmp
              - --secure-port=4443
              - --kubelet-insecure-tls
              - --kubelet-preferred-address-types=InternalIP
            ports:
            - name: main-port
              containerPort: 4443
              protocol: TCP
            securityContext:
              readOnlyRootFilesystem: true
              runAsNonRoot: true
              runAsUser: 1000
            volumeMounts:
            - name: tmp-dir
              mountPath: /tmp
          nodeSelector:
            kubernetes.io/os: linux
            kubernetes.io/arch: "amd64"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: metrics-server
      namespace: kube-system
      labels:
        kubernetes.io/name: "Metrics-server"
        kubernetes.io/cluster-service: "true"
    spec:
      selector:
        k8s-app: metrics-server
      ports:
      - port: 443
        protocol: TCP
        targetPort: main-port
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: system:metrics-server
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      - nodes
      - nodes/stats
      - namespaces
      - configmaps
      verbs:
      - get
      - list
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: system:metrics-server
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:metrics-server
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system

Apply the manifest:

    [root@k8s-master01 ~]# kubectl apply -f metrics-server.yaml
    clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
    clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
    rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
    apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
    serviceaccount/metrics-server created
    deployment.apps/metrics-server created
    service/metrics-server created
    clusterrole.rbac.authorization.k8s.io/system:metrics-server created
    clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

Check whether the deployment succeeded:

    [root@k8s-master01 ~]# kubectl get apiservices |grep metrics
    v1beta1.metrics.k8s.io   kube-system/metrics-server   True   5m58s
    [root@k8s-master01 ~]# kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes
    {"kind":"NodeMetricsList","apiVersion":"metrics.k8s.io/v1beta1","metadata":{"selfLink":"/apis/metrics.k8s.io/v1beta1/nodes"},"items":
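
The raw NodeMetricsList returned by the Metrics API reports CPU in quantities such as nanocores and memory in units such as Ki, which is hard to read at a glance. The sketch below shows one way to turn such a response into millicores and MiB. The sample payload is hypothetical (only the node name is taken from the prompt above; the usage values, timestamp, and window are invented), and the helper names are illustrative.

```python
import json
from typing import Dict, Tuple

# Hypothetical sample response (assumption): usage values are made up.
SAMPLE = """
{"kind": "NodeMetricsList", "apiVersion": "metrics.k8s.io/v1beta1",
 "items": [{"metadata": {"name": "k8s-master01"},
            "timestamp": "2024-01-01T00:00:00Z", "window": "30s",
            "usage": {"cpu": "250000000n", "memory": "2097152Ki"}}]}
"""


def cpu_to_millicores(quantity: str) -> float:
    """Convert a CPU quantity (n, u, m suffix, or whole cores) to millicores."""
    if quantity.endswith("n"):
        return int(quantity[:-1]) / 1_000_000
    if quantity.endswith("u"):
        return int(quantity[:-1]) / 1_000
    if quantity.endswith("m"):
        return float(quantity[:-1])
    return float(quantity) * 1000


def memory_to_mib(quantity: str) -> float:
    """Convert a memory quantity (Ki, Mi, Gi suffix, or plain bytes) to MiB."""
    factors = {"Ki": 1 / 1024, "Mi": 1.0, "Gi": 1024.0}
    for suffix, factor in factors.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity) / (1024 * 1024)


def summarize(raw: str) -> Dict[str, Tuple[float, float]]:
    """Map each node name to (CPU in millicores, memory in MiB)."""
    doc = json.loads(raw)
    return {
        item["metadata"]["name"]: (
            cpu_to_millicores(item["usage"]["cpu"]),
            memory_to_mib(item["usage"]["memory"]),
        )
        for item in doc["items"]
    }


if __name__ == "__main__":
    for node, (cpu, mem) in summarize(SAMPLE).items():
        print(f"{node}: {cpu:.0f}m CPU, {mem:.0f}Mi memory")
```

In practice the input would be the output of the kubectl get --raw command shown above rather than the embedded sample.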

Deploying with Helm

1. Add the Helm repository

    helm repo add metrics-server https://kubernetes-sigs.github.io/metrics-server/

2. Download the chart and push it to Harbor

    $ helm pull metrics-server/metrics-server --version 3.12.1
    $ helm push metrics-server-3.12.1.tgz oci://core.xxx.com/plugins

3. Pull the chart package

    $ helm pull oci://core.jiaxzeng.com/plugins/metrics-server --version 3.12.1 --untar --untardir /etc/kubernetes/addons/

4. Create the metrics-server values file

    $ cat <<'EOF' | sudo tee /etc/kubernetes/addons/metrics-server-value.yaml > /dev/null
    image:
      repository: core.jiaxzeng.com/library/metrics-server
    args:
      - --kubelet-insecure-tls
    EOF

5. Install metrics-server

    helm -n kube-system install metrics-server -f /etc/kubernetes/addons/metrics-server-value.yaml /etc/kubernetes/addons/metrics-server

6. Verify the service

    $ kubectl top nodes

Official documentation: https://kubernetes.io/docs/tasks/debug/debug-cluster/resource-metrics-pipeline/#metrics-server