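1. Introduction to kube-state-metrics

kube-state-metrics is a Kubernetes add-on that queries the Kubernetes API server, collects state information about the resources in the cluster (nodes, pods, services, and so on), and converts that information into metrics that Prometheus can scrape.

Main functions of kube-state-metrics:

By listing and watching the Kubernetes API, kube-state-metrics exposes metrics about the following resource objects: CronJob, DaemonSet, Deployment, Job, LimitRange, Node, PersistentVolume, PersistentVolumeClaim, Pod, PodDisruptionBudget, ReplicaSet, ReplicationController, ResourceQuota, Service, StatefulSet, Namespace, HorizontalPodAutoscaler, Endpoint, Secret, ConfigMap, Ingress, and CertificateSigningRequest.

With kube-state-metrics you can conveniently monitor a Kubernetes cluster, detect problems, and raise early warnings.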
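As an illustration of the early-warning use case, the following is a minimal sketch of a Prometheus alerting rule built on a kube-state-metrics series. The file name, group name, alert name, `for` duration, and `severity` label are assumptions made for this example and are not part of the original setup; only the metric `kube_deployment_status_replicas_unavailable` comes from kube-state-metrics itself.

```yaml
# Hypothetical rule file (for example kube-state-alerts.yaml),
# referenced from rule_files in the Prometheus configuration.
groups:
- name: kube-state-metrics-example        # assumed group name
  rules:
  - alert: DeploymentReplicasUnavailable  # assumed alert name
    # Fires while a Deployment reports at least one unavailable replica.
    expr: kube_deployment_status_replicas_unavailable > 0
    for: 10m                              # assumed grace period
    labels:
      severity: warning                   # assumed label convention
    annotations:
      summary: "Deployment {{ $labels.namespace }}/{{ $labels.deployment }} has unavailable replicas"
```

The threshold and duration would need tuning for a real cluster; the point is only that object state (not resource usage) is what these metrics describe.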

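1.1 kube-state-metrics vs. metrics-server

metrics-server (like its predecessor Heapster) deals with resource usage metrics such as CPU and memory. Heapster forwarded them to a storage backend such as InfluxDB or a cloud vendor's service; metrics-server collects them from the kubelets and serves them through the Metrics API. Its core role today is to supply decision metrics to components such as the HPA.

kube-state-metrics focuses on the latest state of Kubernetes resources such as Deployments or DaemonSets. It was not folded into metrics-server because the two have fundamentally different concerns. metrics-server only fetches and formats existing data and hands it on, so it is essentially part of a monitoring pipeline. kube-state-metrics instead keeps an in-memory snapshot of the cluster state and derives new metrics from it, but it does not push those metrics anywhere itself; it only exposes them over HTTP for a scraper such as Prometheus.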

2. Deploying kube-state-metrics

The manifest contains eight kinds of objects: ServiceAccount, ClusterRole, Role, ClusterRoleBinding, RoleBinding, Deployment, ConfigMap, and Service.

apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-state-metrics
  namespace: monitor
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kube-state-metrics
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups: [""]
  resources:
  - configmaps
  - secrets
  - nodes
  - pods
  - services
  - resourcequotas
  - replicationcontrollers
  - limitranges
  - persistentvolumeclaims
  - persistentvolumes
  - namespaces
  - endpoints
  verbs: ["list", "watch"]
- apiGroups: ["apps"]
  resources:
  - statefulsets
  - daemonsets
  - deployments
  - replicasets
  verbs: ["list", "watch"]
- apiGroups: ["batch"]
  resources:
  - cronjobs
  - jobs
  verbs: ["list", "watch"]
- apiGroups: ["autoscaling"]
  resources:
  - horizontalpodautoscalers
  verbs: ["list", "watch"]
- apiGroups: ["networking.k8s.io", "extensions"]
  resources:
  - ingresses
  verbs: ["list", "watch"]
- apiGroups: ["storage.k8s.io"]
  resources:
  - storageclasses
  verbs: ["list", "watch"]
- apiGroups: ["certificates.k8s.io"]
  resources:
  - certificatesigningrequests
  verbs: ["list", "watch"]
- apiGroups: ["policy"]
  resources:
  - poddisruptionbudgets
  verbs: ["list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: kube-state-metrics-resizer
  namespace: monitor
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups: [""]
  resources:
  - pods
  verbs: ["get"]
- apiGroups: ["extensions","apps"]
  resources:
  - deployments
  resourceNames: ["kube-state-metrics"]
  verbs: ["get", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kube-state-metrics
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: monitor
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kube-state-metrics
  namespace: monitor
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kube-state-metrics-resizer
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: monitor
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kube-state-metrics
  namespace: monitor
  labels:
    k8s-app: kube-state-metrics
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    version: v1.3.0
spec:
  selector:
    matchLabels:
      k8s-app: kube-state-metrics
      version: v1.3.0
  replicas: 1
  template:
    metadata:
      labels:
        k8s-app: kube-state-metrics
        version: v1.3.0
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: kube-state-metrics
      containers:
      - name: kube-state-metrics
        #image: k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.4.2
        image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/kube-state-metrics:v2.4.2
        ports:
        - name: http-metrics   # port that exposes the Kubernetes object metrics
          containerPort: 8080
        - name: telemetry      # port that exposes kube-state-metrics' own metrics
          containerPort: 8081
        readinessProbe:
          httpGet:
            path: /healthz
            port: 8080
          initialDelaySeconds: 5
          timeoutSeconds: 5
      - name: addon-resizer    # addon-resizer scales in-cluster monitoring components such as metrics-server and kube-state-metrics
        image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/addon-resizer:v1.8.6
        #image: mirrorgooglecontainers/addon-resizer:1.8.6
        resources:
          limits:
            cpu: 200m
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 30Mi
        env:
        - name: MY_POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: MY_POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config
        command:
        - /pod_nanny
        - --config-dir=/etc/config
        - --container=kube-state-metrics
        - --cpu=100m
        - --extra-cpu=1m
        - --memory=100Mi
        - --extra-memory=2Mi
        - --threshold=5
        - --deployment=kube-state-metrics
      volumes:
      - name: config-volume
        configMap:
          name: kube-state-metrics-config
---
# Config map for resource configuration.
apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-state-metrics-config
  namespace: monitor
  labels:
    k8s-app: kube-state-metrics
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
data:
  NannyConfiguration: |-
    apiVersion: nannyconfig/v1alpha1
    kind: NannyConfiguration
---
apiVersion: v1
kind: Service
metadata:
  name: kube-state-metrics
  namespace: monitor
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "kube-state-metrics"
  annotations:
    prometheus.io/scrape: 'true'
spec:
  ports:
  - name: http-metrics
    port: 8080
    targetPort: http-metrics
    protocol: TCP
  - name: telemetry
    port: 8081
    targetPort: telemetry
    protocol: TCP
  selector:
    k8s-app: kube-state-metrics

- Create

kubectl apply -f kube-state-metrics.yaml

- Check

[root@kube-master Prometheus]# kubectl get pod -n monitor
NAME                                  READY   STATUS    RESTARTS   AGE
kube-state-metrics-69d459f598-7sf6l   2/2     Running   0          5m29s

- Verify

kubectl get all -nmonitor |grep kube-state-metrics
curl -kL $(kubectl get service -n monitor | grep kube-state-metrics |awk '{ print $3 }'):8080/metrics

3. Kubernetes cluster monitoring (automatic discovery)

- CentOS 7
- Kubernetes 1.22
- Prometheus 2.36.0
- node-exporter (latest)

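Service discovery roles

- node role

The node role discovers one target per cluster node, with the address defaulting to the kubelet's HTTP port. The target address is the first existing address of the node object, taken in the order NodeInternalIP, NodeExternalIP, NodeLegacyHostIP, and NodeHostName.

The meta labels available for this role are:

- __meta_kubernetes_node_name: the name of the node object.
- __meta_kubernetes_node_label_<labelname>: each label of the node object.
- __meta_kubernetes_node_labelpresent_<labelname>: set to true for each label of the node object.
- __meta_kubernetes_node_annotation_<annotationname>: each annotation of the node object.
- __meta_kubernetes_node_annotationpresent_<annotationname>: set to true for each annotation of the node object.
- __meta_kubernetes_node_address_<address_type>: the first address for each node address type, if it exists.

In addition, the instance label of the node is set to the node name as retrieved from the API server.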
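The node role can be sketched as a scrape job for the kubelet. This is an illustrative fragment under stated assumptions, not part of the original configuration: the job name is hypothetical, `insecure_skip_verify` assumes the kubelet serving certificates are not signed by the cluster CA, and the Prometheus ServiceAccount is assumed to have permission to read node metrics.

```yaml
- job_name: kubernetes-nodes            # hypothetical job name
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
    insecure_skip_verify: true          # assumption: kubelet certs are not signed by the cluster CA
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  # Copy every node label (__meta_kubernetes_node_label_<labelname>)
  # onto the scraped series under its plain label name.
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
```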

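- service role

The service role discovers one target for each service port of every service. The address is set to the Kubernetes DNS name of the service together with the respective service port.

The meta labels available for this role are:

- __meta_kubernetes_namespace: the namespace of the service object.
- __meta_kubernetes_service_annotation_<annotationname>: each annotation of the service object.
- __meta_kubernetes_service_annotationpresent_<annotationname>: set to true for each annotation of the service object.
- __meta_kubernetes_service_cluster_ip: the cluster IP of the service (not set for services of type ExternalName).
- __meta_kubernetes_service_external_name: the DNS name of the service (only set for services of type ExternalName).
- __meta_kubernetes_service_label_<labelname>: each label of the service object.
- __meta_kubernetes_service_labelpresent_<labelname>: set to true for each label of the service object.
- __meta_kubernetes_service_name: the name of the service object.
- __meta_kubernetes_service_port_name: the name of the service port for the target.
- __meta_kubernetes_service_port_protocol: the protocol of the service port for the target.
- __meta_kubernetes_service_type: the type of the service.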
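To show how these labels are used, here is a minimal sketch of a job that keeps only Services annotated with `prometheus.io/scrape: 'true'` (the annotation carried by the kube-state-metrics Service) and only their `http-metrics` port. The job name is hypothetical. Note the assumption that scraping through the Service DNS name is acceptable: requests are load-balanced across the backing pods, so this only makes sense for single-replica workloads.

```yaml
- job_name: annotated-services          # hypothetical job name
  kubernetes_sd_configs:
  - role: service
  relabel_configs:
  # Keep only Services that opted in through the annotation.
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
    action: keep
    regex: "true"
  # Keep only the service port named http-metrics.
  - source_labels: [__meta_kubernetes_service_port_name]
    action: keep
    regex: http-metrics
  - source_labels: [__meta_kubernetes_namespace]
    target_label: namespace
  - source_labels: [__meta_kubernetes_service_name]
    target_label: service
```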

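- pod role

The pod role discovers all pods and exposes their containers as targets. One target is generated for each declared port of a container. If a container declares no ports, a single port-free target is created for it.

The meta labels available for this role are:

- __meta_kubernetes_namespace: the namespace of the pod object.
- __meta_kubernetes_pod_name: the name of the pod object.
- __meta_kubernetes_pod_ip: the pod IP of the pod object.
- __meta_kubernetes_pod_label_<labelname>: each label of the pod object.
- __meta_kubernetes_pod_labelpresent_<labelname>: set to true for each label of the pod object.
- __meta_kubernetes_pod_annotation_<annotationname>: each annotation of the pod object.
- __meta_kubernetes_pod_annotationpresent_<annotationname>: set to true for each annotation of the pod object.
- __meta_kubernetes_pod_container_init: true if the container is an init container.
- __meta_kubernetes_pod_container_name: the name of the container the target address points to.
- __meta_kubernetes_pod_container_port_name: the name of the container port.
- __meta_kubernetes_pod_container_port_number: the number of the container port.
- __meta_kubernetes_pod_container_port_protocol: the protocol of the container port.
- __meta_kubernetes_pod_ready: true or false, according to the pod's ready state.
- __meta_kubernetes_pod_phase: the pod lifecycle phase: Pending, Running, Succeeded, Failed, or Unknown.
- __meta_kubernetes_pod_node_name: the name of the node the pod is scheduled onto.
- __meta_kubernetes_pod_host_ip: the IP of the node that hosts the pod.
- __meta_kubernetes_pod_uid: the UID of the pod object.
- __meta_kubernetes_pod_controller_kind: the object kind of the pod's controller.
- __meta_kubernetes_pod_controller_name: the name of the pod's controller.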
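A common way to combine these labels is annotation-driven pod scraping. The sketch below assumes pods opt in with the annotations `prometheus.io/scrape` and `prometheus.io/port`; that annotation convention and the job name are assumptions for illustration and are not used elsewhere in this article.

```yaml
- job_name: annotated-pods              # hypothetical job name
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  # Keep only pods annotated with prometheus.io/scrape: "true".
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
    action: keep
    regex: "true"
  # Rewrite the target address to use the port from prometheus.io/port.
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  - source_labels: [__meta_kubernetes_namespace]
    target_label: namespace
  - source_labels: [__meta_kubernetes_pod_name]
    target_label: pod
```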

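- endpoints role

The endpoints role discovers targets from the endpoints of a service. One target is generated per port for each endpoint address. If the endpoint is backed by a pod, all other container ports of that pod (including those not bound to an endpoint port) are discovered as targets as well.

The meta labels available for this role are:

- __meta_kubernetes_namespace: the namespace of the endpoints object.
- __meta_kubernetes_endpoints_name: the name of the endpoints object.

For all targets discovered directly from the endpoints list (not those inferred from the underlying pods), the following labels are attached:

- __meta_kubernetes_endpoint_hostname: the hostname of the endpoint.
- __meta_kubernetes_endpoint_node_name: the name of the node hosting the endpoint.
- __meta_kubernetes_endpoint_ready: true or false, according to the endpoint's ready state.
- __meta_kubernetes_endpoint_port_name: the name of the endpoint port.
- __meta_kubernetes_endpoint_port_protocol: the protocol of the endpoint port.
- __meta_kubernetes_endpoint_address_target_kind: the kind of the endpoint address target (typically Pod).
- __meta_kubernetes_endpoint_address_target_name: the name of the endpoint address target.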
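With the endpoints role, a single port can be selected by name instead of rewriting the address. The following is a sketch of that idea for the kube-state-metrics Service deployed in section 2 (namespace `monitor`, port name `http-metrics`); the job name is hypothetical and this is an alternative, not the configuration the article uses.

```yaml
- job_name: kube-state-metrics-by-port-name   # hypothetical variant
  kubernetes_sd_configs:
  - role: endpoints
  relabel_configs:
  # Keep only the http-metrics port of the kube-state-metrics Service.
  # Targets inferred from extra pod ports carry no endpoint_port_name
  # label, so this rule drops them as well.
  - source_labels:
    - __meta_kubernetes_namespace
    - __meta_kubernetes_service_name
    - __meta_kubernetes_endpoint_port_name
    action: keep
    regex: monitor;kube-state-metrics;http-metrics
  - source_labels: [__meta_kubernetes_pod_name]
    target_label: pod
```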

3.0 Adjusting ports

Add the following scrape jobs to prometheus-config.yaml one at a time.

Because the environment was installed with kubeadm, the control-plane components run as static pods whose manifests live in /etc/kubernetes/manifests/:

[root@kube-master test]# cd /etc/kubernetes/manifests/
[root@kube-master manifests]# ls
etcd.yaml  kube-apiserver.yaml  kube-controller-manager.yaml  kube-scheduler.yaml

Edit and save the files directly; the kubelet restarts the affected static pods automatically.

Ports Prometheus uses to reach each monitored component:

| Port | Monitored component | Notes |
|---|---|---|
| 6443 | apiserver | |
| 8080 | kube-state-metrics | |
| 9090 | prometheus | |
| 9093 | alertmanager | |
| 9100 | node-exporter | |
| 9153 | coredns | |
| 10249 | kube-proxy | kubectl edit cm/kube-proxy -n kube-system |
| 10250 | kubelet (also serves the cAdvisor container metrics) | Monitoring the kubelet, kube-controller-manager, and kube-scheduler with Prometheus |
| 10251 | scheduler | Monitoring the kubelet, kube-controller-manager, and kube-scheduler with Prometheus |
| 10257 | controller-manager | Monitoring the kubelet, kube-controller-manager, and kube-scheduler with Prometheus; since 1.22 the port is 10257 instead of 10252 |

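If kube-scheduler runs with the --port=0 argument, HTTP access is disabled and the default metrics cannot be scraped, so that argument must be commented out.

By default the components only accept connections on 127.0.0.1, so change 127.0.0.1 to 0.0.0.0.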
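The two changes just described might look as follows in the kubeadm static pod manifest. This is only a fragment sketched for illustration: the surrounding fields and remaining flags are assumed to stay exactly as kubeadm generated them.

```yaml
# /etc/kubernetes/manifests/kube-scheduler.yaml (fragment)
spec:
  containers:
  - command:
    - kube-scheduler
    - --bind-address=0.0.0.0    # was 127.0.0.1
    # - --port=0                # commented out so the HTTP metrics port stays enabled
    # ... other flags unchanged ...
```

The same `--bind-address` change applies to kube-controller-manager.yaml.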

3.1 kube-apiserver

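Note that access over https requires TLS settings: either point to the CA certificate or set insecure_skip_verify: true to skip certificate verification.

In addition, bearer_token_file must be specified; otherwise the scrape fails with "server returned HTTP status 400 Bad Request".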
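The two TLS options can be sketched as alternative fragments of a scrape job. The paths are the standard in-pod ServiceAccount mount locations; whether option B is acceptable depends on your security requirements, which is an assumption left to the reader.

```yaml
# Option A: verify the server certificate against the cluster CA.
scheme: https
tls_config:
  ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
---
# Option B: skip certificate verification (traffic is still encrypted).
scheme: https
tls_config:
  insecure_skip_verify: true
bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
```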

kube-apiserver listens on port 6443 of the node.

Create the scrape job

[root@kube-master prometheus]# kubectl describe svc kubernetes
Name:              kubernetes
Namespace:         default
Labels:            component=apiserver
                   provider=kubernetes
Annotations:       <none>
Selector:          <none>
Type:              ClusterIP
IP Family Policy:  SingleStack
IP Families:       IPv4
IP:                192.168.0.1
IPs:               192.168.0.1
Port:              https  443/TCP
TargetPort:        6443/TCP
Endpoints:         10.103.236.201:6443
Session Affinity:  None
Events:            <none>

The Service information shows that the apiserver Service is in the default namespace, carries the label component=apiserver, is accessed over https, and targets port 6443.

- job_name: kube-apiserver
  kubernetes_sd_configs:
  - role: endpoints
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  relabel_configs:
  - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name]
    action: keep
    regex: default;kubernetes
  - source_labels: [__meta_kubernetes_endpoints_name]
    action: replace
    target_label: endpoint
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: pod
  - source_labels: [__meta_kubernetes_service_name]
    action: replace
    target_label: service
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: namespace

3.2 controller-manager

kube-controller-manager listens on port 10257 of the node.

- job_name: kube-controller-manager
  metrics_path: /metrics
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
    insecure_skip_verify: true
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_label_component]
    regex: kube-controller-manager
    action: keep
  - source_labels: [__meta_kubernetes_pod_ip]
    regex: (.+)
    target_label: __address__
    replacement: ${1}:10257
  - source_labels: [__meta_kubernetes_endpoints_name]
    action: replace
    target_label: endpoint
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: pod
  - source_labels: [__meta_kubernetes_service_name]
    action: replace
    target_label: service
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: namespace

3.3 kube-scheduler

kube-scheduler listens on port 10251 of the node.

- job_name: kube-scheduler
  kubernetes_sd_configs:
  - role: pod
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_label_component]
    regex: kube-scheduler
    action: keep
  - source_labels: [__meta_kubernetes_pod_ip]
    regex: (.+)
    target_label: __address__
    replacement: ${1}:10251
  - source_labels: [__meta_kubernetes_endpoints_name]
    action: replace
    target_label: endpoint
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: pod
  - source_labels: [__meta_kubernetes_service_name]
    action: replace
    target_label: service
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: namespace

3.4 coredns

- Inspect the CoreDNS Service

[root@kube-master prometheus]# kubectl describe svc -n kube-system kube-dns
Name:              kube-dns
Namespace:         kube-system
Labels:            k8s-app=kube-dns
                   kubernetes.io/cluster-service=true
                   kubernetes.io/name=CoreDNS
Annotations:       prometheus.io/port: 9153
                   prometheus.io/scrape: true
Selector:          k8s-app=kube-dns
Type:              ClusterIP
IP Family Policy:  SingleStack
IP Families:       IPv4
IP:                192.168.0.10
IPs:               192.168.0.10
Port:              dns  53/UDP
TargetPort:        53/UDP
Endpoints:         172.25.244.198:53,172.25.244.202:53
Port:              dns-tcp  53/TCP
TargetPort:        53/TCP
Endpoints:         172.25.244.198:53,172.25.244.202:53
Port:              metrics  9153/TCP
TargetPort:        9153/TCP
Endpoints:         172.25.244.198:9153,172.25.244.202:9153
Session Affinity:  None
Events:            <none>

The Service carries the label k8s-app=kube-dns and exposes the metrics port 9153, so the scrape job only needs to match that label.

[root@kube-master test]# kubectl get services -n kube-system
NAME                      TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                  AGE
calico-typha              ClusterIP   192.168.110.47   <none>        5473/TCP                 77d
kube-controller-manager   ClusterIP   None             <none>        10257/TCP                5h10m
kube-dns                  ClusterIP   192.168.0.10     <none>        53/UDP,53/TCP,9153/TCP   78d

bash-5.1# curl kube-dns.kube-system.svc:9153/metrics
# HELP coredns_build_info A metric with a constant '1' value labeled by version, revision, and goversion from which CoreDNS was built.
# TYPE coredns_build_info gauge
coredns_build_info{goversion="go1.17.1",revision="13a9191",version="1.8.6"} 1
# HELP coredns_cache_entries The number of elements in the cache.
# TYPE coredns_cache_entries gauge
coredns_cache_entries{server="dns://:53",type="denial"} 333
coredns_cache_entries{server="dns://:53",type="success"} 63

- job_name: coredns
  kubernetes_sd_configs:
  - role: endpoints
  relabel_configs:
  - source_labels:
    - __meta_kubernetes_service_label_k8s_app
    regex: kube-dns
    action: keep
  - source_labels: [__meta_kubernetes_pod_ip]
    regex: (.+)
    target_label: __address__
    replacement: ${1}:9153
  - source_labels: [__meta_kubernetes_endpoints_name]
    action: replace
    target_label: endpoint
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: pod
  - source_labels: [__meta_kubernetes_service_name]
    action: replace
    target_label: service
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: namespace

3.5 etcd

- Inspect the etcd pod

[root@kube-master prometheus]# kubectl describe pod -n kube-system etcd-k8s-master01
Name:                 etcd-k8s-master01
Namespace:            kube-system
Priority:             2000001000
Priority Class Name:  system-node-critical
Node:                 k8s-master01/10.103.236.201
Start Time:           Sun, 30 Jun 2024 09:03:01 +0800
Labels:               component=etcd
                      tier=control-plane
...
    Command:
      etcd
      --advertise-client-urls=https://10.103.236.201:2379
      --cert-file=/etc/kubernetes/pki/etcd/server.crt
      --client-cert-auth=true
      --data-dir=/var/lib/etcd
      --experimental-initial-corrupt-check=true
      --initial-advertise-peer-urls=https://10.103.236.201:2380
      --initial-cluster=k8s-master01=https://10.103.236.201:2380
      --key-file=/etc/kubernetes/pki/etcd/server.key
      --listen-client-urls=https://127.0.0.1:2379,https://10.103.236.201:2379
      --listen-metrics-urls=http://0.0.0.0:2381
      --listen-peer-urls=https://10.103.236.201:2380
      --name=k8s-master01
      --peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt
      --peer-client-cert-auth=true
      --peer-key-file=/etc/kubernetes/pki/etcd/peer.key
      --peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
      --snapshot-count=10000
      --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
...

As shown above, the startup arguments include --listen-metrics-urls, which makes the metrics endpoint listen on port 2381 over plain http, so no certificate configuration is needed. This is much simpler than in earlier versions, which had to be accessed over https with the corresponding certificates. The default value is http://127.0.0.1:2381, however, so the manifest still has to be edited to use 0.0.0.0 (the output above already reflects that change).
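The manifest edit can be sketched as the fragment below. Only the `--listen-metrics-urls` line changes; everything else in the kubeadm-generated file is assumed to stay as it is.

```yaml
# /etc/kubernetes/manifests/etcd.yaml (fragment)
spec:
  containers:
  - command:
    - etcd
    # ... other flags unchanged ...
    - --listen-metrics-urls=http://0.0.0.0:2381   # was http://127.0.0.1:2381
```

Binding to 0.0.0.0 exposes the unauthenticated metrics endpoint on every interface of the node, so restricting access at the network level is worth considering.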

- job_name: etcd
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels:
    - __meta_kubernetes_pod_label_component
    regex: etcd
    action: keep
  - source_labels: [__meta_kubernetes_pod_ip]
    regex: (.+)
    target_label: __address__
    replacement: ${1}:2381
  - source_labels: [__meta_kubernetes_endpoints_name]
    action: replace
    target_label: endpoint
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: pod
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: namespace

3.6 kube-state-metrics

Write the Prometheus scrape job. Note that discovery matches both ports, 8080 and 8081, by default, so the address has to be pinned to port 8080 explicitly:

- job_name: kube-state-metrics
  kubernetes_sd_configs:
  - role: endpoints
  relabel_configs:
  - source_labels: [__meta_kubernetes_service_name]
    regex: kube-state-metrics
    action: keep
  - source_labels: [__meta_kubernetes_pod_ip]
    regex: (.+)
    target_label: __address__
    replacement: ${1}:8080
  - source_labels: [__meta_kubernetes_endpoints_name]
    action: replace
    target_label: endpoint
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: pod
  - source_labels: [__meta_kubernetes_service_name]
    action: replace
    target_label: service
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: namespace

Notes on some of the parameters:

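- kubernetes_sd_configs: enables Kubernetes dynamic service discovery.

- kubernetes_sd_configs.role: selects the discovery mode. Here it is endpoints, so Prometheus queries kube-apiserver for Endpoints objects and creates one target per endpoint address and port. The job above does not restrict the namespace; the keep rule on the Service name is what narrows the targets down to kube-state-metrics.

- kubernetes_sd_configs.namespaces: restricts endpoints discovery to the listed namespaces. It is not set in the job above, so all namespaces are searched.

- relabel_configs: rewrites the labels of the discovered targets before they are scraped.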
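To make the effect of the relabel rules more concrete, the following Python sketch mimics the first two rules of the kube-state-metrics job on plain dictionaries. It is only an approximation of Prometheus relabeling (Prometheus uses fully anchored RE2 expressions, imitated here with `re.fullmatch`); the function name `relabel_kube_state_metrics` and the sample label values are assumptions made up for illustration.

```python
import re


def relabel_kube_state_metrics(labels):
    """Approximate the keep rule and the address rewrite of the job above.

    Returns the rewritten label dict, or None when the target is dropped.
    """
    # Rule 1 (action: keep): drop targets whose Service name does not match.
    service = labels.get("__meta_kubernetes_service_name", "")
    if not re.fullmatch(r"kube-state-metrics", service):
        return None

    # Rule 2 (default action: replace): point __address__ at <pod IP>:8080.
    result = dict(labels)
    match = re.fullmatch(r"(.+)", labels.get("__meta_kubernetes_pod_ip", ""))
    if match:
        result["__address__"] = f"{match.group(1)}:8080"
    return result


# Hypothetical discovered target: this endpoint entry advertises port 8081.
target = {
    "__address__": "10.244.1.7:8081",
    "__meta_kubernetes_service_name": "kube-state-metrics",
    "__meta_kubernetes_pod_ip": "10.244.1.7",
}
print(relabel_kube_state_metrics(target)["__address__"])  # 10.244.1.7:8080
```

Because the address is rebuilt from the pod IP, both discovered ports collapse onto the same 8080 target, which is why the explicit port is needed.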

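Hot-reload Prometheus so that the updated ConfigMap takes effect (alternatively, wait for Prometheus to reload it automatically). The /-/reload endpoint is only available when Prometheus runs with --web.enable-lifecycle:

curl -XPOST http://prometheus.ikubernetes.net/-/reload

3.7 kube-proxy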

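kube-proxy exposes two ports by default. Port 10249 serves monitoring data in the Prometheus format at /metrics. The metrics endpoint binds to 127.0.0.1 by default, so edit the kube-proxy ConfigMap and set metricsBindAddress so that Prometheus can reach it: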

kubectl edit cm/kube-proxy -n kube-system

metricsBindAddress: "0.0.0.0:10249"

- Restart the pods

kubectl delete pod --force -n kube-system `kubectl get pod -n kube-system | grep kube-proxy | awk '{print $1}'`

or

kubectl delete pod -l k8s-app=kube-proxy -n kube-system

- Verify

[root@kube-master test]# curl -s http://localhost:10249/metrics | head -n 10
# HELP apiserver_audit_event_total [ALPHA] Counter of audit events generated and sent to the audit backend.
# TYPE apiserver_audit_event_total counter
apiserver_audit_event_total 0
# HELP apiserver_audit_requests_rejected_total [ALPHA] Counter of apiserver requests rejected due to an error in audit logging backend.
# TYPE apiserver_audit_requests_rejected_total counter
apiserver_audit_requests_rejected_total 0
# HELP go_gc_duration_seconds A summary of the pause duration of garbage collection cycles.
# TYPE go_gc_duration_seconds summary
go_gc_duration_seconds{quantile="0"} 3.2952e-05
go_gc_duration_seconds{quantile="0.25"} 4.5446e-05

Port 10256 is the health-check port. It serves the /healthz endpoint, and a request returns two timestamps:

[root@kube-master test]# curl -s http://localhost:10256/healthz | jq .
{
  "lastUpdated": "2024-06-27 09:49:35.832247962 +0000 UTC m=+3005.426431913",
  "currentTime": "2024-06-27 09:49:35.832247962 +0000 UTC m=+3005.426431913"
}

- Create a Service for kube-proxy

kube-proxy-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: kube-proxy
  namespace: kube-system
spec:
  selector:
    k8s-app: kube-proxy
  type: ClusterIP
  clusterIP: None  # headless Service: no cluster IP is allocated
  ports:
  - name: kube-proxy-metrics-port
    port: 10249
    targetPort: 10249

- Create the scrape job

- job_name: 'kube-proxy'
  metrics_path: /metrics
  scheme: http
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
    insecure_skip_verify: false
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  kubernetes_sd_configs:
  - role: endpoints
  relabel_configs:
  # keep only the endpoints of the kube-proxy Service created above
  - source_labels: [__meta_kubernetes_service_name]
    regex: kube-proxy
    action: keep
  # copy pod labels onto the scraped series
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)

- Hot-reload Prometheus so that the updated ConfigMap takes effect (alternatively, wait for Prometheus to reload it automatically):

curl -XPOST http://prometheus.ikubernetes.net/-/reload

4. cAdvisor

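cAdvisor main features:

1. It monitors the resource usage and performance of containers. It runs as a daemon that collects, aggregates, processes, and exports information about running containers.

2. cAdvisor supports Docker containers natively and aims to support other container types as far as possible.

3. Kubernetes already integrates it into the kubelet, so there is no need to deploy cAdvisor separately to expose information about the containers running on a node.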

4.1 kubelet

- Check the ports kubelet listens on

[root@kube-master prometheus]# ss -tunlp | grep kubelet
tcp    LISTEN   0   16384   127.0.0.1:37839   *:*      users:(("kubelet",pid=1178,fd=15))
tcp    LISTEN   0   16384   127.0.0.1:10248   *:*      users:(("kubelet",pid=1178,fd=31))
tcp    LISTEN   0   16384   [::]:10250        [::]:*   users:(("kubelet",pid=1178,fd=26))

kubelet runs on every node and listens on port 10250, so the node role is sufficient for service discovery.

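Because the kubelet already embeds cAdvisor, nothing extra needs to be deployed. Note that the container metrics are served at /metrics/cadvisor and must be scraped over HTTPS; setting insecure_skip_verify: true skips certificate verification.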
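As a rough illustration of which URL ends up being requested on each node, the sketch below combines a scheme, a target address, and a metrics path the way a scrape URL is composed. The helper name `scrape_url` and the node address `192.0.2.10` are assumptions for illustration only; 10250 is the kubelet port shown above.

```python
def scrape_url(scheme, address, metrics_path):
    """Compose a scrape URL from a target's scheme, address and metrics path."""
    # A path without a leading slash is treated as relative to the host root.
    if not metrics_path.startswith("/"):
        metrics_path = "/" + metrics_path
    return f"{scheme}://{address}{metrics_path}"


# Hypothetical node address discovered through the node role.
print(scrape_url("https", "192.0.2.10:10250", "/metrics/cadvisor"))
# https://192.0.2.10:10250/metrics/cadvisor
```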

- job_name: kubelet
  metrics_path: /metrics/cadvisor
  scheme: https
  tls_config:
    insecure_skip_verify: true
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  # copy node labels onto the scraped series
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - source_labels: [__meta_kubernetes_endpoints_name]
    action: replace
    target_label: endpoint
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: pod
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: namespace

Hot-reload Prometheus so that the updated ConfigMap takes effect:

curl -XPOST http://prometheus.ikubernetes.net/-/reload

5. node-exporter

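Node Exporter is the node-level collector provided by the Prometheus project. It gathers host metrics such as CPU frequency, disk I/O statistics, and available memory.

Because it has to run on every Kubernetes node, it is deployed as a DaemonSet: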

node-exporter.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: monitor
  labels:
    name: node-exporter
spec:
  selector:
    matchLabels:
      name: node-exporter
  template:
    metadata:
      labels:
        name: node-exporter
    spec:
      # share the host namespaces so the exporter sees the node itself
      hostPID: true
      hostIPC: true
      hostNetwork: true
      containers:
      - name: node-exporter
        #image: prom/node-exporter:latest
        image: registry.cn-zhangjiakou.aliyuncs.com/hsuing/node-exporter:latest
        ports:
        - containerPort: 9100
        resources:
          requests:
            cpu: 0.15
        securityContext:
          privileged: true
        args:
        - --path.procfs
        - /host/proc
        - --path.sysfs
        - /host/sys
        - --collector.filesystem.ignored-mount-points
        # the regex is passed without inner double quotes, which would otherwise become part of the pattern
        - '^/(sys|proc|dev|host|etc)($|/)'
        volumeMounts:
        - name: dev
          mountPath: /host/dev
        - name: proc
          mountPath: /host/proc
        - name: sys
          mountPath: /host/sys
        - name: rootfs
          mountPath: /rootfs
      # also schedule onto master nodes
      tolerations:
      - key: "node-role.kubernetes.io/master"
        operator: "Exists"
        effect: "NoSchedule"
      volumes:
      - name: proc
        hostPath:
          path: /proc
      - name: dev
        hostPath:
          path: /dev
      - name: sys
        hostPath:
          path: /sys
      - name: rootfs
        hostPath:
          path: /

- Create

kubectl apply -f node-exporter.yaml

- Check the pods

[root@kube-master Prometheus]# kubectl get pod -nmonitor
NAME                  READY   STATUS    RESTARTS   AGE
node-exporter-bbtbp   1/1     Running   0          47m
node-exporter-lhg62   1/1     Running   0          47m
node-exporter-ll2lb   1/1     Running   0          47m
node-exporter-xhfpq   1/1     Running   0          47m

- Verify

[root@kube-master Prometheus]# curl localhost:9100/metrics |grep cpu
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:-- --:--:-- --:--:--     0
# HELP node_cpu_guest_seconds_total Seconds the CPUs spent in guests (VMs) for each mode.
# TYPE node_cpu_guest_seconds_total counter
node_cpu_guest_seconds_total{cpu="0",mode="nice"} 0
node_cpu_guest_seconds_total{cpu="0",mode="user"} 0
node_cpu_guest_seconds_total{cpu="1",mode="nice"} 0
node_cpu_guest_seconds_total{cpu="1",mode="user"} 0
# HELP node_cpu_seconds_total Seconds the CPUs spent in each mode.
# TYPE node_cpu_seconds_total counter
node_cpu_seconds_total{cpu="0",mode="idle"} 21715.6
node_cpu_seconds_total{cpu="0",mode="iowait"} 3.48
node_cpu_seconds_total{cpu="0",mode="irq"} 0

5.1 k8s-node monitoring

- View the node-exporter pod details

[root@kube-master prometheus]# kubectl -nmonitor describe pod node-exporter-88fvb
Name:         node-exporter-88fvb
Namespace:    monitor
Priority:     0
Node:         kube-node03/10.103.236.204
Start Time:   Thu, 27 Jun 2024 22:22:31 +0800
Labels:       controller-revision-hash=ff498cd78
              name=node-exporter
              pod-template-generation=6
...
    Port:          9100/TCP
    Host Port:     9100/TCP
...

Add a new scrape job named k8s-nodes to prometheus-config.yaml.

node_exporter also runs on every node, so the node role can be used here as well. The discovered address defaults to the kubelet port 10250, which is rewritten to 9100.
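The port rewrite described above can be tried out in Python before touching the Prometheus configuration. This is a minimal sketch that imitates a replace rule with regex `(.*):10250` and replacement `${1}:9100`; the function name `rewrite_node_address` is hypothetical, and `re.fullmatch` stands in for Prometheus's anchored matching.

```python
import re


def rewrite_node_address(address):
    """Imitate: regex '(.*):10250', replacement '${1}:9100', target __address__."""
    match = re.fullmatch(r"(.*):10250", address)
    # When the regex does not match, a replace rule leaves the label untouched.
    return f"{match.group(1)}:9100" if match else address


print(rewrite_node_address("10.103.236.204:10250"))  # 10.103.236.204:9100
print(rewrite_node_address("10.103.236.204:9100"))   # 10.103.236.204:9100 (unchanged)
```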

- job_name: k8s-nodes
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  # swap the kubelet port for the node-exporter port
  - source_labels: [__address__]
    regex: '(.*):10250'
    replacement: '${1}:9100'
    target_label: __address__
    action: replace
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - source_labels: [__meta_kubernetes_endpoints_name]
    action: replace
    target_label: endpoint
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: pod
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: namespace

Hot-reload Prometheus so that the updated ConfigMap takes effect:

curl -XPOST http://prometheus.ikubernetes.net/-/reload

或者

curl -XPOST `kubectl get svc -n monitor prometheus|grep -v NAME |awk '{print $3}'`:9090/-/reloadcurl -XPOST http://prometheus.ikubernetes.net/-/reload

或者

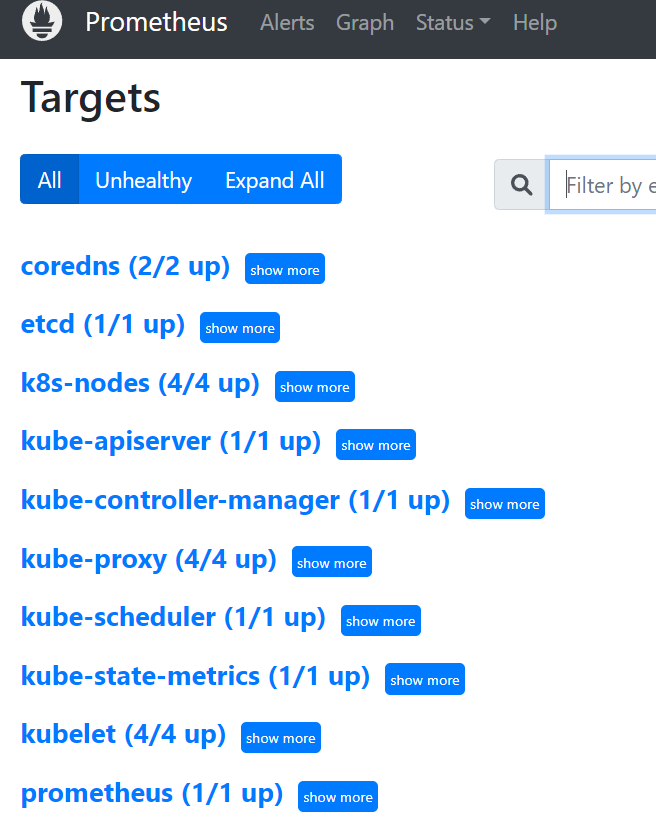

curl -XPOST `kubectl get svc -n monitor prometheus|grep -v NAME |awk '{print $3}'`:9090/-/reload6. 最终效果图

7. Pod monitoring

7.1 Discovering metrics by Service annotation

Targets are filtered by Service: only endpoints whose Service carries the annotation prometheus.io/scrape: "true" are kept.
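The filter can be pictured with a short Python sketch. It assumes the usual naming scheme in which a Service annotation key is exposed as a `__meta_kubernetes_service_annotation_<name>` label with unsupported characters replaced by underscores; the function names and the sample targets are made up for illustration.

```python
import re


def service_annotation_label(annotation):
    """Return the meta label name under which a Service annotation is exposed."""
    # Characters that are not valid in a label name become underscores.
    sanitized = re.sub(r"[^a-zA-Z0-9_]", "_", annotation)
    return "__meta_kubernetes_service_annotation_" + sanitized


def keep_scraped(targets):
    """Imitate `action: keep` with `regex: true` on the scrape annotation."""
    label = service_annotation_label("prometheus.io/scrape")
    return [t for t in targets if re.fullmatch(r"true", t.get(label, ""))]


# Hypothetical discovered targets: only app-a carries the annotation.
targets = [
    {"__meta_kubernetes_service_name": "app-a",
     "__meta_kubernetes_service_annotation_prometheus_io_scrape": "true"},
    {"__meta_kubernetes_service_name": "app-b"},
]
print(service_annotation_label("prometheus.io/scrape"))
print([t["__meta_kubernetes_service_name"] for t in keep_scraped(targets)])  # ['app-a']
```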

- Create the scrape job

- job_name: 'kubernetes-service-endpoints'
  kubernetes_sd_configs:
  - role: endpoints
  relabel_configs:
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
    action: keep
    regex: true
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
    action: replace
    target_label: __scheme__
    regex: (https?)
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
    action: replace
    target_label: __address__
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
  - action: labelmap
    regex: __meta_kubernetes_service_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_service_name]
    action: replace
    target_label: kubernetes_name
  - source_labels: [__meta_kubernetes_endpoints_name]
    target_label: job

7.1.2 Dynamic discovery by Service

This is an alternative, commented form of the same job. Job names must be unique within one Prometheus configuration, so use only one of the two variants.

- job_name: "kubernetes-service-endpoints"
  kubernetes_sd_configs:
  - role: endpoints
  relabel_configs:
  # prometheus relabel to scrape only endpoints that have
  # `prometheus.io/scrape: "true"` annotation.
  - source_labels:
    - __meta_kubernetes_service_annotation_prometheus_io_scrape
    action: keep
    regex: true
  # prometheus relabel to customize metric path based on endpoints
  # `prometheus.io/path: <metric path>` annotation.
  - source_labels:
    - __meta_kubernetes_service_annotation_prometheus_io_path
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  # prometheus relabel to scrape only single, desired port for the service based
  # on endpoints `prometheus.io/port: <port>` annotation.
  - source_labels:
    - __address__
    - __meta_kubernetes_service_annotation_prometheus_io_port
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  # prometheus relabel to configure scrape scheme for all service scrape targets
  # based on endpoints `prometheus.io/scheme: <scheme>` annotation.
  - source_labels:
    - __meta_kubernetes_service_annotation_prometheus_io_scheme
    action: replace
    target_label: __scheme__
    regex: (https?)
  - action: labelmap
    regex: __meta_kubernetes_service_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: namespace
  - source_labels: [__meta_kubernetes_service_name]
    action: replace
    target_label: service

7.2 Dynamic scraping by Pod annotation

- job_name: "kubernetes-pods"
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  # prometheus relabel to scrape only pods that have
  # `prometheus.io/scrape: "true"` annotation.
  - source_labels:
    - __meta_kubernetes_pod_annotation_prometheus_io_scrape
    action: keep
    regex: true
  # prometheus relabel to customize metric path based on pod
  # `prometheus.io/path: <metric path>` annotation.
  - source_labels:
    - __meta_kubernetes_pod_annotation_prometheus_io_path
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  # prometheus relabel to scrape only single, desired port for the pod
  # based on pod `prometheus.io/port: <port>` annotation.
  - source_labels:
    - __address__
    - __meta_kubernetes_pod_annotation_prometheus_io_port
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: pod

- Add the annotations

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-local-dns
  namespace: kube-system
  labels:
    k8s-app: node-local-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  updateStrategy:
    rollingUpdate:
      maxUnavailable: 10%
  selector:
    matchLabels:
      k8s-app: node-local-dns
  template:
    metadata:
      labels:
        k8s-app: node-local-dns
      annotations:
        prometheus.io/port: "9253"
        prometheus.io/scrape: "true"
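How the `prometheus.io/port: "9253"` annotation ends up in the scrape address can be sketched in Python. The snippet imitates the `__address__` rule of the kubernetes-pods job: the two source labels are joined with `;` (the default separator) and matched against `([^:]+)(?::\d+)?;(\d+)`. The function name `rewrite_pod_address` and the pod IP are assumptions for illustration, and `fullmatch` approximates Prometheus's anchored regexes.

```python
import re

# Same expression as in the relabel rule: host, optional port, ";", annotated port.
ADDRESS_PORT = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def rewrite_pod_address(address, port_annotation):
    """Imitate the __address__ rewrite driven by the prometheus.io/port annotation."""
    # Prometheus joins the values of source_labels with ";" before matching.
    joined = f"{address};{port_annotation}"
    match = ADDRESS_PORT.fullmatch(joined)
    if match is None:
        # No usable port annotation: the replace rule leaves __address__ as it is.
        return address
    return f"{match.group(1)}:{match.group(2)}"


# Hypothetical pod IP; 9253 is the port from the annotation above.
print(rewrite_pod_address("10.244.2.15:53", "9253"))  # 10.244.2.15:9253
print(rewrite_pod_address("10.244.2.15:53", ""))      # 10.244.2.15:53
```

The optional `(?::\d+)?` group is what lets the same rule handle discovered addresses with or without a container port.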