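MongoDB can be deployed as a standalone instance, as a replica set (primary/secondary), as a sharded cluster, or as a combination of replica sets and sharding.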

1. Sharded cluster

Official documentation: https://docs.mongodb.com/manual/sharding/

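Sharding is the process of splitting data and distributing it across different machines. The term partitioning is sometimes used for the same idea. Spreading the data over several machines lets you store more data and handle a larger workload without needing one powerful, expensive server.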

The goal of sharding is to absorb ever-growing load and data volume by adding more machines, without disrupting the running application.

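MongoDB supports automatic sharding, which removes the burden of managing shards by hand: the cluster splits the data and balances the load on its own. The basic idea is to split a collection into chunks and distribute those chunks across several shards, so that each shard holds only part of the total data. The application does not need to know which shard holds which data, or even that the data is split at all. To make this possible, a router process, mongos, runs in front of the shards. The router knows where every piece of data is stored, so the application talks only to mongos, which forwards each request to the appropriate shard(s). From the application's point of view, a sharded deployment looks the same as an unsharded one.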

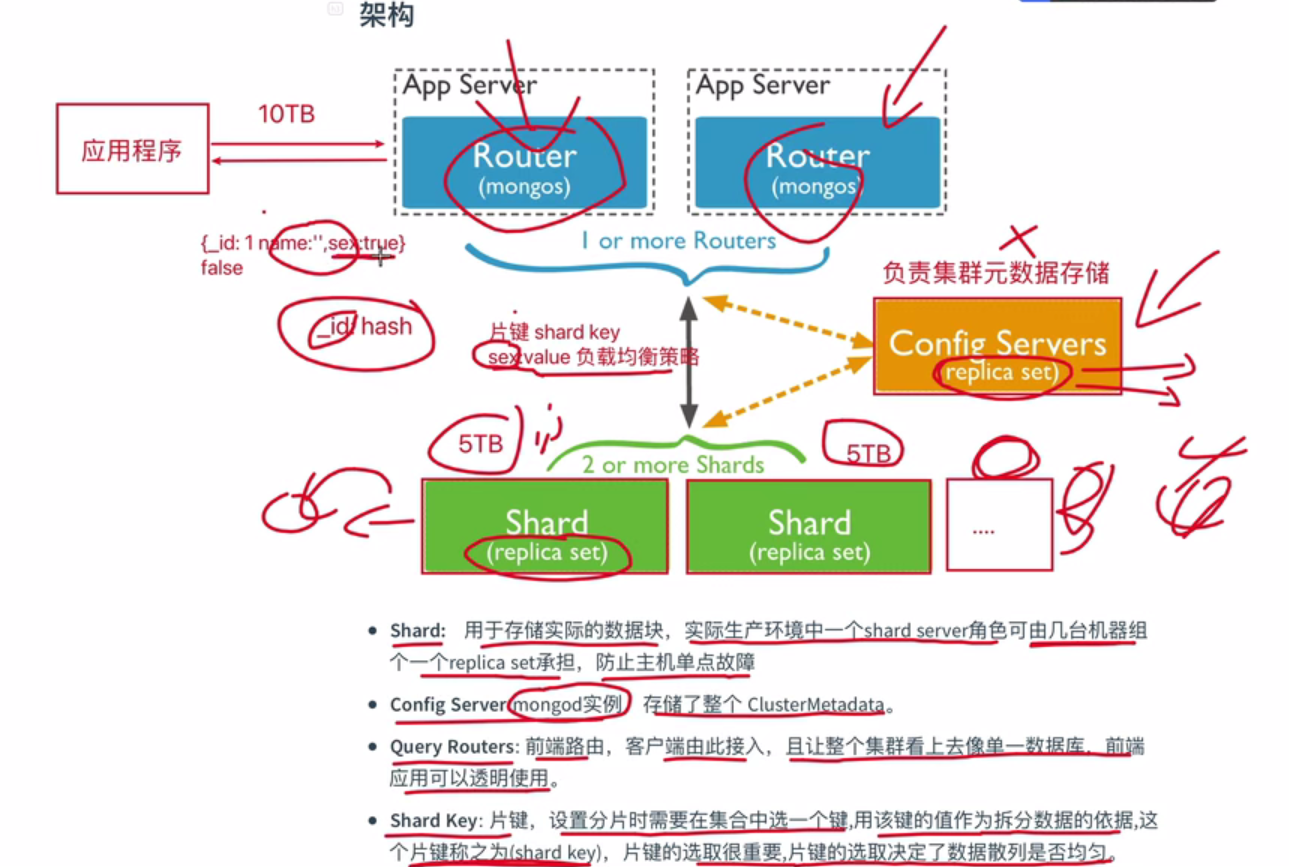

Architecture diagram:

Components

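| Component | Description |
|---|---|
| Config Server | Stores the cluster metadata: all nodes and the routing information for the sharded data. Three config server nodes are deployed by default. Starting with MongoDB 3.4, config servers must be deployed as a replica set (CSRS). |
| Mongos | Acts as the query router, providing the interface between client applications and the sharded cluster. |
| Shard (mongod) | Stores the application data. There are usually several shards, which is what distributes the data. |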

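Mongos itself does not persist any data. All metadata of the sharded cluster is stored on the config servers, while user data is distributed across the shards. When mongos starts, it loads the metadata from the config servers and then begins serving requests, routing each one to the correct shard.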
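To make the routing idea concrete, here is a minimal sketch of the lookup mongos performs, assuming a hypothetical chunk table in which each chunk owns a half-open range `[min, max)` of shard-key values and lives on one shard. Real mongos caches this metadata from the config servers (the `config.chunks` collection) instead of using a plain array.

```javascript
// Hypothetical routing table: each chunk owns the half-open range [min, max)
// of shard-key values and lives on exactly one shard.
const chunks = [
  { min: -Infinity, max: 100, shard: "shard1" },
  { min: 100, max: 200, shard: "shard2" },
  { min: 200, max: Infinity, shard: "shard3" },
];

// Equality match on the shard key: exactly one chunk, so one shard, is targeted.
function routeKey(key) {
  const chunk = chunks.find((c) => key >= c.min && key < c.max);
  return chunk.shard;
}

// Range query [lo, hi): every chunk that overlaps the range is targeted.
function routeRange(lo, hi) {
  const shards = chunks
    .filter((c) => c.min < hi && lo < c.max)
    .map((c) => c.shard);
  return [...new Set(shards)]; // a shard owning several chunks is asked once
}

console.log(routeKey(150));       // shard2
console.log(routeRange(50, 250)); // [ 'shard1', 'shard2', 'shard3' ]
```

A query that does not include the shard key cannot be narrowed this way, so mongos has to broadcast it to every shard.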

Data distribution in the cluster

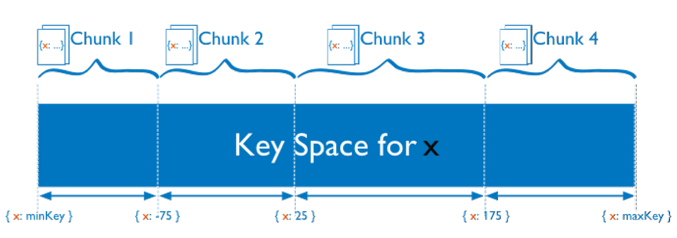

What is a chunk

Within each shard server, MongoDB further divides the data into chunks; each chunk holds a portion of that shard's data. Chunks serve the following two purposes:

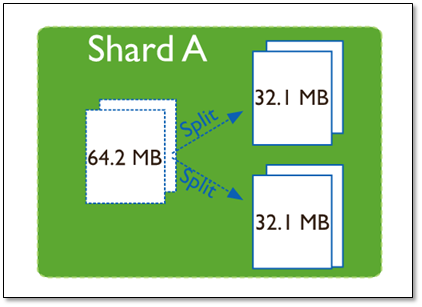

Splitting: when a chunk grows beyond the configured chunk size, MongoDB automatically splits it into smaller chunks so that no chunk becomes too large.
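A minimal sketch of the split rule, assuming an in-memory chunk whose documents carry a shard-key value and a size in bytes, and assuming the split point is the median key (mongod chooses split points in its own way). It also shows the jumbo case described later: when every document has the same key, no split point exists.

```javascript
// A chunk is { min, max, docs }, where docs are { key, size } with size in bytes.
// Returns [chunk] if it is small enough, otherwise the chunks produced by
// splitting it (recursively) at the median shard-key value.
function splitIfNeeded(chunk, maxChunkBytes) {
  const total = chunk.docs.reduce((sum, d) => sum + d.size, 0);
  if (total <= maxChunkBytes) return [chunk];

  const keys = chunk.docs.map((d) => d.key).sort((a, b) => a - b);
  let splitKey = keys[Math.floor(keys.length / 2)];
  if (splitKey === keys[0]) {
    // More than half the documents share the smallest key: split just above it.
    splitKey = keys.find((k) => k > keys[0]);
  }
  // Every document has the same key: no split point exists ("jumbo" chunk).
  if (splitKey === undefined) return [{ ...chunk, jumbo: true }];

  const left = { min: chunk.min, max: splitKey, docs: chunk.docs.filter((d) => d.key < splitKey) };
  const right = { min: splitKey, max: chunk.max, docs: chunk.docs.filter((d) => d.key >= splitKey) };
  return [...splitIfNeeded(left, maxChunkBytes), ...splitIfNeeded(right, maxChunkBytes)];
}

// Ten 10-byte documents with keys 0..9 and a deliberately tiny 40-byte limit
// (the real default is 64 MB in MongoDB 5.0).
const docs = Array.from({ length: 10 }, (_, k) => ({ key: k, size: 10 }));
const chunks = splitIfNeeded({ min: -Infinity, max: Infinity, docs }, 40);
console.log(chunks.map((c) => [c.min, c.max, c.docs.length]));
```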

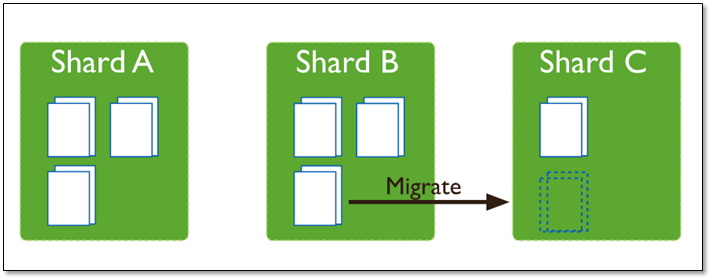

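Balancing: the balancer is a MongoDB background process that migrates chunks to even out the load across the shard servers. A collection starts with a single chunk, and the default chunk size is 64 MB (in MongoDB 5.0; from 6.0 the default is 128 MB). In production it is best to choose a chunk size that suits the workload. MongoDB splits and migrates chunks automatically.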

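Data distribution in a sharded cluster (shard nodes)

(1) Data is stored in chunks.

(2) Once the cluster is set up, it starts with a single chunk, 64 MB in size by default.

(3) When the data outgrows 64 MB, the chunk splits. If the write volume per unit of time is very high, configure a larger chunk size.

(4) Chunks are migrated automatically to keep the shards balanced.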

Choosing chunkSize

Chunk splitting and migration are very I/O-intensive. Splits are triggered only by inserts and updates; reads never cause a split.

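Choosing chunkSize:

- Small chunkSize: balancing migrations are fast and data is spread more evenly, but splits are frequent and the routing nodes spend more resources.
- Large chunkSize: fewer splits, but each chunk migration concentrates a large burst of I/O.

A chunkSize of 100-200 MB is common.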

Chunk splitting and migration

As data grows and a chunk exceeds the configured chunk size (64 MB by default), the chunk splits into two. Continued growth produces more and more chunks.

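The number of chunks on each shard then becomes uneven, and the balancer rebalances automatically by moving chunks from the shard with the most chunks to the shard with the fewest. (The balancer is often described as a mongos component, but since MongoDB 3.4 it runs on the primary of the config server replica set.)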
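A minimal simulation of count-based balancing as described above: once the gap between the fullest and emptiest shard reaches a migration threshold, chunks are moved one at a time from the shard with the most chunks to the one with the fewest. The thresholds (2, 4 or 8 depending on the total chunk count) and the "stop when no two shards differ by 2 or more" rule are assumptions based on the pre-6.0 documentation as I recall it; MongoDB 6.0 and later balance by data size instead.

```javascript
// Migration threshold by total number of chunks in the collection
// (assumed values for pre-6.0 releases).
function migrationThreshold(totalChunks) {
  if (totalChunks < 20) return 2;
  if (totalChunks < 80) return 4;
  return 8;
}

// Names of the shards with the fewest and the most chunks.
function extremes(counts) {
  const names = Object.keys(counts).sort((a, b) => counts[a] - counts[b]);
  return { fewest: names[0], most: names[names.length - 1] };
}

// counts maps shard name -> chunk count and is updated in place.
// Returns the list of simulated migrations.
function balance(counts) {
  const total = Object.values(counts).reduce((a, b) => a + b, 0);
  let { fewest, most } = extremes(counts);
  // A balancing round starts only once the imbalance reaches the threshold...
  if (counts[most] - counts[fewest] < migrationThreshold(total)) return [];
  const moves = [];
  // ...then moves one chunk at a time until no two shards differ by 2 or more.
  while (counts[most] - counts[fewest] >= 2) {
    counts[most] -= 1;
    counts[fewest] += 1;
    moves.push({ from: most, to: fewest });
    ({ fewest, most } = extremes(counts));
  }
  return moves;
}

const counts = { shard1: 9, shard2: 0, shard3: 0 };
const moves = balance(counts);
console.log(moves.length, counts); // 6 { shard1: 3, shard2: 3, shard3: 3 }
```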

How chunkSize affects splitting and migration

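MongoDB's default chunkSize is 64 MB; unless you have a specific need, keep the default. chunkSize directly affects how chunks split and migrate.

The smaller the chunkSize, the more splits and migrations occur and the more evenly data is distributed. Conversely, a larger chunkSize means fewer splits and migrations, but the data may be distributed less evenly.

If chunkSize is too small, jumbo chunks become likely: when one shard key value occurs very frequently, all of those documents must stay in a single chunk that cannot be split any further, so it cannot be migrated. If chunkSize is too large, a chunk may contain too many documents (a chunk can contain at most 250,000 documents to be migrated), so it cannot be migrated either.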
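Both failure modes can be checked with simple arithmetic. A sketch, assuming a uniform average document size: dividing chunkSize by that size estimates how many documents a full chunk holds, which is compared with the 250,000-document limit quoted above. The chunk-count helper shows the other side of the trade-off: halving chunkSize doubles the number of chunks to track and balance.

```javascript
const MAX_DOCS_PER_CHUNK = 250000; // limit quoted in the text above

// Roughly how many documents fit in a full chunk of the given size.
function docsPerFullChunk(chunkSizeMB, avgDocBytes) {
  return Math.floor((chunkSizeMB * 1024 * 1024) / avgDocBytes);
}

// True if a full chunk would hold more documents than the migration limit.
function exceedsDocLimit(chunkSizeMB, avgDocBytes) {
  return docsPerFullChunk(chunkSizeMB, avgDocBytes) > MAX_DOCS_PER_CHUNK;
}

// Approximate number of chunks for a collection of dataSizeGB.
function approxChunkCount(dataSizeGB, chunkSizeMB) {
  return Math.ceil((dataSizeGB * 1024) / chunkSizeMB);
}

console.log(docsPerFullChunk(64, 200));  // 335544
console.log(exceedsDocLimit(64, 200));   // true
console.log(docsPerFullChunk(64, 1024)); // 65536
console.log(approxChunkCount(100, 64));  // 1600
console.log(approxChunkCount(100, 128)); // 800
```

With 200-byte documents a full 64 MB chunk would exceed the limit, so small documents combined with a large chunkSize are the case to watch.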

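Automatic splitting is triggered only by writes, so after lowering chunkSize it takes some time for the system to split existing chunks down to the new size.

Chunks are split but never merged automatically, so raising chunkSize does not reduce the number of existing chunks; instead, chunks keep growing with new writes until they reach the new size.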

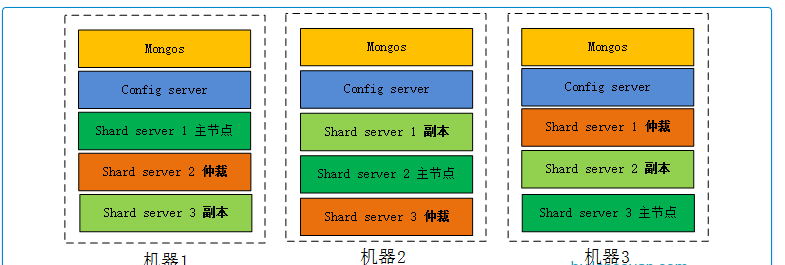

2. Sharded deployment

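- Environment

  - MongoDB 5.0.5

  - CentOS 8

  - Three hosts: 192.168.122.14, 192.168.122.244 and 192.168.122.246, each running one mongos, one config server and one member of each shard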

Port allocation

mongos:20000

config:21000

shard1:27001

shard2:27002

shard3:27003
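The port plan above, combined with the three hosts used throughout this walkthrough, fixes every replica-set member list and seed string used later. A small sketch that derives them so the `rs.initiate()` member lists, the mongos `sharding.configDB` value and the `sh.addShard()` arguments stay consistent; `members` and `seedList` are hypothetical helper names.

```javascript
const hosts = ["192.168.122.14", "192.168.122.244", "192.168.122.246"];
const ports = { mongos: 20000, config: 21000, shard1: 27001, shard2: 27002, shard3: 27003 };

// Member list for rs.initiate(): one member per host on the component's port.
function members(component) {
  return hosts.map((h, i) => ({ _id: i, host: `${h}:${ports[component]}` }));
}

// "<replSetName>/<host:port>,..." seed list, the format used by
// sharding.configDB and sh.addShard().
function seedList(replSetName, component) {
  return `${replSetName}/` + hosts.map((h) => `${h}:${ports[component]}`).join(",");
}

const configRS = { _id: "configRS", configsvr: true, members: members("config") };
const configDB = seedList("configRS", "config");
const shardSeeds = ["shard1", "shard2", "shard3"].map((s) => seedList(s, s));

console.log(JSON.stringify(configRS, null, 2));
console.log(configDB);
console.log(shardSeeds.join("\n"));
```

The printed `configRS` document can be pasted into `rs.initiate()`, and `configDB` matches the value used in the mongos configuration below.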

On each machine, create five directories: mongos, config, shard1, shard2 and shard3. Because mongos stores no data, it only needs a log directory.

```shell
mkdir -p /data/mongodb_data/mongos/log
mkdir -p /data/mongodb_data/config/{data,log}
mkdir -p /data/mongodb_data/shard1/{data,log}
mkdir -p /data/mongodb_data/shard2/{data,log}
mkdir -p /data/mongodb_data/shard3/{data,log}
```

- Configuration file

```yaml
systemLog:
  quiet: false
  path: "/data/mongodb_data/config/log/config.log"
  logAppend: true
  logRotate: rename
  destination: file
  timeStampFormat: iso8601-local
processManagement:
  fork: true
  pidFilePath: "/data/mongodb_data/config/mongod.pid"
  timeZoneInfo: /usr/share/zoneinfo
storage:
  dbPath: "/data/mongodb_data/config/data"
  journal:
    enabled: true
    commitIntervalMs: 100
  directoryPerDB: true
  syncPeriodSecs: 60
  engine: wiredTiger
  wiredTiger:
    engineConfig:
      # Four mongod processes share each host; size this so their total fits in RAM
      cacheSizeGB: 12
      statisticsLogDelaySecs: 0
      journalCompressor: snappy
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: snappy
    indexConfig:
      prefixCompression: true
net:
  bindIp: 192.168.122.244,127.0.0.1  # use each host's own IP
  port: 21000
  maxIncomingConnections: 52528
  wireObjectCheck: true
  ipv6: false
  unixDomainSocket:
    enabled: false
operationProfiling:
  slowOpThresholdMs: 200
  mode: slowOp
security:
  authorization: disabled
  #clusterAuthMode: keyFile
  #keyFile: "/data/mongodb_data/keyfile"
  javascriptEnabled: true
setParameter:
  enableLocalhostAuthBypass: true
  authenticationMechanisms: SCRAM-SHA-1
replication:
  oplogSizeMB: 5120
  replSetName: configRS
sharding:
  clusterRole: configsvr
  archiveMovedChunks: true
```

- Start

```shell
# Start the corresponding service, passing the path of its configuration file
mongod -f <path-to-config-file>
```

Config server initialization

- Configuration

```
[root@master config]# cat config.conf
systemLog:
  quiet: false
  path: "/data/mongodb_data/config/log/config.log"
  logAppend: true
  logRotate: rename
  destination: file
  timeStampFormat: iso8601-local
processManagement:
  fork: true
  pidFilePath: "/data/mongodb_data/config/mongod.pid"
  timeZoneInfo: /usr/share/zoneinfo
storage:
  dbPath: "/data/mongodb_data/config/data"
  journal:
    enabled: true
    commitIntervalMs: 100
  directoryPerDB: true
  syncPeriodSecs: 60
  engine: wiredTiger
  wiredTiger:
    engineConfig:
      cacheSizeGB: 12
      statisticsLogDelaySecs: 0
      journalCompressor: snappy
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: snappy
    indexConfig:
      prefixCompression: true
net:
  bindIp: 192.168.122.246,127.0.0.1
  port: 21000
  maxIncomingConnections: 52528
  wireObjectCheck: true
  ipv6: false
  unixDomainSocket:
    enabled: false
operationProfiling:
  slowOpThresholdMs: 200
  mode: slowOp
security:
  authorization: disabled
  #clusterAuthMode: keyFile
  #keyFile: "/data/mongodb_data/keyfile"
  javascriptEnabled: true
setParameter:
  enableLocalhostAuthBypass: true
  authenticationMechanisms: SCRAM-SHA-1
replication:
  oplogSizeMB: 5120
  replSetName: configRS
sharding:
  clusterRole: configsvr
  archiveMovedChunks: true
```

Log in to any one of the config servers and initiate the config server replica set:

configRS is the replica set name; it must match `replication.replSetName` in the configuration file.

```
[root@slave01 config]# mongo 192.168.122.14:21000
> config = {
    _id : "configRS",
    configsvr: true,
    members : [
        {_id : 0, host : "192.168.122.14:21000" },
        {_id : 1, host : "192.168.122.244:21000" },
        {_id : 2, host : "192.168.122.246:21000" }
    ]
}
// Initiate the replica set
> rs.initiate(config)
// Or, in a single call (configsvr: true is required for a config server replica set)
> rs.initiate( {
    _id : "configRS",
    configsvr: true,
    members: [
        { _id: 0, host: "192.168.122.244:21000" },
        { _id: 1, host: "192.168.122.246:21000" },
        { _id: 2, host: "192.168.122.14:21000" }
    ]
})
```

Shard replica set configuration

- Configuration

```
[root@master shard1]# cat share1.conf
systemLog:
  quiet: false
  path: "/data/mongodb_data/shard1/log/share1.log"
  logAppend: true
  logRotate: rename
  destination: file
  timeStampFormat: iso8601-local
processManagement:
  fork: true
  pidFilePath: "/data/mongodb_data/shard1/mongod.pid"
  timeZoneInfo: /usr/share/zoneinfo
storage:
  dbPath: "/data/mongodb_data/shard1/data"
  journal:
    enabled: true
    commitIntervalMs: 100
  directoryPerDB: true
  syncPeriodSecs: 60
  engine: wiredTiger
  wiredTiger:
    engineConfig:
      cacheSizeGB: 12
      statisticsLogDelaySecs: 0
      journalCompressor: snappy
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: snappy
    indexConfig:
      prefixCompression: true
net:
  bindIp: 192.168.122.246,127.0.0.1
  port: 27001
  maxIncomingConnections: 52528
  wireObjectCheck: true
  ipv6: false
  unixDomainSocket:
    enabled: false
operationProfiling:
  slowOpThresholdMs: 200
  mode: slowOp
security:
  authorization: disabled
  #clusterAuthMode: keyFile
  #keyFile: "/data/mongodb_data/keyfile"
  javascriptEnabled: true
setParameter:
  enableLocalhostAuthBypass: true
  authenticationMechanisms: SCRAM-SHA-1
replication:
  oplogSizeMB: 5120
  replSetName: shard1
sharding:
  clusterRole: shardsvr
  archiveMovedChunks: true
```

- Start

```shell
mongod -f share1.conf
```

Log in to any one of the shard1 members and initiate the replica set:

```
[root@slave shard1]# mongo 192.168.122.244:27001
> rs.initiate( {
    _id : "shard1",
    members: [
        { _id: 0, host: "192.168.122.244:27001" },
        { _id: 1, host: "192.168.122.246:27001" },
        { _id: 2, host: "192.168.122.14:27001" }
    ]
})
```

Configure, start and initiate shard2 and shard3 the same way, changing the port (27002, 27003), replSetName (shard2, shard3) and directory paths accordingly.

mongos router configuration

```
[root@master mongos]# cat mongos.conf
systemLog:
  quiet: false
  path: "/data/mongodb_data/mongos/log/mongos.log"
  logAppend: true
  logRotate: rename
  destination: file
  timeStampFormat: iso8601-local
processManagement:
  fork: true
  pidFilePath: "/data/mongodb_data/mongos/mongod.pid"
  timeZoneInfo: /usr/share/zoneinfo
net:
  bindIp: 192.168.122.246,127.0.0.1
  port: 20000
  maxIncomingConnections: 52528
  wireObjectCheck: true
  ipv6: false
  unixDomainSocket:
    enabled: false
security:
  # authorization is a mongod-only option, so it stays commented out for mongos
  #authorization: disabled
  #clusterAuthMode: keyFile
  #keyFile: "/data/mongodb_data/keyfile"
  javascriptEnabled: true
setParameter:
  enableLocalhostAuthBypass: true
  authenticationMechanisms: SCRAM-SHA-1
sharding:
  configDB: configRS/192.168.122.14:21000,192.168.122.244:21000,192.168.122.246:21000
```

- Start mongos on each of the three machines

```shell
[root@master mongos]# mongos -f mongos.conf
```

Enable sharding

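The config servers, mongos routers and shard servers are now all running, but an application connecting to mongos still does not get sharding: the shards must first be added to the cluster, and sharding must be enabled for a database and its collections before it takes effect.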

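The shard key is a field of the collection that MongoDB uses to split the data. For example, if you shard on "username", MongoDB partitions the documents by their username values. Choosing a shard key can be thought of as choosing the order of the data in the collection. It is closely related to indexing: as the collection grows, the shard key becomes its most important index. Only a field backed by an index can serve as the shard key.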
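A simplified sketch of the index requirement: here a shard key counts as supported when it is a prefix of some index's key pattern. The `indexes` array mimics the shape returned by `db.collection.getIndexes()`; the check ignores hashed and unique index subtleties and is not MongoDB's own validation logic. (For an empty collection, `sh.shardCollection()` creates the supporting index itself.)

```javascript
// Shard key and index key patterns are plain objects, e.g. { username: 1 }.
function isSupportedBy(shardKey, indexKey) {
  const skFields = Object.entries(shardKey);
  const ixFields = Object.entries(indexKey);
  if (ixFields.length < skFields.length) return false;
  // Every shard-key field must appear, in order, at the start of the index.
  return skFields.every(
    ([field, kind], i) => ixFields[i][0] === field && ixFields[i][1] === kind
  );
}

function hasSupportingIndex(shardKey, indexes) {
  return indexes.some((ix) => isSupportedBy(shardKey, ix.key));
}

// Hypothetical index list for a users collection.
const indexes = [
  { name: "_id_", key: { _id: 1 } },
  { name: "username_1_age_1", key: { username: 1, age: 1 } },
];

console.log(hasSupportingIndex({ username: 1 }, indexes)); // true (prefix of username_1_age_1)
console.log(hasSupportingIndex({ age: 1 }, indexes));      // false (age is not a prefix)
```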

Log in

```shell
[root@master config]# mongo 192.168.122.14:20000
```

Connecting the router to the shards

Register the shard replica sets with the mongos router:

```
mongos> use admin;
switched to db admin
// Add the shards
mongos> sh.addShard("shard1/192.168.122.244:27001,192.168.122.246:27001,192.168.122.14:27001")
{
    "shardAdded" : "shard1",
    "ok" : 1,
    "$clusterTime" : {
        "clusterTime" : Timestamp(1641803717, 4),
        "signature" : {
            "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
            "keyId" : NumberLong(0)
        }
    },
    "operationTime" : Timestamp(1641803717, 4)
}
mongos> sh.addShard("shard2/192.168.122.244:27002,192.168.122.246:27002,192.168.122.14:27002")
mongos> sh.addShard("shard3/192.168.122.244:27003,192.168.122.246:27003,192.168.122.14:27003")
// Or, using the command form
mongos> db.runCommand({ addshard:"shard1/192.168.122.244:27001,192.168.122.246:27001,192.168.122.14:27001","allowLocal":true })
```

- Check the status

```
mongos> sh.status();
--- Sharding Status ---
  sharding version: {
      "_id" : 1,
      "minCompatibleVersion" : 5,
      "currentVersion" : 6,
      "clusterId" : ObjectId("61dbe3728c0a24e39415b7ac")
  }
  shards:
      { "_id" : "shard1", "host" : "shard1/192.168.122.14:27001,192.168.122.244:27001,192.168.122.246:27001", "state" : 1, "topologyTime" : Timestamp(1641803717, 1) }
      { "_id" : "shard2", "host" : "shard2/192.168.122.14:27002,192.168.122.244:27002,192.168.122.246:27002", "state" : 1, "topologyTime" : Timestamp(1641803781, 1) }
      { "_id" : "shard3", "host" : "shard3/192.168.122.14:27003,192.168.122.244:27003,192.168.122.246:27003", "state" : 1, "topologyTime" : Timestamp(1641803793, 2) }
  active mongoses:
      "5.0.5" : 3
  autosplit:
      Currently enabled: yes
  balancer:
      Currently enabled: yes
      Currently running: no
      Failed balancer rounds in last 5 attempts: 0
      Migration results for the last 24 hours:
          No recent migrations
  databases:
      { "_id" : "config", "primary" : "config", "partitioned" : true }
```

The sharded cluster setup is now complete.

Testing

Sharding a database

Syntax: `( { enablesharding : "<database name>" } )`

```
// Must be run against the admin database
mongos> use admin;
switched to db admin
mongos> sh.enableSharding("test")
{
    "ok" : 1,
    "$clusterTime" : {
        "clusterTime" : Timestamp(1641804070, 4),
        "signature" : {
            "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
            "keyId" : NumberLong(0)
        }
    },
    "operationTime" : Timestamp(1641804070, 3)
}
// Or
mongos> db.runCommand( { enablesharding : "test" } )
```

Hashed sharding

- Hash-shard the users collection

```
mongos> db.runCommand({ shardcollection: "test.users",key: { _id: "hashed"}})
{
    "collectionsharded" : "test.users",
    "ok" : 1,
    "$clusterTime" : {
        "clusterTime" : Timestamp(1641805793, 29),
        "signature" : {
            "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
            "keyId" : NumberLong(0)
        }
    },
    "operationTime" : Timestamp(1641805793, 23)
}
// Insert data (switch from admin to the test database first)
mongos> use test
mongos> for(i=1; i<=500;i++){
    db.users.insert( {name:'mytest'+i, age:i} )
}
// Count the documents on each shard by connecting to each shard's primary
shard1:PRIMARY> use test
switched to db test
shard1:PRIMARY> db.users.count();
177
shard2:PRIMARY> use test;
switched to db test
shard2:PRIMARY> db.users.count();
161
shard3:PRIMARY> db.users.count()
162
// Or check the distribution from mongos
mongos> db.users.getShardDistribution()
```

Range (ordinary) sharding

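```
mongos> db.runCommand({ shardcollection: "test.users",key: { _id:1}})
```

A collection can be sharded only once, so run this on a collection that has not already been sharded (test.users was hash-sharded above).

Data distribution strategies for sharded clusters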

MongoDB sharded clusters offer three data distribution strategies:

(1) Range-based

(2) Hash-based

(3) Zone/tag-based

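Range-based sharding

Range-based sharding is easy to understand: data is usually divided into ranges of some field, such as the creation date, and each range is stored separately.

Its advantage is good performance for range queries on the shard key. Its drawbacks are hot spots and a potentially uneven distribution: with a steadily increasing key such as a date, all new writes go to the same chunk.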

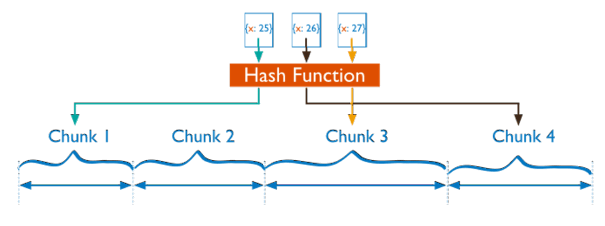
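A minimal sketch of range placement with a creation date as the shard key, using hypothetical chunk boundaries. It shows both properties described above: a date-range query touches only the chunks covering that range, and new documents with ever-increasing dates all land in the last chunk, which is the hot-spot problem.

```javascript
// Hypothetical chunk boundaries on a "createdAt" shard key. ISO date strings
// compare correctly as plain strings, so string comparison is enough here.
const rangeChunks = [
  { min: "0000", max: "2021-05-01", shard: "shard1" },
  { min: "2021-05-01", max: "2021-09-01", shard: "shard2" },
  { min: "2021-09-01", max: "9999", shard: "shard3" },
];

function shardFor(createdAt) {
  return rangeChunks.find((c) => createdAt >= c.min && createdAt < c.max).shard;
}

// Shards that must be asked for createdAt in [from, to).
function shardsForRange(from, to) {
  return rangeChunks.filter((c) => c.min < to && from < c.max).map((c) => c.shard);
}

// A one-month range query hits a single shard...
console.log(shardsForRange("2021-06-01", "2021-07-01")); // [ 'shard2' ]
// ...but new writes with increasing dates all go to the same shard.
console.log(["2022-01-10", "2022-01-11", "2022-01-12"].map(shardFor)); // [ 'shard3', 'shard3', 'shard3' ]
```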

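Hash-based sharding

Hash-based sharding is also straightforward: the location of each document is determined by the hash of a field's value.

Its advantage is a more even data distribution. Its drawback is less efficient range queries, because a range query may have to read from several shards and merge the results.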

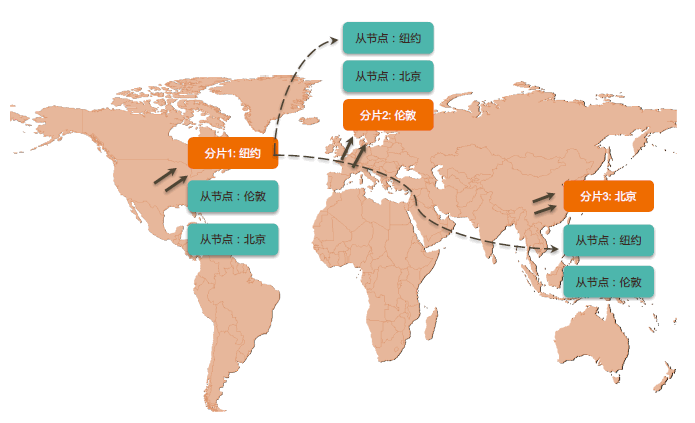
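A sketch of hashed placement, assuming integer keys 1..500 as a stand-in for `_id` values (like the users test above) and MD5 from Node's `crypto` as a stand-in hash; MongoDB's hashed index uses its own hash function. As with hashed sharding, the hash space is divided into contiguous ranges, one per shard here, and each document goes to the range its hash falls into.

```javascript
const crypto = require("crypto");

// Stand-in hash: first 4 bytes of MD5 as an unsigned 32-bit integer.
function hashKey(value) {
  return crypto.createHash("md5").update(String(value)).digest().readUInt32BE(0);
}

// Split the hash space [0, 2^32) into one equal-width range per shard.
const shards = ["shard1", "shard2", "shard3"];
const width = Math.ceil(2 ** 32 / shards.length);

function shardForHashed(value) {
  return shards[Math.floor(hashKey(value) / width)];
}

// Place keys 1..500 and count how many documents each shard receives.
const counts = {};
for (let i = 1; i <= 500; i++) {
  const s = shardForHashed(i);
  counts[s] = (counts[s] || 0) + 1;
}

// Consecutive keys 1..30 scatter over several shards, so a range query
// on a hashed key has to be sent to all of them.
const rangeTargets = new Set(Array.from({ length: 30 }, (_, i) => shardForHashed(i + 1)));
console.log(counts, [...rangeTargets]);
```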

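Zone/tag-based sharding

Zone/tag-based sharding is less intuitive and differs from the familiar sharding methods. It is typically used in two-region three-data-center or multi-region active-active deployments: when data has region-specific access patterns, you can define custom zones to shard by.

By tagging, data that serves a particular region can be stored on the shards located in that region (for example, CountryCode=NewYork), so that reads and writes happen locally.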
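A sketch of zone placement with hypothetical zone names and ranges: shard-key ranges on a `countryCode` field are pinned to zones, and each zone maps to the shards in that region. In the mongo shell this setup is made with `sh.addShardToZone()` and `sh.updateZoneKeyRange()`; the data structures below only mirror what those calls record.

```javascript
// Hypothetical zone membership: which zone each shard belongs to...
const shardZones = { shard1: "NA", shard2: "EU", shard3: "EU" };
// ...and which shard-key ranges [min, max) are pinned to each zone.
const zoneRanges = [
  { min: "CA", max: "CB", zone: "NA" }, // countryCode "CA"
  { min: "DE", max: "DF", zone: "EU" }, // countryCode "DE"
  { min: "US", max: "UT", zone: "NA" }, // countryCode "US"
];

// Shards allowed to hold a document with the given countryCode.
function eligibleShards(countryCode) {
  const range = zoneRanges.find((r) => countryCode >= r.min && countryCode < r.max);
  if (!range) return Object.keys(shardZones); // outside every zone: any shard
  return Object.keys(shardZones).filter((s) => shardZones[s] === range.zone);
}

console.log(eligibleShards("DE")); // [ 'shard2', 'shard3' ]
console.log(eligibleShards("US")); // [ 'shard1' ]
console.log(eligibleShards("JP")); // [ 'shard1', 'shard2', 'shard3' ]
```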

Common operations

View sharding information

```
mongos> db.printShardingStatus()
// Or
mongos> sh.status()
// For troubleshooting (verbose output)
mongos> sh.status({"verbose":1})
// Or
mongos> db.printShardingStatus("vvvv")
// Or
mongos> printShardingStatus(db.getSisterDB("config"),1)
```

Check whether the balancer is enabled

```
mongos> sh.getBalancerState()
true
```

Check whether a data migration is in progress

```
mongos> sh.isBalancerRunning()
```

Starting and stopping

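Start the cluster in this order: config servers first, then the shards, and mongos last.

Shutdown: no strict order is required, although MongoDB's documented restart procedure stops the mongos routers first, then the shards, then the config servers.

Enable authentication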

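Add the following to the configuration file of every instance. The key file must be identical on all members and must not be readable by group or others (for example, mode 400).

```yaml
security:
  authorization: enabled  # if an instance fails to start (mongos does not accept this option), remove this line
  keyFile: /root/keyfile
  clusterAuthMode: keyFile
```

Remove a shard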

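```
mongos> use admin
// Replace "myshardrs02" with the _id of the shard to remove, as listed by sh.status()
mongos> db.runCommand( { removeShard: "myshardrs02" } )
```

- The last remaining shard cannot be removed.

- Removing a shard automatically migrates its data to the other shards, which takes time.

- Once the migration finishes, run the removeShard command again to complete the removal.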
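Because draining takes time, the remove step is usually repeated until it reports completion. A sketch of that polling loop, with the command call injected as a function so it runs without a database; in the mongo shell the call would be `db.adminCommand({ removeShard: "<shard name>" })`, whose `state` field goes from `"started"` through `"ongoing"` to `"completed"`. `drainShard` and `makeFakeRemoveShard` are hypothetical names.

```javascript
// runRemoveShard: async function that issues removeShard and resolves to the
// command's response document. Returns the number of calls it took.
async function drainShard(runRemoveShard, { intervalMs = 1000, maxAttempts = 100 } = {}) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const res = await runRemoveShard();
    if (res.state === "completed") return attempt;
    // "started" or "ongoing": chunks are still being migrated off the shard.
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
  throw new Error("shard did not finish draining");
}

// Fake command for demonstration: reports completion on the third call.
function makeFakeRemoveShard() {
  const states = ["started", "ongoing", "completed"];
  let calls = 0;
  return async () => ({ ok: 1, state: states[Math.min(calls++, states.length - 1)] });
}

drainShard(makeFakeRemoveShard(), { intervalMs: 10 }).then((n) =>
  console.log(`drained after ${n} checks`)
);
```

If the shard is the primary shard of a database, that database must also be moved with `movePrimary` before the removal can complete.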

Add a shard

```
mongos> sh.addShard("shard2/192.168.122.244:27002,192.168.122.246:27002,192.168.122.14:27002")
```

Connecting to a sharded cluster

```
// Connect to a replica set
mongodb://node1,node2,node3…/database?[options]
// Connect to a sharded cluster
mongodb://mongos1,mongos2,mongos3…/database?[options]
```

Common connection string options include:

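- maxPoolSize: connection pool size

- maxWaitTime: maximum wait time; setting it is recommended so that overly slow operations are cut off

- writeConcern: set it to majority to keep data safe

- readConcern: use it where strong data consistency is required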

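For the hosts listed in the connection string:

- For both replica sets and sharded clusters, list all node addresses in the connection string.

- Use the same hostnames or IP addresses as in the replica set's internal configuration; hostnames are recommended.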
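A small sketch that builds a connection string following the advice above: every mongos is listed, and options are appended as a query string. `maxPoolSize`, `w` (write concern) and `readConcernLevel` are standard connection-string options; the pool size of 100 is an arbitrary example value, and `maxWaitTime` is left out because its spelling differs between drivers. `buildUri` is a hypothetical helper.

```javascript
// Build a mongodb:// URI listing every mongos (or every replica-set member).
function buildUri(hostPorts, database, options = {}) {
  const query = new URLSearchParams(options).toString();
  return `mongodb://${hostPorts.join(",")}/${database}` + (query ? `?${query}` : "");
}

const uri = buildUri(
  ["192.168.122.14:20000", "192.168.122.244:20000", "192.168.122.246:20000"],
  "test",
  { maxPoolSize: "100", w: "majority", readConcernLevel: "majority" }
);
console.log(uri);
// mongodb://192.168.122.14:20000,192.168.122.244:20000,192.168.122.246:20000/test?maxPoolSize=100&w=majority&readConcernLevel=majority
```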

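Do not put a load balancer in front of mongos. The MongoDB driver handles load balancing and automatic failover itself; if a load balancer (such as LVS or Nginx) sits in front of mongos or a replica set, the driver can no longer detect which nodes are alive or tell on which node a cursor was created.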

https://blog.51cto.com/bigboss/2160311

https://www.cnblogs.com/clsn/p/8214345.html#auto-id-37

https://www.cnblogs.com/wushaoyu/p/10723599.html

Video: https://www.bilibili.com/video/BV1vL4y1J7i3?p=30

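Viewing shard information and status (on mongos):

```
// List the shards (run against the admin database)
db.runCommand({listshards:1})
// Check whether this is a sharded cluster, i.e. connected to mongos
db.runCommand({isdbgrid:1})
// Equivalent to sh.status()
printShardingStatus();
db.collection.stats()
db.collection.getShardDistribution()
```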