- Documentation

https://docs.oracle.com/cd/E26926_01/html/E25826/gavwg.html#scrolltoc

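ZFS is administered with two tools:

- zpool: controls storage pools and adds, removes, replaces, and manages disks

- zfs: creates, destroys, and manages file systems and volumes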

1. View disks

```
[root@other ~]# lsblk
NAME        MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
nvme0n1     259:0    0   20G  0 disk
├─nvme0n1p9 259:2    0    8M  0 part
└─nvme0n1p1 259:1    0   20G  0 part
sr0          11:0    1 1024M  0 rom
nvme0n2     259:3    0    5G  0 disk
├─nvme0n2p1 259:4    0    5G  0 part
└─nvme0n2p9 259:5    0    8M  0 part
```

2. Create
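Before creating a pool, check which disks are still free. In the lsblk output above, both NVMe disks already carry partitions 1 and 9, which is the layout ZFS on Linux creates when it is given a whole disk. The helper below is a minimal sketch and is not part of ZFS. The name unpartitioned_disks is hypothetical, and the helper assumes that a partition's name begins with its parent disk's name. It reads lsblk's raw output (lsblk -rn -o NAME,TYPE), so you can try it without touching any disk.

```bash
# Hypothetical helper (not part of ZFS): print whole disks that have no
# partitions. Reads "NAME TYPE" lines on stdin, as produced by
#   lsblk -rn -o NAME,TYPE
# Assumption: a partition's name starts with its disk's name
# (nvme0n1 -> nvme0n1p1, sda -> sda1). The prefix test is naive and
# would also count "sdaa1" as a partition of "sda".
unpartitioned_disks() {
    awk '
        $2 == "disk" { disks[++n] = $1 }
        $2 == "part" { parts[++m] = $1 }
        END {
            for (i = 1; i <= n; i++) {
                used = 0
                for (j = 1; j <= m; j++)
                    if (index(parts[j], disks[i]) == 1) { used = 1; break }
                if (!used) print disks[i]
            }
        }'
}
```

On a live system, run lsblk -rn -o NAME,TYPE | unpartitioned_disks. An empty result means every disk already has partitions, as in the listing above.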

2.0 Help

```
[root@other ~]# zfs set -h
```

2.1 Storage pools and ZFS file systems

```bash
# Syntax (<type>: omit for a plain stripe, or mirror, raidz, raidz2, raidz3)
# The vdev type goes before the disks it applies to.
# ashift=12 means 4 KiB (2^12-byte) sectors.
zpool create -o ashift=12 <pool_name> [<type>] <disk> ...

[root@other ~]# zpool create -o ashift=12 zp1 nvme0n1

# Tuning
zfs set compression=lz4 zp1   # enable LZ4 compression
zfs set canmount=off zp1      # do not mount the pool's top-level dataset
zfs set atime=off zp1         # do not update access times on reads
```

2.2 Mount

```bash
# Syntax: zfs create -o mountpoint=/data zp1/data
#   /data     mount point; created automatically if it does not exist
#   zp1/data  name of the file system (dataset)
[root@other ~]# zfs create -o mountpoint=/data zp1/data

# Another example:
# zfs create -o mountpoint=/pg_arch zp1/pg_arch
```
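You can collect the pool-creation and mount steps above into a dry-run helper that only prints the commands. That makes it easy to review the vdev layout before anything is written to disk. This is a sketch. The helper name print_pool_setup and its argument order are hypothetical, and the word stripe is only this helper's label for "no vdev keyword". The main point it encodes is that the vdev type comes before the disks it groups.

```bash
# Hypothetical dry-run helper: prints (does not run) the pool-creation,
# tuning and dataset commands from this section.
# Usage: print_pool_setup POOL TYPE DATASET MOUNTPOINT DISK...
#   TYPE is "stripe" (no vdev keyword) or mirror|raidz|raidz1|raidz2|raidz3.
print_pool_setup() {
    if [ $# -lt 5 ]; then
        echo "usage: print_pool_setup POOL TYPE DATASET MOUNTPOINT DISK..." >&2
        return 1
    fi
    local pool=$1 vtype=$2 dataset=$3 mnt=$4 vdev
    shift 4
    case $vtype in
        stripe) vdev="" ;;
        mirror|raidz|raidz1|raidz2|raidz3) vdev="$vtype " ;;
        *) echo "error: unknown vdev type: $vtype" >&2; return 1 ;;
    esac
    # The vdev keyword must come before the disks it groups.
    echo "zpool create -o ashift=12 $pool $vdev$*"
    echo "zfs set compression=lz4 $pool"
    echo "zfs set canmount=off $pool"
    echo "zfs set atime=off $pool"
    echo "zfs create -o mountpoint=$mnt $pool/$dataset"
}
```

For example, print_pool_setup zp1 raidz2 data /data nvme0n1 nvme0n2 nvme0n3 nvme0n4 prints five commands. Pipe the output to sh only after you have read it.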

3. Modify

3.1 Rename a file system

```bash
# Rename a file system (old_name and new_name are full dataset names)
zfs rename old_name new_name
```

3.2 Rename a storage pool

```bash
# A pool is renamed by exporting it and importing it under a new name
# (exporting unmounts all of the pool's file systems)
zpool export tank
zpool import tank newpool
```

4. Delete

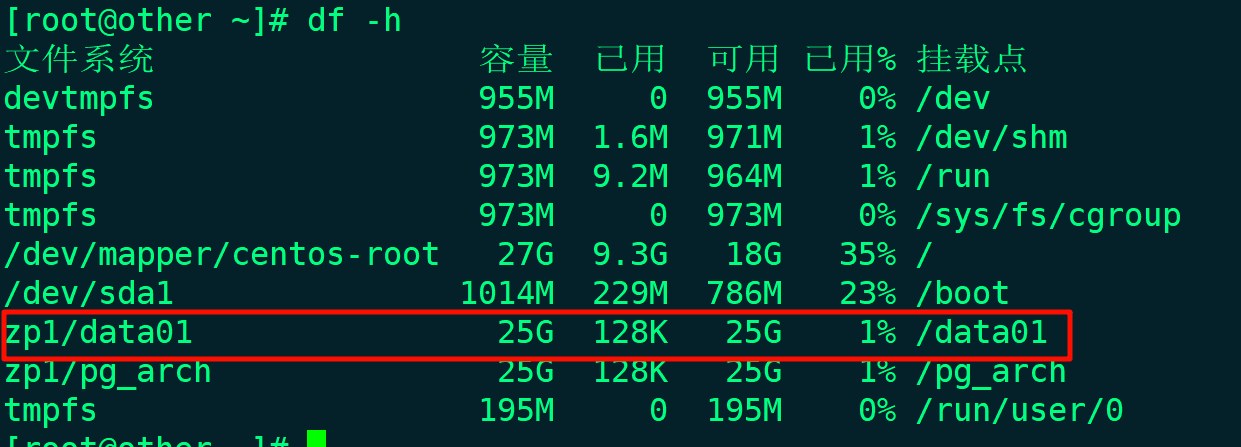

4.1 Destroy a file system

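```bash
# Destroy a file system
# -R: also destroy all dependents (child datasets, snapshots and clones)
# -f: force-unmount the file system if it is busy
zfs destroy -Rf zp1/pg_arch
```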
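zfs destroy -Rf cannot be undone, and it also removes the snapshots and clones that depend on the dataset, so a guard that previews the damage first can be useful. zfs destroy accepts -n (dry run) and -v (verbose). The sketch below runs a dry run first and then asks you to type the dataset name again. confirm_destroy is a hypothetical helper. The ZFS variable exists only so you can exercise the function with ZFS=echo on a machine without ZFS.

```bash
# Hypothetical guard around "zfs destroy -Rf" (not a ZFS feature).
# 1. Dry run: -n destroys nothing, -v lists what would be destroyed.
# 2. Ask for the dataset name again; destroy only on an exact match.
# ZFS defaults to the real binary; set ZFS=echo to try it without ZFS.
: "${ZFS:=zfs}"

confirm_destroy() {
    local ds=$1 answer
    "$ZFS" destroy -nvR "$ds" || return 1
    printf 'Type "%s" to destroy it: ' "$ds" >&2
    IFS= read -r answer || return 1
    if [ "$answer" != "$ds" ]; then
        echo "aborted" >&2
        return 1
    fi
    "$ZFS" destroy -Rf "$ds"
}
```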

4.2 Destroy a storage pool

# 销毁一个地址池

## zpool destroy 存储池名称

zpool destroy zfspool_name

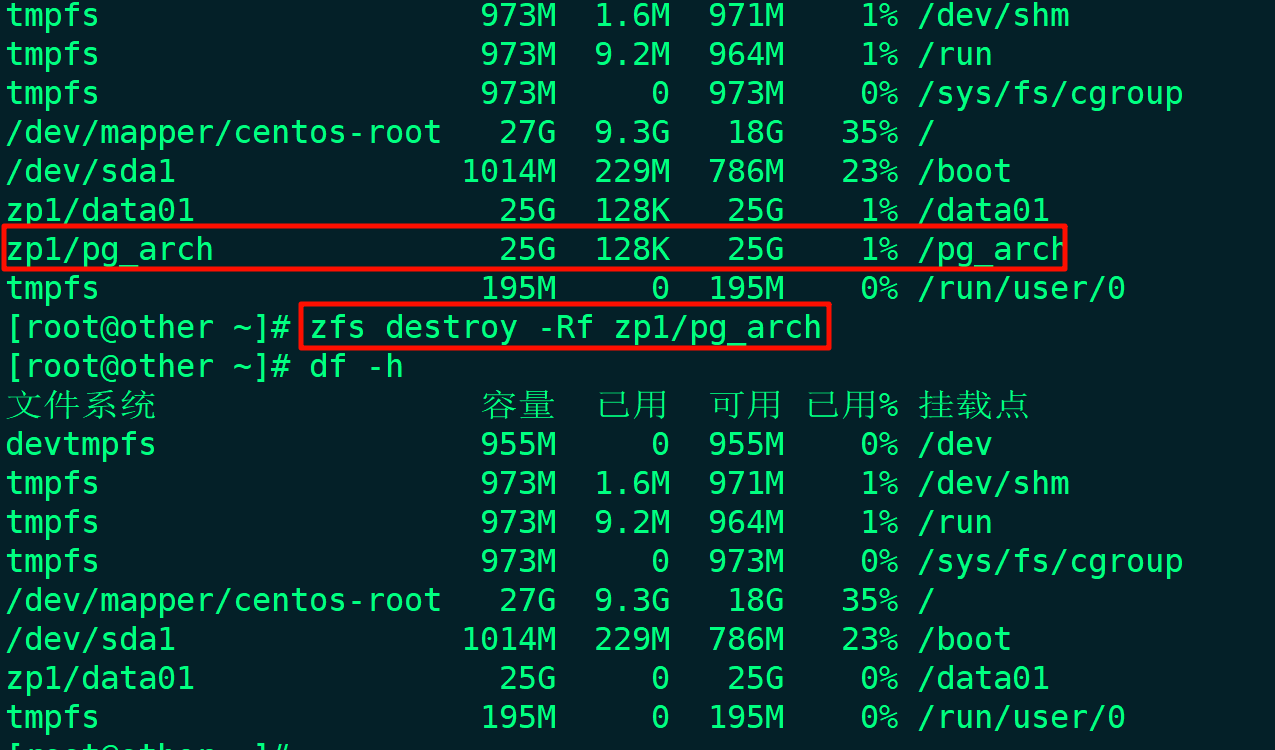

# 查看存储池列表

zpool list

# 查看存储池状态

zpool status

# 查看磁盘空间使用情况

df -Th# 销毁一个地址池

## zpool destroy 存储池名称

zpool destroy zfspool_name

# 查看存储池列表

zpool list

# 查看存储池状态

zpool status

# 查看磁盘空间使用情况

df -Th5.扩容

5.1 Add a disk to an existing pool

```
# Find the disk name
[root@other ~]# lsblk
NAME        MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
nvme0n1     259:0    0   20G  0 disk
├─nvme0n1p9 259:2    0    8M  0 part
└─nvme0n1p1 259:1    0   20G  0 part
sr0          11:0    1 1024M  0 rom
nvme0n2     259:3    0    5G  0 disk

# Add the disk to the pool
# -f forces use of a disk that appears to be in use or whose replication
#    level does not match the pool
# nvme0n2 becomes a second top-level vdev: data is striped across both
# disks with no redundancy
[root@other ~]# zpool add zfspool_name -f nvme0n2
```

6. Check status

6.1 Show pool status

```
[root@other ~]# zpool status zp1
  pool: zp1                   # pool name
 state: ONLINE                # current state of the pool
  scan: none requested
config:                       # READ: I/O errors on reads | WRITE: I/O errors on writes | CKSUM: checksum errors

        NAME        STATE     READ WRITE CKSUM
        zp1         ONLINE       0     0     0
          nvme0n1   ONLINE       0     0     0
          nvme0n2   ONLINE       0     0     0

errors: No known data errors  # whether any known data errors exist
```

6.2 Get pool properties

```
# Get all properties of all pools
[root@other ~]# zpool get all
NAME  PROPERTY                       VALUE                 SOURCE
zp1   size                           24.8G                 -
zp1   capacity                       0%                    -
zp1   altroot                        -                     default
zp1   health                         ONLINE                -
zp1   guid                           17760530291839665031  -
zp1   version                        -                     default
zp1   bootfs                         -                     default
zp1   delegation                     on                    default
zp1   autoreplace                    off                   default
zp1   cachefile                      -                     default
zp1   failmode                       wait                  default
zp1   listsnapshots                  off                   default
zp1   autoexpand                     off                   default
zp1   dedupditto                     0                     default
zp1   dedupratio                     1.00x                 -
zp1   free                           24.8G                 -
zp1   allocated                      1.37M                 -
zp1   readonly                       off                   -
zp1   ashift                         12                    local
zp1   comment                        -                     default
zp1   expandsize                     -                     -
zp1   freeing                        0                     -
zp1   fragmentation                  0%                    -
zp1   leaked                         0                     -
zp1   multihost                      off                   default
zp1   feature@async_destroy          enabled               local
zp1   feature@empty_bpobj            active                local
zp1   feature@lz4_compress           active                local
zp1   feature@multi_vdev_crash_dump  enabled               local
zp1   feature@spacemap_histogram     active                local
zp1   feature@enabled_txg            active                local
zp1   feature@hole_birth             active                local
zp1   feature@extensible_dataset     active                local
zp1   feature@embedded_data          active                local
zp1   feature@bookmarks              enabled               local
zp1   feature@filesystem_limits      enabled               local
zp1   feature@large_blocks           enabled               local
zp1   feature@large_dnode            enabled               local
zp1   feature@sha512                 enabled               local
zp1   feature@skein                  enabled               local
zp1   feature@edonr                  enabled               local
zp1   feature@userobj_accounting     active                local

# Get all properties of a single pool (syntax: zpool get all|<property> [pool])
[root@other ~]# zpool get all zp1
```

6.3 List storage pools

```bash
# List storage pools
zpool list
```
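For scripts and cron jobs, zpool list can print selected columns without headers: -H separates fields with tabs, and -o selects the properties. The sketch below flags pools that are not ONLINE or are getting full. check_pools is a hypothetical name, and the default 80% threshold is an arbitrary example, not a ZFS rule.

```bash
# Hypothetical health/capacity check (not part of ZFS).
# Input: output of  zpool list -H -o name,health,capacity
# (tab-separated, e.g. "zp1<TAB>ONLINE<TAB>0%").
# Prints each pool that is not ONLINE or whose capacity is at or above
# the threshold (default 80, an arbitrary example) and returns 1 if any.
check_pools() {
    local limit=${1:-80}
    awk -F '\t' -v limit="$limit" '
        { cap = $3; sub(/%$/, "", cap) }
        $2 != "ONLINE" || cap + 0 >= limit + 0 {
            printf "%s health=%s capacity=%s\n", $1, $2, $3
            bad = 1
        }
        END { exit (bad ? 1 : 0) }'
}
```

Typical use: zpool list -H -o name,health,capacity | check_pools 80 || echo "a pool needs attention".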

6.4 View pool history

```bash
# Show the history of commands that changed the pool's state
zpool history
```

7. Disk management

- Documentation

https://docs.oracle.com/cd/E19253-01/819-7065/gayrd/index.html

7.1 Add a disk

```
# Find the disk name
[root@other ~]# lsblk
NAME        MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
nvme0n1     259:0    0   20G  0 disk
├─nvme0n1p9 259:2    0    8M  0 part
└─nvme0n1p1 259:1    0   20G  0 part
sr0          11:0    1 1024M  0 rom
nvme0n2     259:3    0    5G  0 disk

# Add the disk to the pool
[root@other ~]# zpool add zfspool_name -f nvme0n2
```

7.2 Mark a disk online or offline

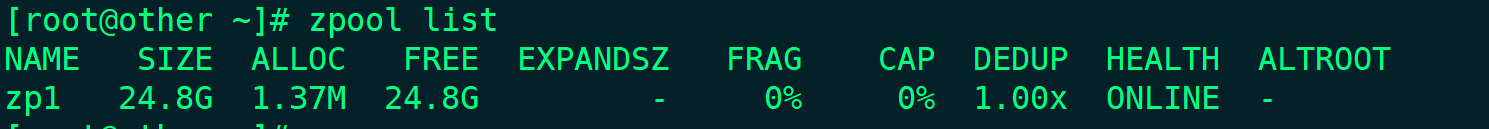

```bash
# Mark a disk as online
zpool online zp1 nvme0n2

# When a disk is faulty, take it offline
# (-f marks it as faulted; zpool status then reports "external device fault")
zpool offline zp1 -f nvme0n2
```
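After you take a disk offline or mark it faulted, the quickest built-in check is zpool status -x, which reports only pools that have problems. If you need the affected devices by name, for example in an alert, a small parser over the zpool status table is enough. not_online is a hypothetical helper. It assumes the usual NAME/STATE/READ/WRITE/CKSUM table layout, and it would also report hot spares, whose state is AVAIL.

```bash
# Hypothetical parser (not part of ZFS): reads "zpool status" output on
# stdin and prints "<name> <state>" for every row of the config table
# whose STATE is not ONLINE. Returns 1 if any row was printed.
# Assumes the usual NAME/STATE/READ/WRITE/CKSUM table; hot spares
# (state AVAIL) would be reported too.
not_online() {
    awk '
        $1 == "NAME" && $2 == "STATE"      { in_table = 1; next }
        /^[ \t]*errors:/                   { in_table = 0 }
        in_table && NF >= 2 && $2 != "ONLINE" { print $1, $2; found = 1 }
        END { exit (found ? 1 : 0) }'
}
```

Typical use: zpool status zp1 | not_online || echo "zp1 has devices that are not ONLINE".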

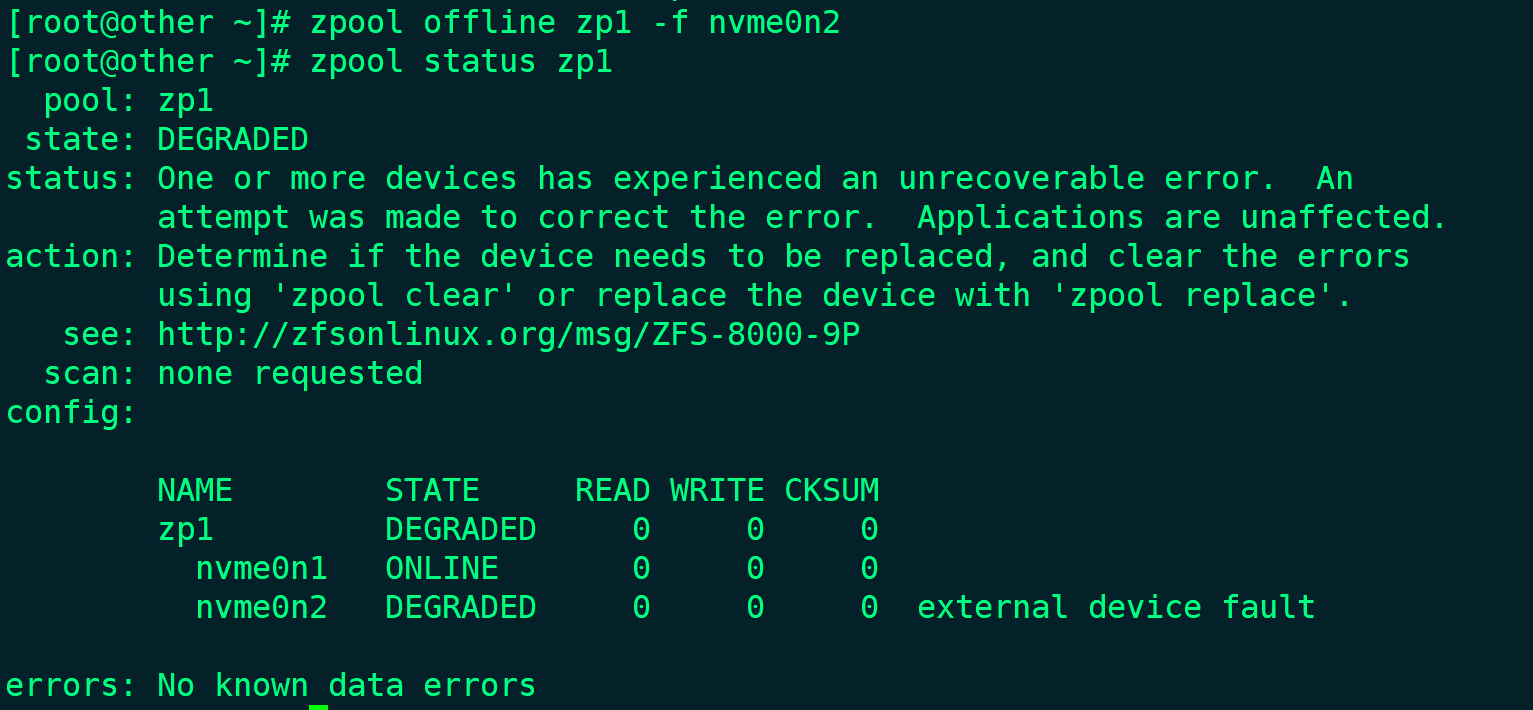

1. Clear device errors in a pool

If a device has been taken offline because of a fault, so that errors are listed in the zpool status output, use the zpool clear command to reset the error counts.

If you give only the pool name, the command clears all device errors in the pool. For example:

```
[root@other ~]# zpool clear zp1
[root@other ~]# zpool status
  pool: zp1
 state: ONLINE
  scan: scrub repaired 0B in 0h0m with 0 errors on Wed Apr 3 14:21:41 2024
config:

        NAME        STATE     READ WRITE CKSUM
        zp1         ONLINE       0     0     0
          nvme0n1   ONLINE       0     0     0
          nvme0n2   ONLINE       0     0     0

errors: No known data errors
```

If you specify one or more devices, the command clears only the errors associated with those devices. For example:

```
[root@other ~]# zpool offline zp1 -f nvme0n2
[root@other ~]# zpool status
  pool: zp1
 state: DEGRADED
status: One or more devices has experienced an unrecoverable error. An
        attempt was made to correct the error. Applications are unaffected.
action: Determine if the device needs to be replaced, and clear the errors
        using 'zpool clear' or replace the device with 'zpool replace'.
   see: http://zfsonlinux.org/msg/ZFS-8000-9P
  scan: scrub repaired 0B in 0h0m with 0 errors on Wed Apr 3 14:23:17 2024
config:

        NAME        STATE     READ WRITE CKSUM
        zp1         DEGRADED     0     0     0
          nvme0n1   ONLINE       0     0     0
          nvme0n2   DEGRADED     0     0     0  external device fault

errors: No known data errors
[root@other ~]# zpool clear zp1 nvme0n2
[root@other ~]# zpool status
  pool: zp1
 state: ONLINE
  scan: scrub repaired 0B in 0h0m with 0 errors on Wed Apr 3 14:29:23 2024
config:

        NAME        STATE     READ WRITE CKSUM
        zp1         ONLINE       0     0     0
          nvme0n1   ONLINE       0     0     0
          nvme0n2   ONLINE       0     0     0

errors: No known data errors
```

7.3 Remove a disk

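Currently, zpool remove can only remove hot spares, log devices, and cache devices. To remove a device that is part of a mirrored configuration in the main pool, use zpool detach. Non-redundant devices and RAID-Z devices cannot be removed from a pool. (This describes the Oracle Solaris ZFS documentation linked above. OpenZFS 0.8 and later can also remove top-level single-disk and mirror vdevs with zpool remove, provided the pool contains no top-level RAID-Z vdev.)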

```
[root@other ~]# zpool remove -h
missing device
usage:
        remove <pool> <device> ...
```

8. RAID-Z pools

In the following examples, disks nvme0n1 to nvme0n5 are 5G each.

8.1 RAIDZ-1

Use at least 3 disks. The disks should be the same size; otherwise each disk contributes only as much space as the smallest one.

```
[root@other ~]# zpool create zp1 raidz1 nvme0n1 nvme0n2 nvme0n3
[root@other ~]# zpool status
  pool: zp1
 state: ONLINE
  scan: resilvered 50.5K in 0h0m with 0 errors on Wed Apr 3 15:45:32 2024
config:

        NAME         STATE     READ WRITE CKSUM
        zp1          ONLINE       0     0     0
          raidz1-0   ONLINE       0     0     0
            nvme0n1  ONLINE       0     0     0
            nvme0n2  ONLINE       0     0     0
            nvme0n3  ONLINE       0     0     0

errors: No known data errors

# Destroy the pool
[root@other ~]# zpool destroy zp1

# Check the status
[root@other ~]# zpool status
no pools available
```

8.2 RAIDZ-2

```
# Create
[root@other ~]# zpool create zp1 raidz2 nvme0n1 nvme0n2 nvme0n3 nvme0n4

# Check the status
[root@other ~]# zpool status
  pool: zp1
 state: ONLINE
  scan: none requested
config:

        NAME         STATE     READ WRITE CKSUM
        zp1          ONLINE       0     0     0
          raidz2-0   ONLINE       0     0     0
            nvme0n1  ONLINE       0     0     0
            nvme0n2  ONLINE       0     0     0
            nvme0n3  ONLINE       0     0     0
            nvme0n4  ONLINE       0     0     0

errors: No known data errors

# Check the available space
[root@other ~]# zfs list
NAME   USED  AVAIL  REFER  MOUNTPOINT
zp1    111K  9.59G  32.9K  /zp1
```

8.3 RAIDZ-3

With 5 disks, the space of two disks is usable; the equivalent of three disks goes to parity.
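The usable space of a single RAIDZ vdev is roughly the number of disks minus the number of parity disks, multiplied by the size of the smallest disk. Below is a shell sketch of that arithmetic. raidz_usable is a hypothetical name, and it handles integer sizes only.

```bash
# Rough estimate for a single RAIDZ vdev (hypothetical helper):
#   usable ~= (disks - parity) * size of the smallest disk
# parity is 1, 2 or 3 for raidz1/raidz2/raidz3. Integer sizes only; the
# result is in the same unit as the size you pass. ZFS metadata, padding
# and reserved space are ignored, so "zfs list" will show somewhat less.
raidz_usable() {
    local disks=$1 parity=$2 size=$3
    if [ "$disks" -le "$parity" ]; then
        echo "error: need more than $parity disks" >&2
        return 1
    fi
    echo $(( (disks - parity) * size ))
}
```

All three layouts in this chapter (3 disks with RAIDZ-1, 4 with RAIDZ-2, 5 with RAIDZ-3, each disk 5G) come out at 10. zfs list shows about 9.6G because this estimate leaves out metadata, padding, and reserved space.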

```
[root@other ~]# zpool create zp1 raidz3 nvme0n1 nvme0n2 nvme0n3 nvme0n4 nvme0n5
[root@other ~]# zfs list
NAME   USED  AVAIL  REFER  MOUNTPOINT
zp1    114K  9.60G  35.1K  /zp1
```

9. Snapshots

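A pool can hold up to 2^64 snapshots. ZFS snapshots are persistent, so they survive reboots. They also need no separate backup storage, because they live in the same storage pool as the rest of the data.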

9.1 Create snapshots

There are two types of snapshot: pool snapshots and dataset snapshots.

Syntax:

- pool/dataset@snapshot-name

- pool@snapshot-name
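The part of a snapshot name after the @ is free-form. If you put a sortable timestamp there, snapshots are easy to order and prune. Below is a sketch that assumes a hypothetical <prefix>-YYYY-MM-DD_HHMM naming convention. The examples in this document simply use a year.

```bash
# Hypothetical helper: build "<dataset>@<prefix>-<timestamp>".
# The timestamp defaults to the current local time as YYYY-MM-DD_HHMM,
# which sorts in creation order; pass one explicitly to get a fixed name.
snap_name() {
    local dataset=$1 prefix=${2:-manual}
    local stamp=${3:-$(date +%Y-%m-%d_%H%M)}
    case $dataset in
        ''|*@*) echo "error: expected a pool or dataset name without @" >&2
                return 1 ;;
    esac
    printf '%s@%s-%s\n' "$dataset" "$prefix" "$stamp"
}

# Typical use (not run here):
#   zfs snapshot "$(snap_name zp1/data01 daily)"
```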

Dataset snapshot:

```
# Create
[root@other ~]# zfs snapshot zp1/data01@2024

# List
[root@other ~]# zfs list -t snapshot
NAME              USED  AVAIL  REFER  MOUNTPOINT
zp1/data01@2024     0B      -    96K  -
```

Where snapshots are physically stored:

```
[root@other ~]# cd /data01/.zfs/
[root@other .zfs]# ls
shares  snapshot
```

The .zfs directory is not visible, even with ls -a.

To make the .zfs directory visible, change the snapdir property on the dataset. Valid values are hidden and visible; the default is hidden.
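The snapdir setting only controls whether .zfs appears in directory listings. You can reach the directory by its path either way, as the cd /data01/.zfs/ above shows. That makes it the simplest way to get back a single file without rolling back the whole dataset. restore_from_snapshot is a hypothetical helper that copies one path out of .zfs/snapshot/<snapshot>/. Snapshots are read-only, so the copy is written into the live file system.

```bash
# Hypothetical helper: copy a file or directory out of a snapshot.
# Usage: restore_from_snapshot MOUNTPOINT SNAPSHOT RELATIVE_PATH DEST
#   e.g. restore_from_snapshot /data01 2024 etc/app.conf /data01/etc/app.conf
#   (etc/app.conf is only an illustrative path)
# Works with snapdir=hidden as well, because the path is used directly.
restore_from_snapshot() {
    local mnt=$1 snap=$2 rel=$3 dest=$4
    local src="$mnt/.zfs/snapshot/$snap/$rel"
    if [ ! -e "$src" ]; then
        echo "error: $src does not exist" >&2
        return 1
    fi
    # -R copies directories recursively, -p keeps modes and timestamps
    cp -Rp "$src" "$dest"
}
```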

```
# zfs set snapdir=visible zp1/data01
# ls -a /data01
.zfs/
```

Delete a snapshot

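While a snapshot exists, ZFS treats it as a child of its dataset. You therefore cannot destroy a dataset until all of its snapshots and nested datasets have been destroyed; zfs destroy -r removes them together.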

```
[root@other .zfs]# zfs list -t snapshot
NAME              USED  AVAIL  REFER  MOUNTPOINT
zp1/data01@2024     0B      -    96K  -
[root@other .zfs]# zfs destroy zp1/data01@2024
```

Rename

```bash
zfs rename old_snapshot_name new_snapshot_name
```

Roll back to a snapshot

```bash
zfs rollback -r snapshot_name
```

```
[root@other .zfs]# zfs list -t snapshot
NAME              USED  AVAIL  REFER  MOUNTPOINT
zp1/data01@2024     0B      -    96K  -
zp1/data01@2025     0B      -    96K  -
zp1/data01@2026     0B      -    96K  -
zp1/data01@2027     0B      -    96K  -

# Roll back to the zp1/data01@2025 snapshot
[root@other .zfs]# zfs rollback zp1/data01@2025
cannot rollback to 'zp1/data01@2025': more recent snapshots or bookmarks exist
use '-r' to force deletion of the following snapshots and bookmarks:
zp1/data01@2027
zp1/data01@2026
[root@other .zfs]# zfs rollback -r zp1/data01@2025
```

-r destroys the intermediate snapshots (those newer than the target) so that the earlier version can be restored.

-R additionally destroys any clones of those intermediate snapshots.

```
[root@other .zfs]# zfs list -t snapshot
NAME              USED  AVAIL  REFER  MOUNTPOINT
zp1/data01@2024     0B      -    96K  -
zp1/data01@2025     0B      -    96K  -
```

To roll back to an earlier snapshot, you must destroy every snapshot taken after it.
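rollback -r deletes snapshots permanently, so it helps to see the list first. This is the same list the failed rollback printed above. In the sketch below, newer_than is a hypothetical name. It expects snapshot names in creation order, such as the output of zfs list -H -t snapshot -o name -s creation -r zp1/data01, and it keeps only snapshots of the same dataset.

```bash
# Hypothetical helper: print the snapshots that "zfs rollback -r TARGET"
# would destroy, i.e. snapshots of the same dataset created after TARGET.
# Input on stdin: snapshot names in creation order, for example from
#   zfs list -H -t snapshot -o name -s creation -r zp1/data01
# Returns 1 if TARGET does not appear in the input.
newer_than() {
    awk -v target="$1" '
        seen && index($0, ds "@") == 1 { print }
        $0 == target { seen = 1; ds = substr(target, 1, index(target, "@") - 1) }
        END { exit (seen ? 0 : 1) }'
}
```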

Restore from a snapshot

```bash
# Mount the snapshot on a temporary mount point and recover data manually
mkdir /recovery
mount -t zfs snapshot_name /recovery
```

9.2 Snapshot policy

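- Frequent: a snapshot every 15 minutes, keep 4

- Hourly: a snapshot every hour, keep 24

- Daily: a snapshot every day, keep 31

- Weekly: a snapshot every week, keep 7

- Monthly: a snapshot every month, keep 12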
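Each schedule above is a "keep the newest N" rule. The zfs-auto-snapshot script installed below handles retention itself. For a hand-rolled schedule, the selection step can be sketched as follows. prune_list is hypothetical, and the @hourly- naming in the usage comment is an assumed convention, not something ZFS defines.

```bash
# Hypothetical pruning helper: reads snapshot names oldest-first on stdin
# and prints every name except the newest KEEP, i.e. the ones to destroy.
prune_list() {
    case $1 in
        ''|*[!0-9]*) echo "error: keep count must be a non-negative integer" >&2
                     return 1 ;;
    esac
    awk -v keep="$1" '
        { names[NR] = $0 }
        END { for (i = 1; i <= NR - keep; i++) print names[i] }'
}

# Typical use (not run here); review the list before destroying:
#   zfs list -H -t snapshot -o name -s creation -r zp1/data01 \
#     | grep '@hourly-' | prune_list 24 | xargs -n 1 zfs destroy
```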

Script (zfs-auto-snapshot)

```bash
wget https://github.com/zfsonlinux/zfs-auto-snapshot/archive/upstream/1.2.4.tar.gz
tar -xzf 1.2.4.tar.gz
cd zfs-auto-snapshot-upstream-1.2.4
make install
```

10. Clones

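A ZFS clone is a writable file system created from a snapshot. Clones can only be created from snapshots, and a clone depends on its snapshot for as long as the clone exists. This means that once you have cloned a snapshot, you cannot destroy that snapshot directly. The clone relies on the data the snapshot provides, so you must destroy the clone before you destroy the snapshot.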

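Like taking a snapshot, creating a clone is nearly instantaneous and initially uses no extra space; the clone simply references all of the snapshot's data. As data in the clone is modified, the clone starts consuming space of its own, separate from the snapshot.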
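Before you try to destroy a snapshot, you can check which clones still depend on it. ZFS exposes the read-only clones property on snapshots (zfs get clones <snapshot>) and the origin property on clones. The sketch below works from the output of zfs list -H -o name,origin, where origin is - for datasets that are not clones. clones_of is a hypothetical helper name.

```bash
# Hypothetical helper: print the clones of the snapshot given as $1.
# Input on stdin: output of  zfs list -H -o name,origin
# (tab-separated; origin is "-" for datasets that are not clones).
# While this prints anything, the snapshot cannot be destroyed.
clones_of() {
    awk -F '\t' -v snap="$1" '$2 == snap { print $1 }'
}
```

Typical use: zfs list -H -o name,origin | clones_of zp1/data01@2025.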

1. Create

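To create a clone, run zfs clone with the snapshot to clone and the name of the new file system. The clone does not need to live in the same dataset as the snapshot, but it must be in the same storage pool.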

For example, to clone the zp1/data01@2025 snapshot and name the clone zp1/2025, run:

```
# zfs clone zp1/data01@2025 zp1/2025
# dd if=/dev/zero of=/zp1/2025/random.img bs=1M count=100
# zfs list -r zp1
```

2. Delete

```
[root@other ~]# zfs list -r zp1
NAME         USED  AVAIL  REFER  MOUNTPOINT
zp1          556K  9.63G    96K  /zp1
zp1/2025       0B  9.63G    96K  /zp1/2025
zp1/data01   160K  9.63G    96K  /data01
[root@other ~]# zfs destroy zp1/2025
[root@other ~]# zfs list -r zp1
NAME         USED  AVAIL  REFER  MOUNTPOINT
zp1          544K  9.63G    96K  /zp1
zp1/data01   160K  9.63G    96K  /data01
```

11. Monitoring

I/O

```
# Print I/O statistics for the pool every second (zp_name is the pool name, zp1 here).
# The first line reports statistics since boot; later lines cover one interval each.
[root@other ~]# zpool iostat zp_name 1
              capacity     operations     bandwidth
pool        alloc   free   read  write   read  write
----------  -----  -----  -----  -----  -----  -----
zp1          728K  4.97G      0      5  12.0K  60.6K
zp1          728K  4.97G      0      0      0      0
```
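zpool iostat also has a script-friendly form on OpenZFS releases that provide these flags: -H prints tab-separated rows without headers, -p prints exact byte counts, and -y drops the first (since boot) report. With those flags, you can average several samples as shown below. avg_write_bw is hypothetical and assumes the default seven columns (pool, alloc, free, read ops, write ops, read bandwidth, write bandwidth). It skips the first line itself, so do not combine it with -y.

```bash
# Hypothetical helper: average write bandwidth in bytes per second from
#   zpool iostat -Hp POOL INTERVAL COUNT
# Assumes the default seven tab-separated columns: pool, alloc, free,
# read ops, write ops, read bandwidth, write bandwidth.
# Line 1 (statistics since boot) is skipped; returns 1 if no samples remain.
avg_write_bw() {
    awk -F '\t' '
        NR > 1 { sum += $7; n++ }
        END {
            if (n == 0) exit 1
            printf "%.0f\n", sum / n
        }'
}
```

Typical use: zpool iostat -Hp zp1 1 6 | avg_write_bw.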