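1. Background

Alerting rules need to be designed along the following dimensions:

1.1 Business dimension

Different lines of business within a company have different metrics and alerting rules. For example, a ToC (consumer-facing) platform needs to monitor metrics such as order volume, inventory, and payment success rate to keep the business running normally.

1.2 Environment dimension

A company usually runs several environments, such as development, testing, pre-production, and production. Because each environment has different characteristics, each one needs its own alerting rules.

1.3 Application dimension

Different applications have different metrics and alerting rules. For example, when monitoring a web application, you need to watch metrics such as HTTP request failure rate, response time, and memory usage.

1.4 Infrastructure dimension

A company's infrastructure includes servers, network devices, and storage devices. When monitoring infrastructure, you need to watch metrics such as CPU utilization, disk space, and network bandwidth.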

2. Defining alerting rules

A simple example

groups:
- name: general.rules
  rules:
  - alert: InstanceDown
    expr: |
      up{job=~"other-ECS|k8s-nodes|prometheus|service_discovery_consul"} == 0
    for: 5s
    labels:
      severity: critical
    annotations:
      summary: "Instance {{ $labels.instance }} is down"
      description: "{{ $labels.instance }} (hostname: {{ $labels.hostname }}) has been down for more than 5 seconds."

In an alert file, a set of related rules is defined under one group, and each group can contain multiple rules.

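A rule consists of the following fields:

alert: the name of the alerting rule.

expr: the trigger condition, written as a PromQL expression. The alert is active for every time series that the expression returns.

for: an optional wait duration. The alert is sent only after the trigger condition has held continuously for this long. While waiting, a newly active alert is in the pending state.

labels: custom labels, which let you attach an additional set of labels to the alert.

annotations: a set of additional information, such as text describing the alert in detail. When the alert fires, the annotations are sent to Alertmanager together with it.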
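To make the effect of the for field concrete, here is a minimal Python sketch of the inactive, pending, and firing states described above. It is a simplified model and not Prometheus code: the AlertState class, the simulate helper, and the 15-second evaluation interval are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AlertState:
    """Tracks one alert instance across successive rule evaluations."""

    for_seconds: float
    active_since: Optional[float] = None  # time at which expr first matched

    def evaluate(self, now: float, condition_holds: bool) -> str:
        """Return 'inactive', 'pending', or 'firing' for this evaluation."""
        if not condition_holds:
            # One evaluation without a match resets the wait timer.
            self.active_since = None
            return "inactive"
        if self.active_since is None:
            self.active_since = now
        if now - self.active_since >= self.for_seconds:
            return "firing"
        return "pending"


def simulate(samples: List[bool], for_seconds: float, interval: float) -> List[str]:
    """Evaluate the rule once per interval; samples[i] says whether expr matched."""
    state = AlertState(for_seconds)
    return [state.evaluate(i * interval, holds) for i, holds in enumerate(samples)]


if __name__ == "__main__":
    # The condition holds for three evaluations, recovers once, then holds again.
    print(simulate([True, True, True, False, True], for_seconds=30, interval=15))
```

With for set to 0, the same model reports firing on the first matching evaluation, which is why rules using for: 0m notify immediately.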

3. Alerting rules

Reference: awesome-prometheus-alerts

3.0 prometheus.rules

prometheus.rules: |
  groups:
  - name: prometheus.rules
    rules:
    - alert: PrometheusErrorSendingAlertsToAnyAlertmanagers
      expr: |
        (rate(prometheus_notifications_errors_total{instance="localhost:9090", job="prometheus"}[5m]) / rate(prometheus_notifications_sent_total{instance="localhost:9090", job="prometheus"}[5m])) * 100 > 3
      for: 5m
      labels:
        severity: warning
      annotations:
        description: '{{ printf "%.1f" $value }}% minimum errors while sending alerts from Prometheus {{ $labels.namespace }}/{{ $labels.pod }} to any Alertmanager.'
    - alert: PrometheusNotConnectedToAlertmanagers
      expr: |
        max_over_time(prometheus_notifications_alertmanagers_discovered{instance="localhost:9090", job="prometheus"}[5m]) != 1
      for: 5m
      labels:
        severity: critical
      annotations:
        description: "Prometheus {{ $labels.namespace }}/{{ $labels.pod }} is not connected to Alertmanager as expected!"
    - alert: PrometheusRuleFailures
      expr: |
        increase(prometheus_rule_evaluation_failures_total{instance="localhost:9090", job="prometheus"}[5m]) > 0
      for: 5m
      labels:
        severity: critical
      annotations:
        description: 'Prometheus {{ $labels.namespace }}/{{ $labels.pod }} failed to evaluate {{ printf "%.0f" $value }} rules in the last 5 minutes.'
    - alert: PrometheusRuleEvaluationFailures
      expr: |
        increase(prometheus_rule_evaluation_failures_total[3m]) > 0
      for: 0m
      labels:
        severity: critical
      annotations:
        summary: Prometheus rule evaluation failures (instance {{ $labels.instance }})
        description: "Prometheus encountered {{ $value }} rule evaluation failures; please check promptly."
    - alert: PrometheusTsdbReloadFailures
      expr: |
        increase(prometheus_tsdb_reloads_failures_total[1m]) > 0
      for: 0m
      labels:
        severity: critical
      annotations:
        summary: Prometheus TSDB reload failures (instance {{ $labels.instance }})
        description: "Prometheus encountered {{ $value }} TSDB reload failures!"
    - alert: PrometheusTsdbWalCorruptions
      expr: |
        increase(prometheus_tsdb_wal_corruptions_total[1m]) > 0
      for: 0m
      labels:
        severity: critical
      annotations:
        summary: Prometheus TSDB WAL corruptions (instance {{ $labels.instance }})
        description: "Prometheus encountered {{ $value }} TSDB WAL corruptions!"

3.1 Node.rules

node.rules: |
  groups:
  - name: node.rules
    rules:
    - alert: NodeFilesystemUsage
      expr: |
        100 - (node_filesystem_avail_bytes / node_filesystem_size_bytes) * 100 > 85
      for: 1m
      labels:
        severity: warning
      annotations:
        summary: "Instance {{ $labels.instance }}: partition {{ $labels.mountpoint }} usage is too high"
        description: "{{ $labels.instance }} (hostname: {{ $labels.hostname }}): partition {{ $labels.mountpoint }} usage is above 85% (current value: {{ $value }})"
    - alert: NodeMemoryUsage
      expr: |
        100 - (node_memory_MemFree_bytes + node_memory_Cached_bytes + node_memory_Buffers_bytes) / node_memory_MemTotal_bytes * 100 > 85
      for: 5m
      labels:
        severity: warning
      annotations:
        summary: "Instance {{ $labels.instance }} memory usage is too high"
        description: "{{ $labels.instance }} (hostname: {{ $labels.hostname }}) memory usage is above 85% (current value: {{ $value }})"
    - alert: NodeCPUUsage
      expr: |
        (100 - (avg by (instance) (irate(node_cpu_seconds_total{job=~".*",mode="idle"}[5m])) * 100)) > 85
      for: 10m
      labels:
        severity: warning
      annotations:
        summary: "Instance {{ $labels.instance }} CPU usage is too high"
        description: "{{ $labels.instance }} (hostname: {{ $labels.hostname }}) CPU usage is above 85% (current value: {{ $value }})"
    - alert: TCPEstab
      expr: |
        node_netstat_Tcp_CurrEstab > 6000
      for: 5m
      labels:
        severity: warning
      annotations:
        summary: "Instance {{ $labels.instance }} has too many established TCP connections (> 6000)"
        description: "{{ $labels.instance }} (hostname: {{ $labels.hostname }}) has too many established TCP connections! (current value: {{ $value }})"
    - alert: TCP_TIME_WAIT
      expr: |
        node_sockstat_TCP_tw > 3000
      for: 5m
      labels:
        severity: warning
      annotations:
        summary: "Instance {{ $labels.instance }} has too many TCP connections in TIME_WAIT"
        description: "{{ $labels.instance }} (hostname: {{ $labels.hostname }}) has too many TCP connections in TIME_WAIT! (current value: {{ $value }})"
    - alert: TCP_Sockets
      expr: |
        node_sockstat_sockets_used > 10000
      for: 5m
      labels:
        severity: warning
      annotations:
        summary: "Instance {{ $labels.instance }} has too many sockets in use"
        description: "{{ $labels.instance }} (hostname: {{ $labels.hostname }}) has too many sockets in use! (current value: {{ $value }})"
    - alert: KubeNodeNotReady
      expr: |
        kube_node_status_condition{condition="Ready",status="true"} == 0
      for: 1m
      labels:
        severity: critical
      annotations:
        description: '{{ $labels.node }} has been NotReady for 1 minute.'
    - alert: KubernetesMemoryPressure
      expr: |
        kube_node_status_condition{condition="MemoryPressure",status="true"} == 1
      for: 2m
      labels:
        severity: critical
      annotations:
        summary: "Kubernetes memory pressure (instance {{ $labels.instance }})"
        description: "{{ $labels.node }} has MemoryPressure condition VALUE = {{ $value }}"
    - alert: KubernetesDiskPressure
      # "== 1" is required: without it the series with value 0 would also match.
      expr: |
        kube_node_status_condition{condition="DiskPressure",status="true"} == 1
      for: 2m
      labels:
        severity: critical
      annotations:
        summary: "Kubernetes disk pressure (instance {{ $labels.instance }})"
        description: "{{ $labels.node }} has DiskPressure condition."
    - alert: KubernetesJobFailed
      expr: |
        kube_job_status_failed > 0
      for: 1m
      labels:
        severity: warning
      annotations:
        summary: "Kubernetes Job failed (instance {{ $labels.instance }})"
        description: "Job {{ $labels.namespace }}/{{ $labels.job_name }} failed to complete."
    - alert: UnusualDiskWriteRate
      expr: |
        sum by (job,instance)(irate(node_disk_written_bytes_total[5m])) / 1024 / 1024 > 140
      for: 5m
      labels:
        severity: critical
        hostname: '{{ $labels.hostname }}'
      annotations:
        description: '{{ $labels.instance }} (hostname: {{ $labels.hostname }}) disk writes have stayed above 140 MB/s for 5 minutes (current value: {{ $value }}). Alibaba Cloud ESSD PL0 maximum throughput is 180 MB/s; PL1 maximum is 350 MB/s.'
    - alert: UnusualDiskReadRate
      expr: |
        sum by (job,instance)(irate(node_disk_read_bytes_total[5m])) / 1024 / 1024 > 140
      for: 5m
      labels:
        severity: critical
        hostname: '{{ $labels.hostname }}'
      annotations:
        description: '{{ $labels.instance }} (hostname: {{ $labels.hostname }}) disk reads have stayed above 140 MB/s for 5 minutes (current value: {{ $value }}). Alibaba Cloud ESSD PL0 maximum throughput is 180 MB/s; PL1 maximum is 350 MB/s.'
    - alert: UnusualNetworkThroughputIn
      expr: |
        sum by (job,instance)(irate(node_network_receive_bytes_total{job=~"aws-jd-monitor|k8s-nodes"}[5m])) / 1024 / 1024 > 80
      for: 5m
      labels:
        severity: critical
      annotations:
        description: '{{ $labels.instance }} (hostname: {{ $labels.hostname }}) inbound network traffic has stayed above 80 MB/s for 5 minutes (current value: {{ $value }})'
    - alert: UnusualNetworkThroughputOut
      expr: |
        sum by (job,instance)(irate(node_network_transmit_bytes_total{job=~"aws-hk-monitor|k8s-nodes"}[5m])) / 1024 / 1024 > 80
      for: 5m
      labels:
        severity: critical
      annotations:
        description: '{{ $labels.instance }} (hostname: {{ $labels.hostname }}) outbound network traffic has stayed above 80 MB/s for 5 minutes (current value: {{ $value }})'
    - alert: SystemdServiceCrashed
      expr: |
        node_systemd_unit_state{state="failed"} == 1
      # Added so that the rule matches the 5 minutes stated in the description.
      for: 5m
      labels:
        severity: warning
      annotations:
        description: 'Service {{ $labels.name }} on {{ $labels.instance }} (hostname: {{ $labels.hostname }}) has been in a failed state for 5 minutes; please handle it promptly.'
    - alert: HostDiskWillFillIn24Hours
      expr: |
        (node_filesystem_avail_bytes * 100) / node_filesystem_size_bytes < 10 and ON (instance, device, mountpoint) predict_linear(node_filesystem_avail_bytes{fstype!~"tmpfs"}[1h], 24 * 3600) < 0 and ON (instance, device, mountpoint) node_filesystem_readonly == 0
      for: 2m
      labels:
        severity: warning
      annotations:
        summary: Host disk will fill in 24 hours (instance {{ $labels.instance }})
        description: "{{ $labels.instance }} (hostname: {{ $labels.hostname }}): at the current write rate, the filesystem is predicted to run out of space within the next 24 hours!"
    - alert: HostOutOfInodes
      expr: |
        node_filesystem_files_free / node_filesystem_files * 100 < 10 and ON (instance, device, mountpoint) node_filesystem_readonly == 0
      for: 2m
      labels:
        severity: warning
      annotations:
        summary: Host out of inodes (instance {{ $labels.instance }})
        description: "{{ $labels.instance }} (hostname: {{ $labels.hostname }}) has less than 10% of its inodes free!\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
    - alert: HostOomKillDetected
      expr: |
        increase(node_vmstat_oom_kill[1m]) > 0
      for: 0m
      labels:
        severity: warning
      annotations:
        summary: Host OOM kill detected (instance {{ $labels.instance }})
        description: "{{ $labels.instance }} (hostname: {{ $labels.hostname }}): an OOM kill was detected on this host!"
    # The alert name means "node disk capacity".
    - alert: 节点磁盘容量
      expr: |
        max((node_filesystem_size_bytes{fstype=~"ext.?|xfs"} - node_filesystem_free_bytes{fstype=~"ext.?|xfs"}) * 100 / (node_filesystem_avail_bytes{fstype=~"ext.?|xfs"} + (node_filesystem_size_bytes{fstype=~"ext.?|xfs"} - node_filesystem_free_bytes{fstype=~"ext.?|xfs"}))) by (instance) > 80
      for: 1m
      labels:
        severity: warning
      annotations:
        summary: "Node disk partition usage is too high!"
        description: "{{ $labels.instance }} disk partition usage is above 80% (current usage: {{ $value }}%)"
    - alert: node_processes_threads
      expr: |
        (node_processes_threads / on(instance) min by(instance) (node_processes_max_processes or node_processes_max_threads) > 0.8)
      for: 1m
      labels:
        severity: warning
      annotations:
        summary: "Node thread count is high!"
        description: "{{ $labels.instance }} is using more than 80% of its process/thread limit (current usage: {{ $value | humanizePercentage }})"
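The HostDiskWillFillIn24Hours rule relies on predict_linear, which fits a straight line to the samples in the range by least squares and extrapolates it into the future. The following is a minimal Python sketch of that idea, not Prometheus's own implementation. The function name mirrors the PromQL function, while the sample data and the use of GiB as the unit are assumptions made for illustration.

```python
from typing import List, Tuple


def predict_linear(samples: List[Tuple[float, float]], eval_time: float,
                   horizon_seconds: float) -> float:
    """Fit value = intercept + slope * (t - eval_time) by least squares and
    return the fitted value horizon_seconds after eval_time."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples to fit a line")
    # Center timestamps on eval_time so the intercept is the fitted value "now".
    xs = [t - eval_time for t, _ in samples]
    ys = [v for _, v in samples]
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    variance = sum((x - mean_x) ** 2 for x in xs)
    if variance == 0:
        raise ValueError("samples must have distinct timestamps")
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return intercept + slope * horizon_seconds


if __name__ == "__main__":
    # Hypothetical data: one sample every 10 minutes for an hour, with
    # available space dropping from 50 GiB by 1 GiB per sample.
    samples = [(float(t), 50.0 - t / 600.0) for t in range(0, 3601, 600)]
    predicted = predict_linear(samples, eval_time=3600.0, horizon_seconds=24 * 3600)
    # A negative prediction (about -100 GiB here) corresponds to
    # "predict_linear(...) < 0" in the rule: the disk would be full within 24h.
    print(round(predicted, 3), predicted < 0)
```

Because a short burst of writes can produce a steep slope, the rule also requires that less than 10% of the space is currently available before it alerts.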

conntrack

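- Check whether the metric is being collected

curl -s localhost:9100/metrics | grep "node_nf_conntrack_"

Conntrack is a Linux kernel module that tracks connection state. For example, if your machine is a NAT gateway, Conntrack records the mapping from internal IPs and ports to external IPs and ports. When a reply packet arrives from outside, the kernel looks up the Conntrack table to find the corresponding internal IP and port and forwards the packet to the internal machine. You can inspect the contents of the table with the conntrack -L command.

The Conntrack table has a limited size, so once it is full, new connections cannot be established. The kernel then reports the "nf_conntrack: table full" error, which leads to production incidents.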
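As a rough illustration of the ratio that a conntrack alert watches, the Python sketch below parses node_exporter exposition text and computes how full the table is. The function name and the sample numbers are assumptions for illustration; in practice the text would come from the curl command shown above.

```python
from typing import Optional

ENTRIES = "node_nf_conntrack_entries"
LIMIT = "node_nf_conntrack_entries_limit"


def conntrack_usage_percent(metrics_text: str) -> Optional[float]:
    """Return 100 * entries / limit, or None if either metric is missing."""
    values = {}
    for line in metrics_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        name, _, rest = line.partition(" ")
        if name in (ENTRIES, LIMIT) and rest:
            values[name] = float(rest.split()[0])
    entries = values.get(ENTRIES)
    limit = values.get(LIMIT)
    if entries is None or not limit:
        return None
    return 100.0 * entries / limit


if __name__ == "__main__":
    # Hypothetical scrape output; real values depend on the host.
    sample = """\
# TYPE node_nf_conntrack_entries gauge
node_nf_conntrack_entries 58000
# TYPE node_nf_conntrack_entries_limit gauge
node_nf_conntrack_entries_limit 65536
"""
    usage = conntrack_usage_percent(sample)
    print(f"conntrack table usage: {usage:.1f}%", "ALERT" if usage > 85 else "ok")
```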

- alert: node_nf_conntrack
  expr: |
    100 * node_nf_conntrack_entries / node_nf_conntrack_entries_limit > 85
  for: 1m
  labels:
    severity: critical
  annotations:
    summary: "Node nf_conntrack table is about to be exhausted!"
    description: "{{ $labels.instance }} conntrack entries exceed 85% of node_nf_conntrack_entries_limit (current usage: {{ $value }}%)"

expr: |

100 * node_nf_conntrack_entries / node_nf_conntrack_entries_limit > 85

for: 1m

labels:

severity: critical

annotations:

summary: "节点node_nf_conntrack即将耗尽!"

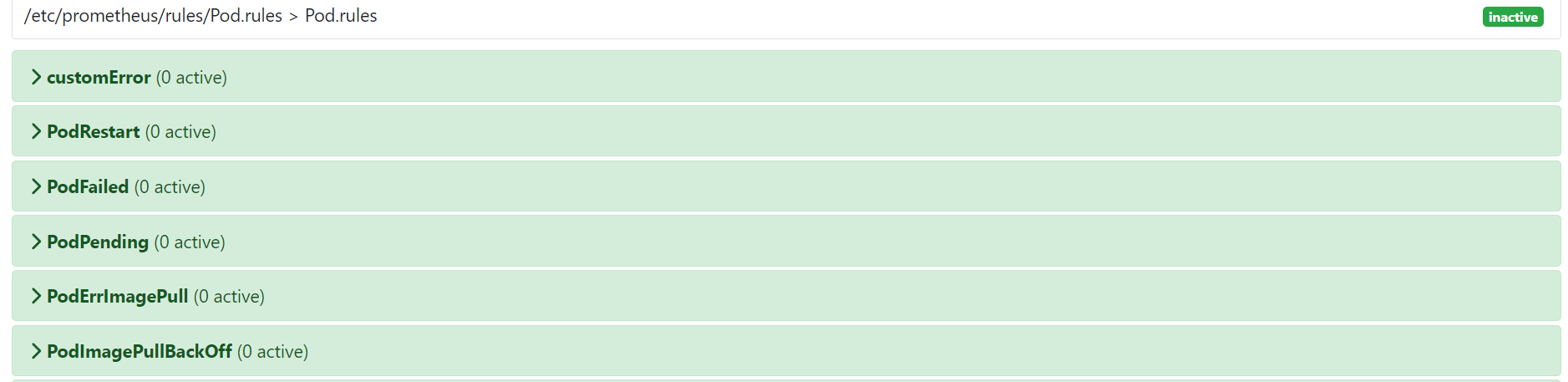

description: "{{$labels.instance }} node_nf_conntrack_entries_limit 大于85%(目前使用:{{$value}}%)"3.2 Pod.rules

- Result

Pod.rules: |
  groups:
  - name: Pod.rules
    rules:
    - alert: customError
      expr: |
        kube_pod_container_status_running{container="custom"} == 0
      for: 1m
      labels:
        severity: critical
      annotations:
        description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} custom service is abnormal (current value: {{ $value }})"
    - alert: PodRestart
      expr: |
        sum(changes(kube_pod_container_status_restarts_total[1m])) by (pod,namespace) > 1
      for: 1m
      labels:
        severity: warning
      annotations:
        description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} Pod restarted (current value: {{ $value }})"
    - alert: PodFailed
      expr: |
        sum(kube_pod_status_phase{phase="Failed"}) by (pod,namespace) > 0
      for: 5s
      labels:
        severity: critical
      annotations:
        description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} Pod is in the Failed phase (current value: {{ $value }})"
    - alert: PodPending
      expr: |
        sum(kube_pod_status_phase{phase="Pending"}) by (pod,namespace) > 0
      for: 30s
      labels:
        severity: critical
      annotations:
        description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} Pod is in the Pending phase (current value: {{ $value }})"
    - alert: PodErrImagePull
      expr: |
        sum by(namespace,pod)(kube_pod_container_status_waiting_reason{reason="ErrImagePull"}) == 1
      for: 1m
      labels:
        severity: warning
      annotations:
        description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} Pod is waiting with ErrImagePull (current value: {{ $value }})"
    - alert: PodImagePullBackOff
      expr: |
        sum by(namespace,pod)(kube_pod_container_status_waiting_reason{reason="ImagePullBackOff"}) == 1
      for: 1m
      labels:
        severity: warning
      annotations:
        description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} Pod is waiting with ImagePullBackOff (current value: {{ $value }})"
    - alert: PodCrashLoopBackOff
      expr: |
        sum by(namespace,pod)(kube_pod_container_status_waiting_reason{reason="CrashLoopBackOff"}) == 1
      for: 1m
      labels:
        severity: warning
      annotations:
        description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} Pod is waiting with CrashLoopBackOff (current value: {{ $value }})"
    - alert: PodInvalidImageName
      expr: |
        sum by(namespace,pod)(kube_pod_container_status_waiting_reason{reason="InvalidImageName"}) == 1
      for: 1m
      labels:
        severity: warning
      annotations:
        description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} Pod is waiting with InvalidImageName (current value: {{ $value }})"
    - alert: PodCreateContainerConfigError
      expr: |
        sum by(namespace,pod)(kube_pod_container_status_waiting_reason{reason="CreateContainerConfigError"}) == 1
      for: 1m
      labels:
        severity: warning
      annotations:
        description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} Pod is waiting with CreateContainerConfigError (current value: {{ $value }})"
    - alert: StatefulsetDown
      expr: |
        (kube_statefulset_status_replicas_ready / kube_statefulset_status_replicas_current) != 1
      for: 1m
      labels:
        severity: critical
      annotations:
        summary: Kubernetes StatefulSet down (instance {{ $labels.instance }})
        description: "{{ $labels.statefulset }} A StatefulSet went down!"
    - alert: StatefulsetReplicasMismatch
      expr: |
        kube_statefulset_status_replicas_ready != kube_statefulset_status_replicas
      for: 10m
      labels:
        severity: warning
      annotations:
        summary: StatefulSet replicas mismatch (instance {{ $labels.instance }})
        description: "{{ $labels.statefulset }} A StatefulSet does not match the expected number of replicas."
    - alert: PodDown
      expr: | # adjust the pod filter for your environment
        min_over_time(kube_pod_container_status_ready{pod!~".*job.*|.*jenkins.*"}[1m]) == 0
      for: 1s # how long the condition must hold before the alert is confirmed
      labels:
        severity: critical
        pod: '{{ $labels.pod }}'
      annotations:
        summary: 'Container: {{ $labels.container }} down'
        message: 'Namespace: {{ $labels.namespace }}, Pod: {{ $labels.pod }} service is unavailable'
    - alert: PodOomKiller
      expr: |
        (kube_pod_container_status_restarts_total - kube_pod_container_status_restarts_total offset 10m >= 1) and ignoring (reason) min_over_time(kube_pod_container_status_last_terminated_reason{reason="OOMKilled"}[10m]) == 1
      for: 0m
      labels:
        severity: critical
        pod: '{{ $labels.pod }}'
      annotations:
        summary: Kubernetes container oom killer (instance {{ $labels.instance }})
        description: "Container {{ $labels.container }} in pod {{ $labels.namespace }}/{{ $labels.pod }} has been OOMKilled {{ $value }} times in the last 10 minutes.\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
    - alert: PodCPUUsage
      expr: |
        sum(rate(container_cpu_usage_seconds_total{image!=""}[5m]) * 100) by (pod, namespace) > 90
      for: 5m
      labels:
        severity: warning
        pod: '{{ $labels.pod }}'
      annotations:
        description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} CPU usage is above 90% of one core (current value: {{ $value }})"
    - alert: PodMemoryUsage
      expr: |
        sum(container_memory_rss{image!=""}) by (pod, namespace) / sum(container_spec_memory_limit_bytes{image!=""}) by (pod, namespace) * 100 != +Inf > 85
      for: 5m
      labels:
        severity: critical
        pod: '{{ $labels.pod }}'
      annotations:
        description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} memory usage is above 85% of its limit (current value: {{ $value }})"
    - alert: KubeDeploymentError
      expr: |
        kube_deployment_spec_replicas{job="kubernetes-service-endpoints"} != kube_deployment_status_replicas_available{job="kubernetes-service-endpoints"}
      for: 3m
      labels:
        severity: critical
        pod: '{{ $labels.deployment }}'
      annotations:
        description: "Deployment {{ $labels.namespace }}/{{ $labels.deployment }} desired replica count does not match the number available (current value: {{ $value }})"
    - alert: coreDnsError
      expr: |
        kube_pod_container_status_running{container="coredns"} == 0
      for: 1m
      labels:
        severity: critical
      annotations:
        description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} CoreDNS service is abnormal (current value: {{ $value }})"
    - alert: kubeProxyError
      expr: |
        kube_pod_container_status_running{container="kube-proxy"} == 0
      for: 1m
      labels:
        severity: critical
      annotations:
        description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} kube-proxy service is abnormal (current value: {{ $value }})"
    - alert: PodNetworkReceive
      expr: |
        sum(rate(container_network_receive_bytes_total{image!="",name=~"^k8s_.*"}[5m]) / 1000) by (pod,namespace) > 60000
      for: 5m
      labels:
        severity: warning
      annotations:
        description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} inbound traffic is above 60 MB/s (current value: {{ $value }} KB/s)"
    - alert: PodNetworkTransmit
      expr: |
        sum(rate(container_network_transmit_bytes_total{image!="",name=~"^k8s_.*"}[5m]) / 1000) by (pod,namespace) > 60000
      for: 5m
      labels:
        severity: warning
      annotations:
        description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} outbound traffic is above 60 MB/s (current value: {{ $value }} KB/s)"
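The "!= +Inf" step in the PodMemoryUsage expression can look odd at first. A container without a memory limit reports a limit of 0, and PromQL float division by zero yields +Inf (or NaN for 0/0), so the filter keeps such pods from alerting. The Python sketch below imitates that behavior on plain numbers. The function names and the sample pods are hypothetical and only serve as an illustration.

```python
import math
from typing import Dict, Tuple

PodKey = Tuple[str, str]  # (namespace, pod)


def memory_percent(rss_bytes: float, limit_bytes: float) -> float:
    """Mimic PromQL float division: x / 0 is +Inf, and 0 / 0 is NaN."""
    if limit_bytes == 0:
        return math.nan if rss_bytes == 0 else math.inf
    return rss_bytes / limit_bytes * 100


def pods_over_threshold(pods: Dict[PodKey, Tuple[float, float]],
                        threshold: float = 85.0) -> Dict[PodKey, float]:
    """pods maps (namespace, pod) to (rss_bytes, limit_bytes)."""
    alerts = {}
    for key, (rss, limit) in pods.items():
        percent = memory_percent(rss, limit)
        # "!= +Inf" drops pods without a limit; "> 85" keeps only heavy users.
        # NaN fails the ">" comparison, so it is dropped as well.
        if percent != math.inf and percent > threshold:
            alerts[key] = percent
    return alerts


if __name__ == "__main__":
    MIB = 2 ** 20
    pods = {
        ("shop", "api-0"): (900 * MIB, 1024 * MIB),  # close to its limit
        ("shop", "batch-1"): (2048 * MIB, 0),        # no limit configured
        ("shop", "web-2"): (100 * MIB, 512 * MIB),   # well below its limit
    }
    for (namespace, pod), percent in pods_over_threshold(pods).items():
        print(f"{namespace}/{pod}: {percent:.1f}% of memory limit")
```

Only the first pod is reported. The pod without a limit is skipped even though it uses the most memory, so unlimited pods need a separate rule if they should be covered.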

groups:

- name: Pod.rules

rules:

- alert: customError

expr: |

kube_pod_container_status_running{container="custom"} == 0

for: 1m

labels:

severity: critical

annotations:

description: "命名空间: {{ $labels.namespace }} | Pod名称: {{$labels.pod }} custome服务异常 (当前值: {{ $value }})"

- alert: PodRestart

expr: |

sum(changes(kube_pod_container_status_restarts_total[1m])) by (pod,namespace) > 1

for: 1m

labels:

severity: warning

annotations:

description: "命名空间: {{ $labels.namespace }} | Pod名称: {{$labels.pod }} Pod重启 (当前值: {{ $value }})"

- alert: PodFailed

expr: |

sum(kube_pod_status_phase{phase="Failed"}) by (pod,namespace) > 0

for: 5s

labels:

severity: critical

annotations:

description: "命名空间: {{ $labels.namespace }} | Pod名称: {{$labels.pod }} Pod状态Failed (当前值: {{ $value }})"

- alert: PodPending

expr: |

sum(kube_pod_status_phase{phase="Pending"}) by (pod,namespace) > 0

for: 30s

labels:

severity: critical

annotations:

description: "命名空间: {{ $labels.namespace }} | Pod名称: {{$labels.pod }} Pod状态Pending (当前值: {{ $value }})"

- alert: PodErrImagePull

expr: |

sum by(namespace,pod)(kube_pod_container_status_waiting_reason{reason="ErrImagePull"}) == 1

for: 1m

labels:

severity: warning

annotations:

description: "命名空间: {{ $labels.namespace }} | Pod名称: {{$labels.pod }} Pod状态ErrImagePull (当前值: {{ $value }})"

- alert: PodImagePullBackOff

expr: |

sum by(namespace,pod)(kube_pod_container_status_waiting_reason{reason="ImagePullBackOff"}) == 1

for: 1m

labels:

severity: warning

annotations:

description: "命名空间: {{ $labels.namespace }} | Pod名称: {{$labels.pod }} Pod状态ImagePullBackOff (当前值: {{ $value }})"

- alert: PodCrashLoopBackOff

expr: |

sum by(namespace,pod)(kube_pod_container_status_waiting_reason{reason="CrashLoopBackOff"}) == 1

for: 1m

labels:

severity: warning

annotations:

description: "命名空间: {{ $labels.namespace }} | Pod名称: {{$labels.pod }} Pod状态CrashLoopBackOff (当前值: {{ $value }})"

- alert: PodInvalidImageName

expr: |

sum by(namespace,pod)(kube_pod_container_status_waiting_reason{reason="InvalidImageName"}) == 1

for: 1m

labels:

severity: warning

annotations:

description: "命名空间: {{ $labels.namespace }} | Pod名称: {{$labels.pod }} Pod状态InvalidImageName (当前值: {{ $value }})"

- alert: PodCreateContainerConfigError

expr: |

sum by(namespace,pod)(kube_pod_container_status_waiting_reason{reason="CreateContainerConfigError"}) == 1

for: 1m

labels:

severity: warning

annotations:

description: "命名空间: {{ $labels.namespace }} | Pod名称: {{$labels.pod }} Pod状态CreateContainerConfigError (当前值: {{ $value}})"

- alert: StatefulsetDown

expr: |

(kube_statefulset_status_replicas_ready / kube_statefulset_status_replicas_current) != 1

for: 1m

labels:

severity: critical

annotations:

summary: Kubernetes StatefulSet down (instance {{$labels.instance }})

description: "{{ $labels.statefulset }} A StatefulSet went down!"

- alert: StatefulsetReplicasMismatch

expr: |

kube_statefulset_status_replicas_ready != kube_statefulset_status_replicas

for: 10m

labels:

severity: warning

annotations:

summary: StatefulSet replicas mismatch (instance {{ $labels.instance }})

description: "{{ $labels.statefulset }} A StatefulSet does not match the expected number of replicas."

- alert: PodDown

expr: | #根据环境修改

min_over_time(kube_pod_container_status_ready{pod!~".*job.*|.*jenkins.*"} [1m])== 0

for: 1s # 持续多久确认报警信息

labels:

severity: critical

pod: '{{$labels.pod}}'

annotations:

summary: 'Container: {{ $labels.container }} down'

message: 'Namespace: {{ $labels.namespace }}, Pod: {{ $labels.pod }} 服务不可用'

- alert: PodOomKiller

expr: |

(kube_pod_container_status_restarts_total - kube_pod_container_status_restarts_total offset 10m >= 1) and ignoring (reason)min_over_time(kube_pod_container_status_last_terminated_reason{reason="OOMKilled"}[10m]) == 1

for: 0m

labels:

severity: critical

pod: '{{$labels.pod}}'

annotations:

summary: Kubernetes container oom killer (instance {{ $labels.instance }})

description: "Container {{ $labels.container }} in pod {{ $labels.namespace }}/{{ $labels.pod }} has been OOMKilled {{ $value }} times in the last 10 minutes.\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

- alert: PodCPUUsage

expr: |

sum(rate(container_cpu_usage_seconds_total{image!=""}[5m]) * 100) by (pod, namespace) > 90

for: 5m

labels:

severity: warning

pod: '{{$labels.pod}}'

annotations:

description: "命名空间: {{ $labels.namespace }} | Pod名称: {{$labels.pod }} CPU使用大于90% (当前值: {{ $value }})"

- alert: PodMemoryUsage

expr: |

sum(container_memory_rss{image!=""}) by(pod, namespace)/sum(container_spec_memory_limit_bytes{image!=""}) by(pod, namespace)* 100 != +inf > 85

for: 5m

labels:

severity: critical

pod: '{{$labels.pod}}'

annotations:

description: "命名空间: {{ $labels.namespace }} | Pod名称: {{$labels.pod }} 内存使用大于85% (当前值: {{ $value }})"

- alert: KubeDeploymentError

expr: |

kube_deployment_spec_replicas{job="kubernetes-service-endpoints"} != kube_deployment_status_replicas_available{job="kubernetes-service-endpoints"}

for: 3m

labels:

severity: critical

pod: '{{$labels.deployment}}'

annotations:

description: "Deployment {{ $labels.namespace }}/{{ $labels.deployment }} desired replicas do not match available replicas (current value: {{ $value }})"

- alert: coreDnsError

expr: |

kube_pod_container_status_running{container="coredns"} == 0

for: 1m

labels:

severity: critical

annotations:

description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} CoreDNS is not running (current value: {{ $value }})"

- alert: kubeProxyError

expr: |

kube_pod_container_status_running{container="kube-proxy"} == 0

for: 1m

labels:

severity: critical

annotations:

description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} kube-proxy is not running (current value: {{ $value }})"

- alert: PodNetworkReceive

expr: |

sum(rate(container_network_receive_bytes_total{image!="",name=~"^k8s_.*"}[5m]) /1000) by (pod,namespace) > 60000

for: 5m

labels:

severity: warning

annotations:

description: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} inbound traffic is above 60 MB/s (current value: {{ $value }} KB/s)"

- alert: PodNetworkTransmit

expr: |

sum(rate(container_network_transmit_bytes_total{image!="",name=~"^k8s_.*"}[5m]) /1000) by (pod,namespace) > 60000

for: 5m

labels:

severity: warning

annotations:

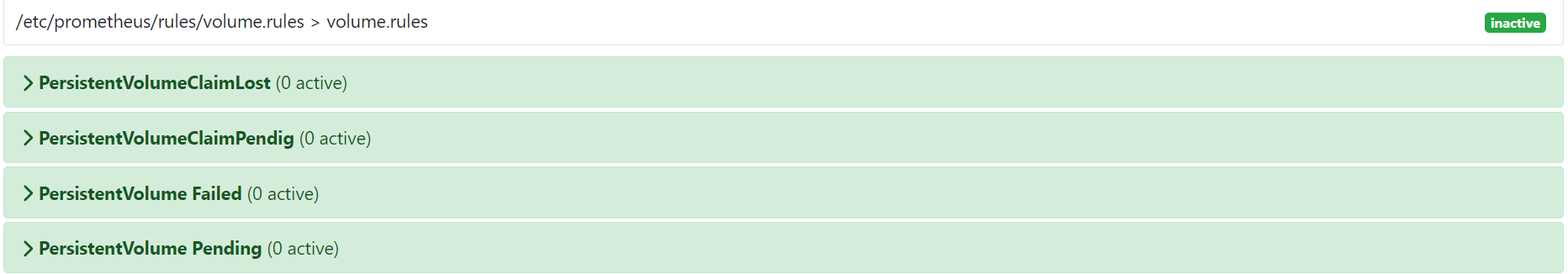

description: "命名空间: {{ $labels.namespace }} | Pod名称: {{$labels.pod }} 出口流量大于60MB/s (当前值: {{ $value }}/K/s)"3.3 Volume.rules

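Based on NFS

PVC-related metrics:

kube_persistentvolumeclaim_info is a metric exposed by kube-state-metrics that carries descriptive information about each PVC, such as its storage class and bound volume name, as labels. Requested storage is reported separately by kube_persistentvolumeclaim_resource_requests_storage_bytes.

kube_persistentvolumeclaim_status_phase reports the phase each PVC is in, for example Bound or Pending.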
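To make the role of the info metric concrete, here is a minimal sketch of a rule that joins the kubelet usage ratio with kube_persistentvolumeclaim_info so the alert also carries the storageclass label. The alert name and the 90% threshold are illustrative assumptions, and the join assumes exactly one info series per namespace/PVC pair (for example a single kube-state-metrics instance).

```yaml
groups:
  - name: volume.rules
    rules:
      # Hypothetical rule: the info metric always has the sample value 1,
      # so multiplying by it keeps the percentage and only copies labels.
      - alert: PVCUsageHighWithStorageClass
        expr: |
          (kubelet_volume_stats_used_bytes / kubelet_volume_stats_capacity_bytes) * 100
            * on(namespace, persistentvolumeclaim) group_left(storageclass)
            kube_persistentvolumeclaim_info
          > 90
        for: 5m
        labels:
          severity: warning
        annotations:
          description: "PVC {{ $labels.namespace }}/{{ $labels.persistentvolumeclaim }} (storage class {{ $labels.storageclass }}) is {{ printf \"%.1f\" $value }}% full"
```

If the join reports a many-to-many matching error, more than one info series exists for the same PVC and the expression needs an extra label selector.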

- Result

volume.rules: |

groups:

- name: volume.rules

rules:

- alert: PersistentVolumeClaimLost

expr: |

sum by(namespace, persistentvolumeclaim)(kube_persistentvolumeclaim_status_phase{phase="Lost"}) == 1

for: 2m

labels:

severity: warning

annotations:

description: "PersistentVolumeClaim {{ $labels.namespace}}/{{ $labels.persistentvolumeclaim }} is lost!"

- alert: PersistentVolumeClaimPending

expr: |

sum by(namespace, persistentvolumeclaim)(kube_persistentvolumeclaim_status_phase{phase="Pending"}) == 1

for: 2m

labels:

severity: warning

annotations:

description: "PersistentVolumeClaim {{ $labels.namespace }}/{{ $labels.persistentvolumeclaim }} is pending!"

- alert: PersistentVolume Failed

expr: |

sum(kube_persistentvolume_status_phase{phase="Failed",job="kubernetes-service-endpoints"}) by (persistentvolume) == 1

for: 2m

labels:

severity: warning

annotations:

description: "Persistent volume is failed state\n VALUE ={{ $value }}\n LABELS = {{ $labels }}"

- alert: PersistentVolume Pending

expr: |

sum(kube_persistentvolume_status_phase{phase="Pending",job="kubernetes-service-endpoints"}) by (persistentvolume) == 1

for: 2m

labels:

severity: warning

annotations:

description: "Persistent volume is pending state\n VALUE= {{ $value }}\n LABELS = {{ $labels }}"

- alert: PVCUsageHigh

expr: |

(kubelet_volume_stats_used_bytes / kubelet_volume_stats_capacity_bytes) * 100 > 85

for: 5m

labels:

severity: warning

annotations:

summary: "PVC usage is above 85% (instance {{ $labels.instance }})"

description: "PVC {{ $labels.persistentvolumeclaim }} in namespace {{ $labels.namespace }} is using more than 85%,请相关人员及时处理!" volume.rules: |

groups:

- name: volume.rules

rules:

- alert: PersistentVolumeClaimLost

expr: |

sum by(namespace, persistentvolumeclaim)(kube_persistentvolumeclaim_status_phase{phase="Lost"}) == 1

for: 2m

labels:

severity: warning

annotations:

description: "PersistentVolumeClaim {{ $labels.namespace}}/{{ $labels.persistentvolumeclaim }} is lost!"

- alert: PersistentVolumeClaimPendig

expr: |

sum by(namespace, persistentvolumeclaim)(kube_persistentvolumeclaim_status_phase{phase="Pendig"}) == 1

for: 2m

labels:

severity: warning

annotations:

description: "PersistentVolumeClaim {{ $labels.namespace}}/{{ $labels.persistentvolumeclaim }} is pendig!"

- alert: PersistentVolume Failed

expr: |

sum(kube_persistentvolume_status_phase{phase="Failed",job="kubernetes-service-endpoints"}) by (persistentvolume) == 1

for: 2m

labels:

severity: warning

annotations:

description: "Persistent volume is failed state\n VALUE ={{ $value }}\n LABELS = {{ $labels }}"

- alert: PersistentVolume Pending

expr: |

sum(kube_persistentvolume_status_phase{phase="Pending",job="kubernetes-service-endpoints"}) by (persistentvolume) == 1

for: 2m

labels:

severity: warning

annotations:

description: "Persistent volume is pending state\n VALUE= {{ $value }}\n LABELS = {{ $labels }}"

- alert: PVCUsageHigh

expr: |

(kubelet_volume_stats_used_bytes / kubelet_volume_stats_capacity_bytes) * 100 > 80

for: 5m

labels:

severity: warning

annotations:

summary: "PVC usage is above 85% (instance {{ $labels.instance }})"

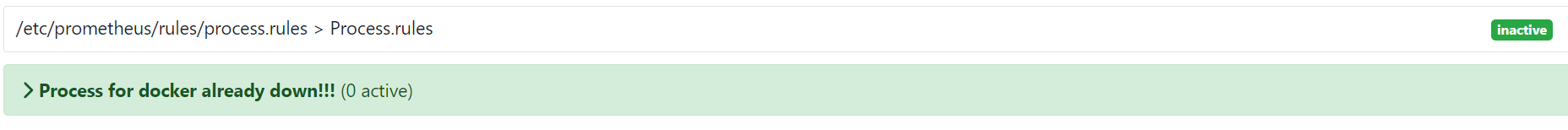

description: "PVC {{ $labels.persistentvolumeclaim }} in namespace {{ $labels.namespace }} is using more than 85%,请相关人员及时处理!"3.4 Process.rules

- Result

process.rules: |

groups:

- name: Process.rules

rules:

- alert: 'Process for docker already down!!!'

expr: |

(namedprocess_namegroup_num_procs{groupname="map[:docker]"}) < 1

for: 1m

labels:

severity: critical

annotations:

summary: "Process stopped working"

description: "Task: docker | expected process count: 1 | current value: {{ $value }}, please address it promptly!"

Adjust the rule to match your actual workloads.

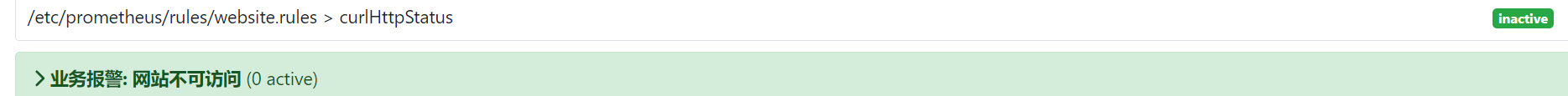
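As one way to adapt the rule to another workload, the sketch below watches a hypothetical nginx process group. The alert name and the groupname value `map[:nginx]` are assumptions that simply mirror the docker example; the real value depends on how your process-exporter names its groups, so check the label in Prometheus first.

```yaml
groups:
  - name: Process.rules
    rules:
      # Hypothetical rule for an nginx process group; the groupname value
      # must match what your process-exporter actually reports.
      - alert: ProcessNginxDown
        expr: namedprocess_namegroup_num_procs{groupname="map[:nginx]"} < 1
        for: 1m
        labels:
          severity: critical
        annotations:
          summary: "Process stopped working"
          description: "Process group: {{ $labels.groupname }} | expected count: 1 | current value: {{ $value }}"
```

Note that a comparison only fires while the series exists; if the exporter itself goes away, the series disappears and a separate `up`-based rule is needed to notice it.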

3.5 Website.rules

- Result

website.rules: |

groups:

- name: curlHttpStatus

rules:

- alert: "Business alert: website unreachable"

expr: probe_http_status_code{job="blackbox-external-website"} >= 422 and probe_success{job="blackbox-external-website"} == 0

for: 1m

labels:

severity: critical

annotations:

summary: 'Business alert: website unreachable'

description: '{{ $labels.instance }} is unreachable, please check promptly; current status code: {{ $value }}'

Adjust the job label to match your own environment.

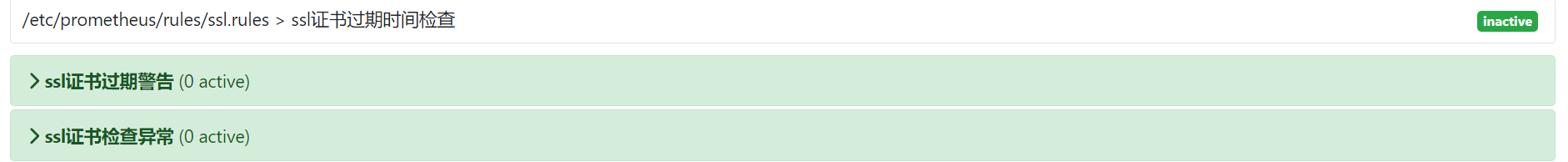
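The job label in the rule has to match a scrape job that probes your sites through blackbox_exporter. A minimal sketch of such a job follows, using the relabeling pattern from the blackbox_exporter documentation; the target URL, the `http_2xx` module name, and the exporter address `blackbox-exporter:9115` are assumptions to replace with your own values.

```yaml
scrape_configs:
  - job_name: blackbox-external-website   # must match the job label used in the rule
    metrics_path: /probe
    params:
      module: [http_2xx]                  # assumed module name from blackbox.yml
    static_configs:
      - targets:
          - https://example.com           # placeholder site to probe
    relabel_configs:
      # Pass the target URL to the exporter as the ?target= parameter.
      - source_labels: [__address__]
        target_label: __param_target
      # Keep the probed URL as the instance label shown in alerts.
      - source_labels: [__param_target]
        target_label: instance
      # Send the actual scrape to the blackbox exporter itself.
      - target_label: __address__
        replacement: blackbox-exporter:9115
```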

3.6 ssl.rules

- Result

ssl.rules: |

groups:

- name: "SSL certificate expiry check"

rules:

- alert: "SSL certificate expiring soon"

expr: (ssl_cert_not_after - time()) / 3600 / 24 < 7

for: 3h

labels:

severity: critical

annotations:

description: 'Certificate {{ $labels.instance }} expires in {{ printf "%.1f" $value }} days, please renew it as soon as possible'

summary: "SSL certificate expiring soon"

- alert: "SSL certificate check failed"

expr: ssl_probe_success == 0

for: 5m

labels:

severity: critical

annotations:

summary: "SSL certificate check failed"

description: "Certificate check for {{ $labels.instance }} failed, please investigate"

- Hot reload

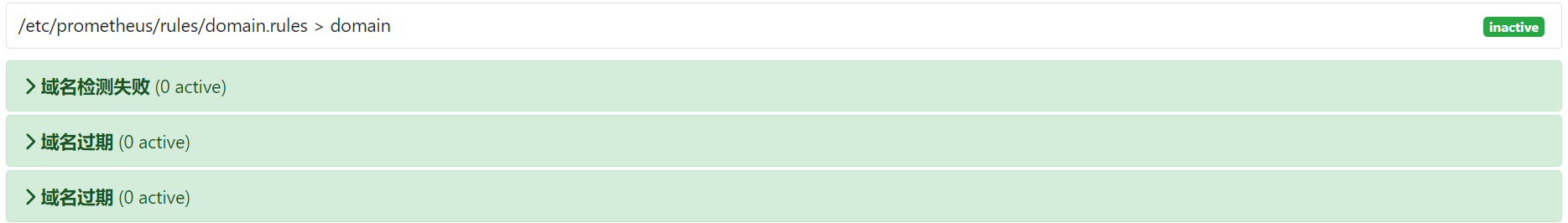

curl -XPOST http://prometheus.ikubernetes.net/-/reload

This endpoint is only available when Prometheus is started with the --web.enable-lifecycle flag.

3.7 domain.rules

- Result

groups:

- name: domain

rules:

- alert: Domain probe failed

expr: domain_probe_success == 0

for: 2h

labels:

severity: warning

annotations:

summary: '{{ $labels.instance }}: domain probe'

description: '{{ $labels.domain }}: domain probe failed, please check promptly!'

- alert: Domain expiring

expr: domain_expiry_days < 15

for: 2h

labels:

severity: warning

annotations:

summary: '{{ $labels.instance }}: domain expiring'

description: '{{ $labels.domain }} expires in less than 15 days, please check promptly!'

- alert: Domain expiring

expr: domain_expiry_days < 5

for: 2h

labels:

severity: warning

annotations:

summary: '{{ $labels.instance }}: domain expiring'

description: '{{ $labels.domain }} expires in less than 5 days, please check promptly!'

- Probing a specific domain

- name: blackbox-exporter

rules:

- alert: DomainAccessDelayExceeds0.5s

annotations:

description: "Domain {{ $labels.instance }} probe latency is above 0.5 s; current latency: {{ $value }} s"

summary: "Domain probe latency exceeds 0.5 s"

expr: sum(probe_http_duration_seconds{job=~"blackbox"}) by (instance) > 0.5

for: 1m

labels:

severity: warning

type: blackbox- name: blackbox-exporter

rules:

- alert: DomainAccessDelayExceeds0.5s

annotations:

description: 域名:{{ $labels.instance }} 探测延迟大于 0.5 秒,当前延迟为:{{ $value }}

summary: 域名探测,访问延迟超过 0.5 秒

expr: sum(probe_http_duration_seconds{job=~"blackbox"}) by (instance) > 0.5

for: 1m

labels:

severity: warning

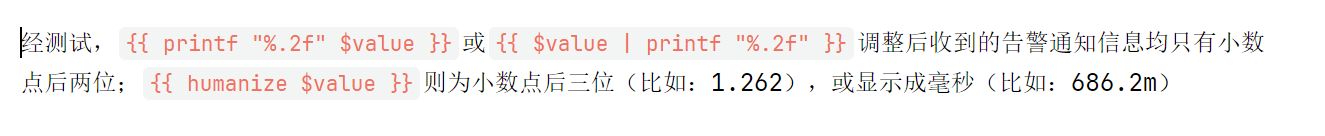

type: blackbox- 格式化

The $value variable in an alert rule can be formatted before it is displayed. There are three ways to format the number:

https://blog.csdn.net/weixin_43798031/article/details/127488164?spm=1001.2014.3001.5502
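The linked article is not reproduced here. As an illustration only, the sketch below shows three formatting options that Prometheus alert templates support: Go's `printf` (already used in the SSL rule above) and the built-in `humanize` and `humanizePercentage` functions. The annotation names are hypothetical and chosen just to keep the three variants side by side.

```yaml
annotations:
  # 1. printf: fixed number of decimal places, e.g. 93.46.
  value_printf: 'Current value: {{ printf "%.2f" $value }}'
  # 2. humanize: scales large or small numbers with metric prefixes (k, M, G, ...).
  value_humanize: 'Current value: {{ $value | humanize }}'
  # 3. humanizePercentage: renders a ratio between 0 and 1 as a percentage.
  value_percent: 'Current value: {{ $value | humanizePercentage }}'
```

`humanizePercentage` expects a ratio, so it fits expressions that are not already multiplied by 100; for the percentage-based rules in this document, `printf` is the more direct choice.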